Authorship Forensics from Twitter Data

A Year Ago Today

On September 5 of last year, The New York Times took the unusual step of publishing an op-ed anonymously. It began “I Am Part of the Resistance inside the Trump Administration,” and quickly became known as the “Resistance” op-ed. From the start, there was wide‐ranging speculation as to who might have been the author(s); to this day, that has not been settled. (Spoiler alert: it will not be settled in this blog post, either. But that’s getting ahead of things.) When I learned of this op-ed, the first thing that came to mind, of course, was, “I wonder if authorship attribution software could….” This was followed by, “Well, of course it could. If given the right training data.” When time permitted, I had a look on the internet into where one might find training data, and for that matter who were the people to consider for the pool of candidate authors. I found at least a couple of blog posts that mentioned the possibility of using tweets from administration officials. One gave a preliminary analysis (with President Trump himself receiving the highest score, though by a narrow margin—go figure). It even provided a means of downloading a dataset that the poster had gone to some work to cull from the Twitter site.

The code from that blog was in a language/script in which I am not fluent. My coauthor on two authorship attribution papers (and other work), Catalin Stoean, was able to download the data successfully. I first did some quick validation (to be seen) and got solid results. Upon setting the software loose on the op-ed in question, a clear winner emerged. So for a short time I “knew” who wrote that piece. Except. I decided more serious testing was required. I expanded the candidate pool, in the process learning how to gather tweets by author (code for which can be found in the downloadable notebook). At that point I started getting two more-or-less-clear signals. Okay, so two authors. Maybe. Then I began to test against op-eds of known (or at least stated) authorship. And I started looking hard at winning scores. Looking into the failures, it became clear that my data needed work (to be described). Rinse, repeat. At some point I realized: (1) I had no idea who wrote the anonymous op-ed; and (2) interesting patterns were emerging from analysis of other op-eds. So it was the end of one story (failure, by and large) but the beginning of another. In short, what follows is a case study that exposes both strengths and weaknesses of stylometry in general, and of one methodology in particular.

Stylometry Methodology

In two prior blog posts, I wrote about determining authorship, the main idea being to use data of known provenance coupled with stylometry analysis software to deduce authorship of other works. The first post took up the case of the disputed Federalist Papers. In the second, as a proof of concept I split a number of Wolfram blog posts into training and test sets, and used the first in an attempt to verify authorship in the second. In both of these, the training data was closely related to the test sets, insofar as they shared genres. For purposes of authorship forensics, e.g. discovering who wrote a given threatening email or the like, there may be little or nothing of a similar nature to use for comparison, and so one must resort to samples from entirely different areas. Which is, of course, exactly the case in point.

Let’s begin with the basics. The general idea is to use one or more measures of similarity between works of known authorship and a given text in order to determine which author is the likeliest to have written that text. The “closed” problem assumes it was in fact written by one of the authors of the known texts. In the real world, one deals with the “open” variant, where the best answer might be “none of the above.”

How does one gauge similarity? Various methods have been proposed, the earliest involving collection of various statistics, such as average sentence length (in words and/or characters), frequency of usage of certain words (common and uncommon words both can play important roles here), frequency of certain character combinations and many others. Some of the more recent methods involve breaking sentences into syntactic entities and using those for comparison. There are also semantic possibilities, e.g. using frequencies of substitution words. Overall, the methods thus break into categories of lexical, syntactic and semantic, and each of these has numerous subcategories as well as overlap with the other categories.

To date, there is no definitive method, and very likely there never will be. Even the best class is not really clear. But it does seem that, at this time, certain lexical‐based methods have the upper hand, at least as gauged by various benchmark tests. Among these, ones that use character sequence (“n‐gram”) frequencies seem to perform particularly well, and that is the kind of method I use for my analyses.

Origin of the Method in Use

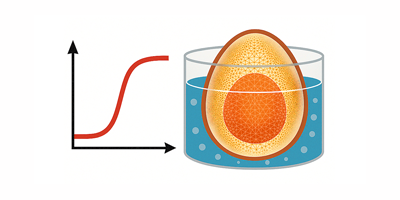

It is an odd duckling, as n‐gram methods go. We never really count anything. Instead we turn text samples into images, and use further processing on the images to obtain a classifier. But n‐gram frequencies are implicit to the method of creating images. The process extends Joel Jeffrey’s “Chaos Game Representation” (CGR), used for creating images from genetic nucleotide sequences, to alphabets of more than four characters. Explained in the CGR literature is the “Frequency Chaos Game Representation” (FCGR), which creates a pixelated grayscale array for which the darkness of pixels corresponds to n‐gram frequencies.
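To make the chaos game idea concrete, here is a minimal sketch of the original four-letter CGR and FCGR for nucleotide sequences. It is not the text extension used in this post, which handles larger alphabets and is not shown here. The corner layout and the names `corners`, `cgrPoints` and `fcgr` are illustrative assumptions, not taken from the notebook.

```wolfram
(* Corners of the unit square, one per nucleotide. This layout is an assumption; conventions vary. *)
corners = <|"A" -> {0, 0}, "C" -> {0, 1}, "G" -> {1, 1}, "T" -> {1, 0}|>;

(* Chaos game: start at the center and move halfway toward the corner of each successive letter. *)
cgrPoints[seq_String] :=
  Rest[FoldList[(#1 + #2)/2 &, {1/2, 1/2}, Lookup[corners, Characters[seq]]]];

(* FCGR: count the points that fall in each cell of a 2^k by 2^k grid.
   The cell of a point is fixed by the last k letters, so the counts are k-gram counts.
   The first k - 1 points are dropped because they do not yet encode a full k-gram. *)
fcgr[seq_String, k_Integer?Positive] := Module[{n = 2^k, cells},
  cells = Floor[n*Drop[cgrPoints[seq], k - 1]] + 1;
  Normal[SparseArray[Normal[Counts[cells]], {n, n}]]
];
```

Evaluating `ArrayPlot[fcgr[seq, 3]]` for a long sequence `seq` should then display the 8 by 8 array of 3-gram counts as a grayscale image.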

What with having other projects (oh, and that small matter of a day job), I put this post on the back burner for a while. When I returned after a few months, I had learned a few more tricks. The biggest was that I had seen how very simple neural networks might be trained to distinguish numeric vectors even at high dimension. As it happens, the nearest-image code I had written and applied to FCGR images creates vectors in the process of reducing the dimension of the images. This can be done by flattening the image matrices into vectors and then using the singular value decomposition to retain the “strong” components of the vectorized images. (There is an optional prior dimension-reduction step, using a Fourier trig transform and retaining only low-frequency components. It seems to work well for FCGR images from genomes, but not so well for our extension of FCGR to text.) We then trained neural network classifiers on FCGR images produced from text training sets of several authorship benchmark suites. Our test results were now among the top scores for several such benchmarks.
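The flatten-then-SVD step can be sketched roughly as follows. This is an illustration under stated assumptions only: the helper names `reduceImages` and `projectImage` are hypothetical, the number of components to keep is left to the caller, and the optional Fourier step is omitted.

```wolfram
(* Hypothetical helper: flatten each image to a row vector, then keep the
   `keep` strongest singular components of the resulting matrix. *)
reduceImages[images_List, keep_Integer?Positive] := Module[{mat, u, w, v},
  mat = N[Flatten /@ images];                         (* one row per image *)
  {u, w, v} = SingularValueDecomposition[mat, keep];  (* truncated SVD *)
  <|"Vectors" -> u . w, "Basis" -> v|>                (* u.w equals mat.v *)
];

(* Project a new image into the same reduced space. *)
projectImage[image_, basis_] := N[Flatten[image]] . basis;
```

The rows of `"Vectors"` are the reduced training vectors that would be fed to a classifier, and a test image is mapped into the same space with `projectImage` before it is scored.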

Obtaining and Cleaning Text from Twitter

As I’ve mentioned, experiments with Twitter data showed some signs of success. I did need to expand the author pool, though, since it seemed like a good idea to include any number of administration officials who were—let us not mince words—being publicly accused by one or another person of having written the op-ed in question. It also seemed reasonable to include family members (some of whom are advisors in an official capacity), undersecretaries and a few others who are in some sense high-ranking but not in official appointments. So how to access the raw training data? It turns out that the Wolfram Language has a convenient interface to Twitter. I had to first create a Twitter account (my kids still can’t believe I did this), and then evaluate this code:

Engage with the code in this post by downloading the Wolfram Notebook

    twitter = ServiceConnect["Twitter", "New"];

A webpage pops up requiring that I click a permission box, and we’re off and running:

Now I can download tweets for a given tweeter (is that the term?) quite easily. Say I want to get tweets from Secretary of State Mike Pompeo. A web search indicates that his Twitter handle is @SecPompeo. I can gather a sample of his tweets, capping at a maximum of 5,000 tweets so as not to overwhelm the API.

    secPompeoTweets = twitter["TweetList", "Username" -> "SecPompeo", MaxItems -> 5000];

Tweets are fairly short as text goes, and the methods I use tend to do best with a minimum of a few thousand characters for each FCGR image. So we concatenate tweets into strings of a fixed size, create FCGR images, reduce dimension and use the resulting vectors to train a neural net classifier.
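As a rough illustration of the concatenation step, the following sketch joins a list of tweet strings and cuts the result into equal-size pieces. The helper name `chunkTweets`, the space used as a separator and the choice to drop a trailing partial piece are assumptions, not details taken from the notebook.

```wolfram
(* Hypothetical helper: join tweets with spaces, then cut the text into
   consecutive chunks of `size` characters. A trailing partial chunk is
   dropped so that every chunk has the same length. *)
chunkTweets[tweets : {___String}, size_Integer?Positive] :=
  StringJoin /@ Partition[Characters[StringRiffle[tweets, " "]], size];
```

For example, `chunkTweets[{"abc", "de", "fgh"}, 4]` gives `{"abc ", "de f"}`. In practice the size would be a few thousand characters, in line with the minimum mentioned above.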

A first experiment was to divide each author’s set of strings into two parts, train on one set and see how well the second set was recognized. The first thing I noticed was that underrepresented authors fared poorly. But this was really restricted to those with training data below a threshold; above that, most were recognized. So I had to exclude a few candidates on the basis of insufficient training data. A related issue is balance, in that authors with a huge amount of training data can sort of overwhelm the classifier, biasing it against authors with more modest amounts of training text. A heuristic I found to work well is to cap the training text size at around three times the minimum threshold. The pool meeting the threshold is around 30 authors (I have on occasion added some, found they do not show any sign of Resistance op-ed authorship and so removed them). With this pool, a typical result is a recognition rate above 90%, with some parameter settings taking the rate over 95%. So this was promising.
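The threshold-and-cap heuristic might be sketched as below. It assumes the training data is held as an association from author to a list of text chunks, and that the threshold is counted in chunks; both are assumptions made for illustration, as is the name `balancePool`.

```wolfram
(* Hypothetical helper: drop authors with fewer than `minChunks` chunks,
   and cap every remaining author at three times that minimum. *)
balancePool[data_Association, minChunks_Integer?Positive] :=
  (Take[#, UpTo[3*minChunks]] &) /@ Select[data, Length[#] >= minChunks &];
```

With a threshold of 3, an author with 10 chunks keeps the first 9, an author with 4 keeps all 4 and an author with 2 is excluded.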

Authorship of Op-Eds Can Be Trickier Than You Would Expect

When set loose on op-eds, however, the results were quite lame. I started looking harder at the raw training data. Lo and behold, it is not so clean as I might have thought. Silly me, I had no idea people might do things with other people’s tweets, like maybe retweet them (yes, I really am that far behind the times). So I needed some way to clean the data.

You can find the full code in the downloadable notebook, but the idea is to use string replacements to remove tweets that begin with “RT”, contain “Retweeted” or have any of several other unwanted features. While at it, I remove the URL parts (strings beginning with “http”). At this point I have raw data that validates quite well. Splitting into training vs. validation gives a recognition rate of 98.9%.
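A simplified sketch of that cleaning step follows. It covers only the rules named above (retweets and URLs) and assumes the tweets are already available as a list of plain strings; the name `cleanTweets` is hypothetical, and the notebook code handles further cases.

```wolfram
(* Hypothetical helper, a simplified stand-in for the notebook code. *)
cleanTweets[tweets : {___String}] := Module[{kept},
  (* Drop retweets: anything that starts with "RT" or contains "Retweeted". *)
  kept = Select[tweets, ! StringStartsQ[#, "RT"] && StringFreeQ[#, "Retweeted"] &];
  (* Strip URL parts: "http" followed by any run of non-whitespace characters. *)
  StringTrim /@ StringReplace[kept, ("http" ~~ Except[WhitespaceCharacter] ...) -> ""]
];
```

For instance, `cleanTweets[{"RT @x: hello", "Great meeting today https://t.co/abc"}]` returns `{"Great meeting today"}`.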

Here’s a plot of the confusion matrix:

(To those for whom this term is itself a confusion, this matrix indicates by color strength the positioning of the larger elements. The off‐diagonal elements indicate incorrect attributions, and so a strongly colored main diagonal is a sign that things are working well.)

Now we move on to a form of “transference,” wherein we apply a classifier trained on Twitter text to authorship assessment of op-eds. The first thing to check is that this might be able to give plausible results. We again do a form of validation, by testing against articles of known authorship. Except we do not really know who writes what, do we? For tweets, it seems likely that the tweeter is the actual owner of the Twitter handle (we will give a sensible caveat on this later); for articles, it is a different matter. Why? We’ll start with the obvious: high-level elected politicians and appointed officials are often extremely busy. And not all possess professional writing skills. Some have speechwriters who craft works based on draft outlines, and this could well extend to written opinion pieces. And they may have editors and technical assistants who do heavy revising. Ideas may also be shared among two or more people, and contributions on top of initial drafts might be solicited. On occasion, two people might agree that what one says would get wider acceptance if associated with the other. The list goes on, the point being that the claimed authorship can depart in varying degrees from the actual, for reasons ranging from quite legitimate, to inadvertent, to (let’s call it for what it is) shady.

Some Testing on Op-Ed Pieces

For the actual test data, I located and downloaded numerous articles available electronically on the internet, which appeared under the bylines of numerous high-ranking officials. As most of these are covered by copyright, I will need to exclude them from the notebook, but I will give appropriate reference information for several that get used explicitly here. I also had to get teaser subscriptions to two papers. These cost me one and two dollars, respectively (I wonder if Wolfram Research will reimburse me; I should try to find out). Early testing showed that tweaking parameters in the neural net training traded some strengths for others, so the protocol I used involved several classifiers trained with slightly different parameters, and aggregation of scores to determine the outcomes. This has at least two advantages over using just one trained neural net. For one, it tends to lower variance in outcomes caused by the randomness intrinsic to neural net training methods. The other is that it usually delivers outcomes that are near, or sometimes even exceed, the best of the individual classifiers. These observations came from when Catalin and I tested against some benchmark suites. It seems like a sensible route to take here as well.
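One simple way to aggregate scores is to average them per candidate across the classifiers. The text above does not say which aggregation was actually used, so the mean here is an assumption, as are the name `aggregateScores` and the representation of each classifier's output as an association from author to score.

```wolfram
(* Hypothetical aggregation: average each author's score over all
   classifiers, then list the authors from highest to lowest score. *)
aggregateScores[scores : {__Association}] := Reverse[Sort[Merge[scores, Mean]]];
```

Given the per-classifier scores `<|"a" -> 1, "b" -> 3|>` and `<|"a" -> 3, "b" -> 5|>`, this returns `<|"b" -> 4, "a" -> 2|>`.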

Labor Secretary Alexander Acosta

We begin with Secretary of Labor Alexander Acosta. Because they were easy to find and download, I took for the test data two items that are not op-eds, but rather articles found at a webpage of White House work. One is from July 23, 2018, called “Reinvesting in the American Workforce.” The other is “Trump Is Strengthening US Retirement Security. Here’s How.” from August 31, 2018. The following graph, intentionally unlabeled, shows a relatively high score above 9, a second score around 6.5 and all the rest very close to one another at a bit above 5:

The high score goes to Secretary Acosta. The second-highest goes to Ivanka Trump. This might indicate a coincidental similarity of styles. Another possibility is she might have contributed some ideas to one or the other of these articles. Or maybe her tweets, like some of her op-ed articles, overlap with these articles in terms of subject matter; as a general remark, genre seems to play a role in stylometry similarities.

Ivanka Trump

Having mentioned Ivanka Trump, it makes sense to have a look at her work next. First is “Empower Women to Foster Freedom,” published in The Wall Street Journal on February 6, 2019. The plot has one score way above the others; it attributes the authorship to Ms. Trump:

The next is “Why We Need to Start Teaching Tech in Kindergarten” from The New York Post on October 4, 2017. The plot now gives Ms. Trump the second top score, with the highest going to Secretary Acosta. This illustrates an important point: we cannot always be certain we have it correct, and sometimes it might be best to say “uncertain” or, at most, “weakly favors authorship by candidate X.” And it is another indication that they share similarities in subject matter and/or style:

Last on the list for Ivanka Trump is “The Trump Administration Is Taking Bold Action to Combat the Evil of Human Trafficking” from The Washington Post on November 29, 2018. This time the plot suggests a different outcome:

Now the high score goes to (then) Secretary of Homeland Security Kirstjen Nielsen. But again, this is not significantly higher than the low scores. It is clearly a case of “uncertain.” The next score is for (then) National Security Advisor and former Ambassador to the United Nations John Bolton; Ms. Trump’s score is fourth.

Donald Trump Jr.

We move now to Donald Trump Jr. Here, I combine two articles. One is from The Denver Post of September 21, 2018, and the other is from the Des Moines Register of August 31, 2018. And the high score, by a fair amount, does in fact go to Mr. Trump. The second score, which is noticeably above the remaining ones, goes to President Trump:

Secretary of Health and Human Services Alex Azar

Our test pieces are “Why Drug Prices Keep Going Up—and Why They Need to Come Down” from January 29, 2019, and a USA Today article from September 19, 2018. The graph shows a very clear outcome, and indeed it goes to Secretary Alex Azar:

We will later see a bit more about Secretary Azar.

Secretary of Housing and Urban Development Ben Carson

Here, we can see that a USA Today article from December 15, 2017, is very clearly attributed to Secretary Ben Carson:

I also used “My American Dream” from Forbes on February 20, 2016, and a Washington Examiner piece from January 18, 2019. In each case, it is Secretary Azar who has the high score, with Secretary Carson a close second on one and a distant second on the other. It should be noted, however, that the top scores were not so far above the average as to be taken as anywhere approaching definitive.

Secretary of Education Betsy DeVos

I used an article from The Washington Post of November 20, 2018. An interesting plot emerges:

The top score goes to Secretary Betsy DeVos, with a close second for Secretary Azar. This might indicate a secondary coauthor status, or it might be due to similarity of subject matter: I now show another article by Secretary Azar that explicitly mentions a trip to a school that he took with Secretary DeVos. It is titled “Put Mental Health Services in Schools” from August 10, 2018:

In this instance she gets the top classifier score, though Azar’s is, for all intents and purposes, tied. It would be nice to know if they collaborate: that would be much better than, say, turf wars and interdepartmental squabbling.

Classification Failures

I did not include former US envoy Brett McGurk in the training set, so it might be instructive to see how the classifier performs on an article of his. I used one from January 18, 2019 (wherein he announces his resignation); the topic pertains to the fight against the Islamic State. The outcome shows a clear winner (former Ambassador to the UN Bolton). This might reflect commonality of subject matter of the sort that might be found in the ambassador’s tweets:

Ambassador Bolton was also a clear winner for authorship of an article by former Ambassador to the UN Nikki Haley (I had to exclude Ms. Haley from the candidate pool because the quantity of tweets was not quite sufficient for obtaining viable results). Moreover, a similar failure appeared when I took an article by former president George W. Bush. In that instance, the author was determined to be Secretary Azar.

An observation is in order, however. When the pool is enlarged, such failures tend to be less common. The more likely scenario, when the actual author is not among the candidates, is that there is no clear winner.
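The notion of “no clear winner” can be made operational with a margin test on the scores. The rule below is purely a hypothetical illustration, not the criterion used for the plots in this post: it names a winner only when the top score exceeds the mean of the remaining scores by at least `minGap` of their standard deviations, and the default of 3 is an arbitrary choice.

```wolfram
(* Hypothetical decision rule: return the top-scoring author only if that
   score stands well clear of the rest; otherwise report no clear winner.
   Needs at least three candidates, with the non-top scores not all equal. *)
clearWinner[scores_Association /; Length[scores] >= 3, minGap_ : 3] :=
  Module[{sorted, rest, gap},
    sorted = Reverse[Sort[scores]];   (* highest score first *)
    rest = Rest[Values[sorted]];      (* every score except the top one *)
    gap = (First[sorted] - Mean[rest])/StandardDeviation[rest];
    If[gap >= minGap, First[Keys[sorted]], Missing["NoClearWinner"]]
  ];
```

With scores of 9, 6, 5 and 4 the top candidate is returned, whereas with 6, 6, 5 and 4 the result is `Missing["NoClearWinner"]`.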

Secretary of Transportation Elaine Chao

Here I used a piece in the Orlando Sentinel from February 15, 2018, called “Let’s Invest in US Future.” Summary conclusion: Secretary Elaine Chao (presumably the person behind the tweets from handle @USDOT) is a very clear winner:

President Donald Trump

An article under President Donald Trump’s name was published in the Washington Post on April 30, 2017. The classifier does not obtain scores sufficiently high as to warrant attribution. That stated, one of the two top scores is indeed from the Twitter handle @realDonaldTrump:

A second piece under the president’s name is a whitehouse.gov statement, issued on November 20, 2018. The classifier in this instance gives a clear winner, and it is President Trump. The distant second is Ambassador Bolton, and this is quite possibly from commonality of subject matter (and articles by the ambassador); the topic is US relations with Saudi Arabia and Iran:

USA Today published an article on October 10, 2018, under the name of President Donald Trump. The classifier gives the lone high score to Secretary Azar. Not surprisingly, there is overlap in subject insofar as the article was about health plans:

Moreover, when I use the augmented training set, the apparent strong score for Secretary Azar largely evaporates. He does get the top score in the following graph, but it is now much closer to the average scores. The close second is Senator Bernie Sanders. Again, I would surmise there is commonality in the subject matter from their tweets:

Some Others in Brief

A couple of pieces published under the name of Ambassador Bolton were classified fairly strongly as being authored by him. Two by (then) Secretary Nielsen were strongly classified as hers, while a third was in the “no clear decision” category. Similarly, an article published by Wilbur Ross is attributed to him, with two others getting no clear winner from the classifier. An article under Mike Pompeo’s name was attributed to him by a fair margin. Another was weakly attributed to him, with the (then) State Department Spokesperson Heather Nauert receiving a second place score well above the rest. Possibly one wrote and the other revised, or this could once again just be due to similarity of subject matter in their respective tweets. One article published under Sonny Perdue’s name is strongly attributed to him, with another weakly so. A third has Donald Trump Jr. and Secretary DeVos on top but not to any great extent (certainly not at a level indicative of authorship on their parts).

I tested two that were published under Director of the National Economic Council Lawrence Kudlow’s name. They had him a close second to the @WhiteHouseCEA Twitter handle. I will say more about this later, but in brief, that account is quite likely to post content by or about Mr. Kudlow (among others). So no great surprises here.

An article published under the name of Vice President Mike Pence was attributed to him by the classifier; two others were very much in the “no clear winner” category. One of those, an op-ed piece on abortion law, showed an interesting feature. When I augmented the training set with Twitter posts from several prominent people outside the administration, it was attributed to former Secretary of State Hillary Clinton. What can I say? The software is not perfect.

Going outside the Current Administration

Two articles published under former president Bill Clinton’s name have no clear winner. Similarly, two articles under former president Barack Obama’s name are unclassified. An article under Bobby Jindal’s name correctly identifies him as the top scorer, but not by enough to consider the classification definitive. An article published by Hillary Clinton has the top score going to her, but it is too close to the pack to be considered definitive. An article published by Senate Majority Leader Mitch McConnell is attributed to him. Three articles published under Rand Paul’s name are attributed to him, although two only weakly so.

An article published under Senator Bernie Sanders’s name is very strongly attributed to him. When tested using only members of the current administration, Secretary Azar is a clear winner, although not in the off‐the‐charts way that Sanders is. This might, however, indicate some modest similarity in their styles. Perhaps more surprising, an article published by Joe Biden is attributed to Secretary Azar even when tweets from Biden are in the training set (again, go figure). Another is weakly attributed to Mr. Biden. A third has no clear winner.

Some Surprises

Mitt Romney

An op-ed piece under (now) Senator Mitt Romney’s name from June 24, 2018, and another from January 2, 2019, were tested. Neither had a winner in terms of attribution; all scores were hovering near the average. Either his tweets are in some stylistic ways very different from his articles, or editing by others makes substantial changes. Yet in a Washington Post piece under his name from January 8, 2017, we do get a clear classification. The subject matter was an endorsement for confirmation of Betsy DeVos to be the secretary of education:

The classifier did not, however, attribute this to Mr. Romney. It went, rather, to Secretary DeVos.

The Council of Economic Advisers

At the time of this writing, Kevin Hassett is the chairman of the Council of Economic Advisers. This group has several members. Among them are some with the title “chief economist,” and this includes Casey Mulligan (again at the time of this writing, he is on leave from the University of Chicago where he is a professor of economics, and slated to return there in the near future). We begin with Chairman Hassett. I took two articles under his name that appeared in the National Review, one from March 6, 2017, and the other from April 3, 2017:

The classifier gives the high score to the Twitter handle @WhiteHouseCEA and I had, at the time of testing, believed that to be used by Chairman Hassett.

I also used a few articles by Casey Mulligan. He has written numerous columns for various publications, and has, by his count, several hundred blog items (he has rights to the articles, and they get reposted as blogs not long after appearing in print). I show the result after lumping together three such; individual results were similar. They are from Seeking Alpha on January 4, 2011, a Wall Street Journal article from July 5, 2017, and one in The Hill from March 31, 2018. The classifier assigns authorship to Professor Mulligan here:

The fourth article under Mulligan’s name was published in The Hill on May 7, 2018. You can see that the high dot is located in a very different place:

This time the classifier associates the work with the @WhiteHouseCEA handle. When I first encountered the article, it was from Professor Mulligan’s blog, and I thought perhaps he had posted a guest blog. That was not the case. This piqued my curiosity, but now I had a question to pose that might uncomfortably be interpreted as “Did you really write that?”

In addition to motive, I also had opportunity. As it happens, Casey Mulligan makes considerable (and sophisticated) use of Mathematica in his work. I’ve seen this myself, in a very nice technical article. He is making productive use of our software and promoting it among his peers. Time permitting, he hopes to give a talk at our next Wolfram Technology Conference. He is really, really smart, and I don’t know for a certainty that he won’t eat a pesky blogger for lunch. This is not someone I care to annoy!

A colleague at work was kind enough to send a note of mutual introduction, along with a link to my recent authorship analysis blog post. Casey in turn agreed to speak to me. I began with some background for the current blog. He explained that many items related to his blogs get tweeted by himself, but some also get tweeted from the @WhiteHouseCEA account, and this was happening as far back as early 2018, before his leave for government policy work in DC. So okay, mystery solved: the Twitter handle for the Council of Economic Advisers is shared among many people, and so there is some reason it might score quite high when classifying work by any one of those people.

Anonymous Op-Ed Pieces

The “Resistance” Op-Ed

I claimed from the outset that using the data I found and the software at my disposal, I obtained no indication of who wrote this piece. The plot shows this quite clearly. The top scores are nowhere near far enough above the others to make any assessment, and they are too close to one another to distinguish. Additionally, one of them goes to President Trump himself:

When I test against the augmented training set having Senators Sanders, McConnell and others, as well as Secretary Clinton and Presidents Clinton and Obama, the picture becomes more obviously a “don’t know” scenario:

Scaling notwithstanding, these scores all cluster near the average. And now Senators Graham and Sanders are at the top.

“I Hope a Long Shutdown Smokes Out the Resistance”

On January 14, 2019, The Daily Caller published an op-ed that was similarly attributed to an anonymous senior member of the Trump administration. While it did not garner the notoriety of the “Resistance” op-ed, there was speculation as to its authorship. My own question was whether the two might have the same author, or if they might overlap should either or both have multiple authors.

The plot shows similar features to that for the “Resistance” op-ed. And again, there is no indication of who might have written it. Another mystery.

I will describe another experiment. For test examples, I selected several op-eds of known (or at least strongly suspected) authorship and also used the “Resistance” and “Shutdown” pieces. I then repeatedly selected between 8 and 12 of the Twitter accounts and used them for training a classifier. So sometimes a test piece would have its author in the training set, and sometimes not. In almost all cases where the actual author was represented in the training set, that person would correctly be identified as the author of the test piece. When the correct author was not in the training set, things got interesting. It turns out that for most such cases, the training authors with the top probabilities overlapped only modestly with those for the “Resistance” op-ed; this was also the case for the “Shutdown” piece. But the ascribed authors for those two pieces did tend to overlap heavily (with usually the same top two, in the same order). While far from proof of common authorship, this again indicates a fair degree of similarity in style.
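The repeated random selection of candidate pools can be sketched in a line or two. The helper name `randomPools` and the uniform choice of pool size are assumptions; the training and scoring that would follow each draw are not shown.

```wolfram
(* Hypothetical helper: draw `trials` random candidate pools, each holding
   between 8 and 12 distinct accounts (needs at least 12 accounts). *)
randomPools[accounts_List /; Length[accounts] >= 12, trials_Integer?Positive] :=
  Table[RandomSample[accounts, RandomInteger[{8, 12}]], trials];
```

Each pool would then be used to train a classifier, and the top-scoring authors for each test piece compared across pools.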

Parting Thoughts

This has been something of an educational experience for me. On the one hand, I had no sound reason to expect that Twitter data could be at all useful for deducing authorship of other materials. And I have seen (and shown) some problematic failures, wherein an article is strongly attributed to someone we know did not write it. I learned that common subject matter (“genre,” in the authorship attribution literature) might be playing a greater role than I would have expected. I learned that a candidate pool somewhat larger than 30 tends to tamp down on those problematic failures, at least for the subject matter under scrutiny herein. Maybe the most pleasant surprise was that the methodology can be made to work quite well. Actually, it was the second most pleasant surprise—the first being not having been eaten for lunch in that phone conversation with the (thankfully kindly disposed) economics professor.


It might be good to—at least—explain the x-axes… vertical is some matching score, but horizontal is ?

Thanks for the question! The x-axis corresponds to the candidate pool in some order.