Automated Authorship Verification: Did We Really Write Those Blogs We Said We Wrote?

Several Months Ago…

I wrote a blog post about the disputed Federalist Papers. These were the 12 essays (out of a total of 85) with authorship claimed by both Alexander Hamilton and James Madison. Ever since the landmark statistical study by Mosteller and Wallace published in 1963, the consensus opinion has been that all 12 were written by Madison (the Adair article of 1944, which also takes this position, discusses the long history of competing authorship claims for these essays). The field of work that gave rise to the methods used often goes by the name of “stylometry,” and it lies behind most methods for determining authorship from text alone (that is to say, in the absence of other information such as a physical typewritten or handwritten note). In the case of the disputed essays, the pool size, at just two, is as small as can be. Even so, these essays have been regarded as difficult for authorship attribution due to many statistical similarities in style shared by Hamilton and Madison.

Since late 2016 I have worked with a coauthor, Catalin Stoean, on a method for determining authorship from among a pool of candidate authors. When we applied our methods to the disputed essays, we were surprised to find that the results did not fully align with consensus. In particular, the last two showed clear signs of joint authorship, with perhaps the larger contributions coming from Hamilton. This result is all the more plausible because we had done validation tests that were close to perfect in terms of correctly predicting the author for various parts of those essays of known authorship. These validation tests were, as best we could tell, more extensive than all others we found in prior literature.

Clearly a candidate pool of two is, for the purposes at hand, quite small. Not as small as one, of course, but still small. While our method might not perform well if given hundreds or more candidate authors, it does seem to do well at the more modest (but still important) scale of tens of candidates. The purpose of this blog post is to continue testing our stylometry methods—this time on a larger set of candidates, using prior Wolfram Blog posts as our data source.

That Was the Method That Was

As I gave some detail in the “Disputed Federalist Papers” blog (DFPb), I’ll recap in brief. We start by lumping certain letters and other characters (numerals, punctuation, white space) into 16 groups. Each of these is then regarded as a pair of digits in base 4 (this idea was explained, with considerable humor, in Tom Lehrer’s song “New Math” from the collection “That Was the Year That Was”). The base-4 digits from a given set of training texts are converted to images using a method called the Frequency Chaos Game Representation (FCGR, a discrete pixelation variant of Joel Jeffrey’s Chaos Game Representation). The images are further processed to give much smaller lists of numbers (technical term: vectors), which are then fed into a machine learning classifier (technical term: black magic). Test images are similarly processed, and the trained classifier is then used to determine the most likely (as a function of the training data and method internals) authorship.
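To make the base-4 and FCGR steps concrete, here is a minimal sketch in Python. It is not the Wolfram Language code behind the results in this post; the function names, the ordering of the 16 classes and the assignment of digits to corners of the square are all assumptions made for illustration. Each class index becomes a pair of base-4 digits, and each digit moves a point halfway toward one corner of the unit square. Since the cell a point lands in on a 2^k by 2^k grid depends only on the last k digits, the sketch tracks those digits with integer arithmetic instead of floating-point coordinates.

```python
def class_indices_to_digits(indices):
    """Turn class indices (0..15) into a flat list of base-4 digits, two per class.

    The numbering of the classes is hypothetical; only the idea of
    "one class = one pair of base-4 digits" comes from the post.
    """
    digits = []
    for i in indices:
        digits.extend(divmod(i, 4))  # e.g. 0 -> (0, 0), 1 -> (0, 1), 15 -> (3, 3)
    return digits


def fcgr(digits, k=3):
    """Frequency Chaos Game Representation on a 2**k x 2**k grid of counts.

    Assumed corner assignment: the low bit of a digit picks the x side,
    the high bit picks the y side. The newest digit becomes the most
    significant bit of the cell index, which mirrors "move halfway
    toward the corner" in the continuous chaos game.
    """
    size = 1 << k
    grid = [[0] * size for _ in range(size)]
    row = col = 0
    for n, d in enumerate(digits, start=1):
        col = (col >> 1) | ((d & 1) << (k - 1))
        row = (row >> 1) | ((d >> 1) << (k - 1))
        if n >= k:  # the first k-1 points still depend on the starting point
            grid[row][col] += 1
    return grid


if __name__ == "__main__":
    digits = class_indices_to_digits([0, 1, 15, 7, 7, 2])
    for line in fcgr(digits, k=2):
        print(line)
```

The grid of counts plays the role of the image; a longer text simply piles more counts into the same fixed-size grid, which is why texts of different lengths can be compared.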

Shortly after we posted the DFPb, I gave a talk about our work at the 2018 Statistical Language and Speech Processing (SLSP) conference (our paper appeared in the proceedings thereof). The session chair, Emmanuel Rayner, was kind enough to post a nice description of this approach. It was both complimentary to our work, and complementary to my prior DFPb discussion of the method (how often does one get to use such long homonyms in the same sentence?), with the added benefit that it is a clearer description than I have given. With his permission, I include a link to this write‐up.

So how well does this work? Short answer: quite well. It is among the highest-scoring methods in the literature on all of the standard benchmark tests that we tried (we used five, not counting the Federalist Papers, and that is more than one sees in most of the literature). There are three papers from the past two years that show results that surpass this one on some of these tests. These papers all use ensemble scoring from multiple methods. Maybe someday this one will get extended or otherwise incorporated into such a hybridized approach.

Some Notes on Implementation

The Wolfram Language code behind all this is not quite ready for general consumption, but I should give some indication of what is involved.

- We convert the text to a simpler alphabet by changing capitals to lowercase and removing diacritics. The important functions are aptly named ToLowerCase and RemoveDiacritics.

- Replacement rules do the substitutions needed to bring us to a 16-character “alphabet.” For example, we lump the characters {b,d,p} into one class using the rule "p"|"d" → "b".

- More replacements take this to base-4 pairs, e.g. {"g" → {0,0},"i" → {0,1},…}.

- Apply the Frequency Chaos Game Representation algorithm to convert the base-4 strings to two-dimensional images. It amazes me that a fast implementation, using the Wolfram Language function Compile, is only around 10 lines of code. Then again, it amazes me that functional programming constructs such as FoldList can be handled by Compile. Possibly I am all too easily amazed.

- Split the blog posts into training and test sets. Split individual posts further into multiple chunks (this is so we get sufficiently many inputs for the training).

- Process the training images into numeric vectors using the SingularValueDecomposition function (SVD, for short). This is commonly used in data science to reduce dimension. The wonderful thing is that it gives good results despite going from two dimensions to one.

- Use NetChain and NetTrain to construct and train a neural network to associate the numeric vectors with the respective blog authors.

- Use information from the SVD step above (the sixth item in this list) to convert test images.

- Run the trained neural net to assess authorship of the test texts. This gives probability scores. We aggregate scores from all chunks associated with a particular blog, and the highest score determines the final guess at the authorship of that blog.
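The first two steps in the list above (simplifying the text and lumping characters into classes) can be sketched in Python roughly as follows. This is an illustration, not the Wolfram Language code used for the results: only the {b,d,p} rule comes from this post, while the treatment of numerals, white space and punctuation shown here is an assumption and does not reproduce the actual 16-class scheme.

```python
import string
import unicodedata


def remove_diacritics(text):
    """Strip accents by decomposing characters and dropping combining marks."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


# From the post: p and d are lumped together with b.
LUMP = {"p": "b", "d": "b"}


def simplify(text):
    """Lowercase, remove diacritics, then apply the lumping rules.

    The digit, white space and punctuation classes below are hypothetical
    stand-ins for the remaining replacement rules.
    """
    out = []
    for ch in remove_diacritics(text.lower()):
        if ch in LUMP:
            out.append(LUMP[ch])
        elif ch.isdigit():
            out.append("0")   # assumed: all numerals in one class
        elif ch.isspace():
            out.append(" ")   # assumed: all white space in one class
        elif ch in string.punctuation:
            out.append(".")   # assumed: all punctuation in one class
        else:
            out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    print(simplify("Déjà vu, 42 Pads!"))
```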

Preparing the Training Data

We turn now to the Wolfram blog posts. These have been appearing since around 2007, and many employees past and present have contributed to this collection. The blog posts consist of text, Wolfram Language code (input and output), and graphics and other images. For purposes of applying the code I developed, it is important to have only text; while the area of code authorship is interesting in its own right, and is a growing field, it is quite likely outside the capabilities of the method I use and definitely outside the scope of this blog post. My colleague Chapin Langenheim put his blogspertise (something I lack) to work, and was able to provide me with text‐only data, that is, blog posts stripped of code and pictures (thanks, Chapin!).

As the methodology requires some minimal amount of training data, I opted to use only authors who had contributed at least four blog posts. Also, one does not want a large imbalance of data that might bias the classifier in training, so I restricted the data to a maximum of 12 blog posts per author. As it happens, some of the posts are not in any way indicative of the author’s style. These include interviews, announcements of Wolfram events (conferences, summer school programs), lists of Mathematica‐related new books, etc. So I removed these from consideration.

With unusable blog posts removed there are 10 authors with 8 or more published posts, 19 authors with 6 or more and 41 authors with at least 4. I will show results for all 3 groups.

It might be instructive to see images created from blog posts written by the group of most prolific authors. Here we have paired initial and final images from the training data used for each of those 10 authors.

The human eye will be unlikely to make out differences that distinguish between the authors. The software does a reasonable job, though, as we will soon see.

Results of the Blog Analysis

I opted to use around three-quarters of the blog posts from each author for training, and the remaining for testing. More specifically, I took the largest fraction that did not exceed three-quarters for training—so if an author wrote 10 posts, for example, then seven were used for training and three for testing. The selections were done at random, so there should be no overall effect of chronology, e.g. from using exclusively the oldest for training.
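The split rule is easy to state in code. Here is a minimal Python sketch (the function name and the `seed` parameter are my own choices for illustration, not part of the original code): the training count is the largest whole number of posts that does not exceed three-quarters of the total, and the posts are shuffled first so that chronology plays no role.

```python
import random


def split_posts(posts, seed=None):
    """Randomly split one author's posts into training and test lists.

    The training set gets floor(3n/4) posts, the rest go to testing.
    """
    n_train = (3 * len(posts)) // 4
    shuffled = list(posts)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    train, test = split_posts([f"post{i}" for i in range(10)], seed=1)
    print(len(train), len(test))  # 10 posts give 7 for training and 3 for testing
```

Under this rule an author with 8 posts contributes 2 test posts, and an author with 9 to 12 posts contributes 3.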

In order to give a larger set of training data inputs, each blog was then chopped into three parts of equal length (chunks). The same was done for the test set posts. This latter is not strictly necessary, but it seems to be helpful for assessing authorship of all but very short text. The reason is that parts of a text might fool a given classifier, but this is less likely when probability scores from separate parts are then summed on a per-text basis.
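A small Python sketch may help show why summing helps. The chunking rule and the score dictionaries below are assumptions for illustration (the numbers are invented, not classifier output): one chunk is "fooled" into favoring the wrong author, but the per-text totals still rank the right author first.

```python
def chunk(text, parts=3):
    """Split text into `parts` pieces of (nearly) equal length.

    Any leftover characters go into the final piece.
    """
    size = len(text) // parts
    pieces = [text[i * size:(i + 1) * size] for i in range(parts - 1)]
    pieces.append(text[(parts - 1) * size:])
    return pieces


def rank_authors(chunk_scores):
    """Sum per-chunk probability scores and rank authors, best first."""
    totals = {}
    for scores in chunk_scores:
        for author, p in scores.items():
            totals[author] = totals.get(author, 0.0) + p
    return sorted(totals, key=totals.get, reverse=True)


if __name__ == "__main__":
    # Invented scores for the three chunks of one text; the middle chunk favors B.
    scores = [
        {"A": 0.5, "B": 0.3, "C": 0.2},
        {"A": 0.3, "B": 0.4, "C": 0.3},
        {"A": 0.6, "B": 0.2, "C": 0.2},
    ]
    print(rank_authors(scores))  # A comes first despite the fooled middle chunk
```

The second entry in the ranked list is what the later sections call the second "guess."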

Authors with Eight or More Entries

There are, as noted earlier, 10 authors with at least eight blog posts (I am in that set, at the bare minimum, not counting this one). Processing for the method in use on this set takes a little over a minute on my desktop machine. After running the classifier, results are that 85.7% of blog chunks are correctly recognized. This is, in my opinion, not bad, though also not great. Aggregating chunk scores for each blog gives a much better result: there are 28 test blog posts (two or three per author), and all 28 are correctly classified as to author.

Authors with Six and Up

Now our set of candidates is larger and our average amount of training data has dropped. The correctness results do drop, although they remain respectable. Around 82.6% of chunks are correctly recognized. For the per‐blog aggregated scores, we correctly recognize 41 of 46, with three more being the second “guess” (that is, the author with the second-highest assessed probability).
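Counting "first guess" and "second guess" hits amounts to a top-k accuracy. A minimal Python sketch follows (the function name and the toy data are illustrative assumptions): each text has a ranked list of candidate authors, and a hit is scored when the true author appears among the first k.

```python
def top_k_accuracy(rankings, true_authors, k=1):
    """Fraction of texts whose true author is among the top k ranked guesses."""
    hits = sum(
        1 for ranked, truth in zip(rankings, true_authors) if truth in ranked[:k]
    )
    return hits / len(true_authors)


if __name__ == "__main__":
    # Toy example: three texts, two candidate authors.
    rankings = [["A", "B"], ["B", "A"], ["A", "B"]]
    truths = ["A", "A", "B"]
    print(top_k_accuracy(rankings, truths, k=1))
    print(top_k_accuracy(rankings, truths, k=2))
```

With the counts reported above, 41 of 46 is about 89.1% for the first guess, and counting the three second guesses as well gives 44 of 46, or about 95.7%.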

Four and Up

Here we start to fare poorly. This is due less to the larger candidate pool (now 41) than to the fact that, for many authors, the amount of training data is just not sufficient. We now get around 60% correct recognition at the chunk level, with 64% of the blog posts (50 out of 78) correctly classified according to summed chunk scores, and another 17% going to the second guess.

So How Do I Look? And Who Wrote Me?

Here we step into what I will call “blog introspection.” I took the initial draft of this blog post, up to but not including this subsection (since this and later parts were not yet written). After running through the same processing (but not breaking into chunks), we obtain an image.

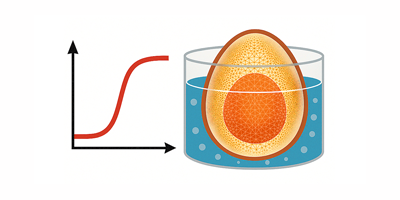

When tested with the first classifier (the one trained on the 10 most prolific authors), the scores for the respective authors are as follows:

[Image: classifier scores for each of the 10 candidate authors]

This is fairly typical of the method: several scores are approximately equal at some baseline, with a small number (one, in this case) being somewhat larger. That outlier is the winner. As it happens, that outlier was me, so this blog authorship was correctly attributed.

Final Thoughts

The experiments indicate how a particular method of stylometry handles a data source that has a moderate number of authors (which tends to make the problem easier) but only modest amounts of training data per author (which makes the task more difficult). We have seen an expected degradation in performance as the task becomes harder. Results were certainly not bad, even for the most difficult of the experiment variants.

The method described here and, in more detail, in the DFPb (and in published literature), is only one among a sea of methods in the literature. While it has outperformed nearly all others on several benchmarks, it is by no means the last word in authorship attribution via stylometry. A possible future direction might involve hybridizing with one or more different methods (wherein some sort of “ensemble” vote determines the classification).

It should be noted that the training and test data are in a certain sense homogeneous: all come from blog posts that are relevant to Wolfram Research. Another open area is whether, or to what extent, training from one area (where there may be an abundance of data for a given set of authors) might apply to test data from different genres. This is of some interest in the world of digital forensics, and might be a topic for another day.

Nice post Daniel! Can you share any results on the accuracies of this method?

Thanks Seth. I’m not sure what you mean by “accuracies” since there are at least a couple of possible interpretations. (1) Did the code guess a chunk of text correctly? (2) What probability did the highest score receive, and what were other probabilities? The percentages I showed in the post cover (1). For (2), a typical case for the “Eight or More” scenario was along the lines I showed for my own draft: the top score is around 25-30%, and the others all flat-line near the average of what remains, that is, 100% minus the top score, divided among the other authors. When there are more possible outcomes, the top score tends to drop; that’s one reason this does not extend into the many thousands of authors (it has done well on a benchmark with 1000 authors, but that’s as far as I was able to take it). I hope to give a bit more detail on this in a later blog, by the way.