Revisiting the Disputed Federalist Papers: Historical Forensics with the Chaos Game Representation and AI

Between October 1787 and April 1788, a series of essays was published under the pseudonym of “Publius.” Altogether, 77 appeared in four New York City periodicals, and a collection containing these and eight more appeared in book form as The Federalist soon after. As of the twentieth century, these are known collectively as The Federalist Papers. The aim of these essays, in brief, was to explain the proposed Constitution and influence the citizens of the day in favor of ratification thereof. The authors were Alexander Hamilton, James Madison and John Jay.

On July 11, 1804, Alexander Hamilton was mortally wounded by Aaron Burr, in a duel beneath the New Jersey Palisades in Weehawken (a town better known in modern times for its tunnels to Manhattan and Alameda). Hamilton died the next day. Soon after, a list he had drafted became public, claiming authorship of more than sixty essays. James Madison publicized his claims to authorship only after his term as president had come to an end, many years after Hamilton’s death. Their lists overlapped, in that essays 49–58 and 62–63 were claimed by both men. Three essays were claimed by each to have been collaborative works, and essays 2–5 and 64 were written by Jay (intervening illness being the cause of the gap). Herein we refer to the 12 claimed by both men as “the disputed essays.”

Debate over this authorship, among historians and others, ensued for well over a century. In 1944 Douglass Adair published “The Authorship of the Disputed Federalist Papers,” wherein he proposed that Madison had been the author of all 12. It was not until 1963, however, that a statistical analysis was performed. In “Inference in an Authorship Problem,” Frederick Mosteller and David Wallace concurred that Madison had indeed been the author of all of them. An excellent account of their work, written much later, is Mosteller’s “Who Wrote the Disputed Federalist Papers, Hamilton or Madison?” His work on this had its beginnings also in the 1940s, but it was not until the era of “modern” computers that the statistical computations needed could realistically be carried out.

Since that time, numerous analyses have appeared, and most tend to corroborate this finding. Indeed, the problem has become something of a standard for testing authorship attribution methodologies. I recently had occasion to delve into it myself. Using technology developed in the Wolfram Language, I will show results for the disputed essays that are mostly in agreement with this consensus opinion. Not entirely so, however: there is always room for surprise. Brief background: in early 2017 I convinced Catalin Stoean, a coauthor from a different project, to work with me in developing an authorship attribution method based on the Frequency Chaos Game Representation (FCGR) and machine learning. Our paper “Text Documents Encoding through Images for Authorship Attribution” was recently published, and will be presented at SLSP 2018. The method outlined in this blog comes from this recent work.

Stylometry

The idea that rigorous, statistical analysis of text might be brought to bear on determination of authorship goes back at least to Thomas Mendenhall’s “The Characteristic Curves of Composition” in 1887 (earlier work along these lines had been done, but it tended to be less formal in nature). The methods originally used mostly involved comparisons of various statistics, such as frequencies for sentence or word length (the latter in both character and syllable counts), frequency of usage of certain words and the like. Such measures can be used because different authors tend to show distinct characteristics when assessed over many such statistics. The difficulty encountered with the disputed essays was that, by measures then in use, the authors were in agreement to a remarkable extent. More refined measures were needed.

Modern approaches to authorship attribution are collectively known as “stylometry.” Most approaches fall into one or more of the following categories: lexical characteristics (e.g. word frequencies, character attributes such as n-gram frequencies, usage of white space), syntax (e.g. structure of sentences, usage of punctuation) and semantic features (e.g. use of certain uncommon words, relative frequencies of members of synonym families).

Among advantages enjoyed by modern approaches, there is the ready availability on the internet of large corpora, and the increasing availability (and improvement) of powerful machine learning capabilities. In terms of corpora, one can find all manner of texts, newspaper and magazine articles, technical articles and more. As for machine learning, recent breakthroughs in image recognition, speech translation, virtual assistant technology and the like all showcase some of the capabilities in this realm. The past two decades have seen an explosion in the use of machine learning (dating to before that term came into vogue) in the area of authorship attribution.

A typical workflow will involve reading in a corpus, programmatically preprocessing to group by words or sentences, then gathering various statistics. These are converted into a format, such as numeric vectors, that can be used to train a machine learning classifier. One then takes text of known or unknown authorship (for purposes of validation or testing, respectively) and performs similar preprocessing. The resulting vectors are classified by the result of the training step.
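As a rough illustration of the "gather statistics, then vectorize" step, here is a minimal Python sketch (Python is used here only for illustration; it is not the Wolfram Language code behind this work). The function name `feature_vector`, the word-length cap and the short `FUNCTION_WORDS` list are all hypothetical choices, not taken from any particular study.

```python
import re
from collections import Counter

# Hypothetical list of words to track; a real study would choose its own.
FUNCTION_WORDS = ["the", "of", "to", "and", "upon", "while", "whilst"]

def feature_vector(text, max_word_len=10):
    """Turn a text into a numeric vector of simple stylometric statistics."""
    words = re.findall(r"[a-z]+", text.lower())
    total = len(words) or 1  # avoid division by zero on empty input
    # Relative frequency of word lengths 1..max_word_len
    # (longer words are counted in the last bin).
    length_counts = Counter(min(len(w), max_word_len) for w in words)
    length_freqs = [length_counts[n] / total for n in range(1, max_word_len + 1)]
    # Relative frequency of each tracked word.
    word_counts = Counter(words)
    word_freqs = [word_counts[w] / total for w in FUNCTION_WORDS]
    return length_freqs + word_freqs
```

Vectors of this kind, one per document or chunk, are what a classifier would be trained on in the workflow just described.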

We will return to this after a brief foray to describe a method for visualizing DNA sequences.

The Chaos Game Representation

Nearly thirty years ago, H. J. Jeffrey introduced a method of visualizing long DNA sequences in “Chaos Game Representation of Gene Structure.” In brief, one labels the four corners of a square with the four DNA nucleotide bases. Given a sequence of nucleotides, one starts at the center of this square and places a dot halfway from the current spot to the corner labeled with the next nucleotide in the sequence. One continues placing dots in this manner until the end of a sequence of nucleotides is reached. This in effect makes nucleotide strings into instruction sets, akin to punched cards in mechanized looms.
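The dot-placing rule can be sketched in a few lines of Python (an illustration only, not code from Jeffrey's paper). Which base goes on which corner is an assumption here; any fixed labeling of the four corners would serve.

```python
# Assumed corner labeling of the unit square; the choice is arbitrary.
CORNERS = {"A": (0.0, 0.0), "C": (0.0, 1.0), "G": (1.0, 1.0), "T": (1.0, 0.0)}

def cgr_points(sequence):
    """Return the chaos game dots for a string of nucleotide letters."""
    x, y = 0.5, 0.5  # start at the center of the square
    points = []
    for base in sequence:
        cx, cy = CORNERS[base]
        # Move halfway from the current spot toward the corner for this base.
        x, y = (x + cx) / 2, (y + cy) / 2
        points.append((x, y))
    return points
```

For example, `cgr_points("AG")` first moves halfway toward the A corner and then halfway toward the G corner.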

One common computational approach is slightly different. It is convenient to select a level of pixelation, such that the final result is a rasterized image. The actual details go by the name of the Frequency Chaos Game Representation, or FCGR for short. In brief, a square image space is divided into discrete boxes. The gray level in the resulting image of each such pixelized box is based on how many points from the chaos game representation (CGR) land in it.
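A minimal Python sketch of the box-counting idea follows. It assumes the square is divided into a 2^k by 2^k grid, where `k` is a hypothetical name for the pixelation level, and it takes its input as digits 0 through 3 with an arbitrary assignment of digits to corners. It returns raw counts; turning counts into gray levels (for instance by dividing by the largest count) is left out.

```python
def fcgr(digits, k):
    """Count chaos game points per box of a 2^k x 2^k grid.

    digits: iterable of integers in 0..3, one per symbol.
    Returns a list of rows, each a list of counts.
    """
    # Assumed digit-to-corner assignment on the unit square.
    corners = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
    size = 2 ** k
    grid = [[0] * size for _ in range(size)]
    x, y = 0.5, 0.5
    for d in digits:
        cx, cy = corners[d]
        x, y = (x + cx) / 2, (y + cy) / 2
        # Floating-point rounding can push a coordinate to exactly 1.0
        # after a long run toward one corner, so clamp to the last box.
        col = min(int(x * size), size - 1)
        row = min(int(y * size), size - 1)
        grid[row][col] += 1
    return grid
```

The counts in the grid always sum to the number of symbols processed, since every point lands in exactly one box.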

Following are images thus created from nucleotide sequences of six different species (cribbed from the author’s “Linking Fourier and PCA Methods for Image Look‐Up”). This has also appeared on Wolfram Community.

It turns out that such images do not tend to vary much from others created from the same nucleotide sequence. For example, the previous images were created from the initial subsequences of length 150,000 from their respective chromosomes. Corresponding images from the final subsequences of corresponding chromosomes are shown here:

As is noted in the referenced article, dimension-reduction methods can now be used on such images, for the purpose of creating a “nearest image” lookup capability. This can be useful, say, for quick identification of the approximate biological family a given nucleotide sequence belongs to. More refined methods can then be brought to bear to obtain a full classification. (It is not known whether image lookup based on FCGR images is alone sufficient for full identification—to the best of my knowledge, it has not been attempted on large sets containing closer neighbor species than the six shown in this section). It perhaps should go without saying (but I’ll note anyway) that even without any processing, the Wolfram Language function Nearest will readily determine which images from the second set correspond to similar images from the first.

FCGR on Text

A key aspect of CGR is that it uses an alphabet of length four. This is responsible for a certain fractal effect, in that blocks from each quadrant tend to be approximately repeated in nested subblocks in corresponding nested subquadrants. To keep an alphabet of length four when working with text, it was convenient to use a larger alphabet whose size is a power of four, and to write each of its members as multiple base 4 digits. Some experiments indicated that an alphabet of length 16 would work well. Since there are 26 characters in the English version of the Latin alphabet, as well as punctuation, numeric characters, white space and more, some amount of merging was done, with the general idea that “similar” characters could go into the same overall class. For example, we have one class comprised of {c,k,q,x,z}, another of {b,d,p} and so on. This brought the modified alphabet to 16 characters. Written in base 4, the 16 possibilities give all possible pairs of digits in base 4. The string of base 4 digits thus produced is then used to produce an image from text.
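The text-to-digits step might look something like the following Python sketch. Only the two classes {c,k,q,x,z} and {b,d,p} come from the description above; every other grouping in `CLASSES`, the ordering of the classes, and the catch-all class for white space and punctuation are made up here purely to reach 16 classes.

```python
# First two classes are from the article; the rest are hypothetical fillers.
CLASSES = [
    "ckqxz", "bdp",
    "a", "e", "i", "o", "uy",
    "fv", "gj", "hw", "lr", "mn", "s", "t",
    "0123456789",
]
OTHER = 15  # hypothetical catch-all: white space, punctuation, anything else

CHAR_TO_CLASS = {ch: idx for idx, group in enumerate(CLASSES) for ch in group}

def text_to_base4(text):
    """Map each character to its class (0..15), written as two base 4 digits."""
    digits = []
    for ch in text.lower():
        idx = CHAR_TO_CLASS.get(ch, OTHER)
        digits.extend(divmod(idx, 4))  # high digit, then low digit
    return digits
```

Each character contributes exactly two digits, so a chunk of a few thousand characters yields twice that many chaos game steps.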

For relatively short texts, up to a few thousand characters, say, we simply create one image. Longer texts we break into chunks of some specified size (typically in the range of 2,000–10,000 characters) and make an image for each such chunk. Using ExampleData["Text"] from the Wolfram Language, we show the result for the first and last chunks from Alice in Wonderland and Pride and Prejudice, respectively:
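Chunking of this sort is simple to sketch in Python. The default size below is just one value from the stated range, and keeping a short final remainder as its own chunk is an assumption; the description above does not say how the tail is handled.

```python
def chunk_text(text, chunk_size=5000):
    """Split text into consecutive chunks of at most chunk_size characters.

    A text no longer than chunk_size comes back as a single chunk.
    Assumption: a short leftover at the end is kept as its own chunk.
    """
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
```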

While there is not so much for the human eye to discern between these pairs, machine learning does quite well in this area.

Authorship Attribution Using FCGR

The paper with Stoean provides details for a methodology that has proven to be best from among variations we have tried. We use it to create one-dimensional vectors from the two-dimensional image arrays; use a common dimension reduction via the singular-value decomposition to make the sizes manageable; and feed the training data, thus vectorized, into a simple neural network. The result is a classifier that can then be applied to images from text of unknown authorship.
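To give a feel for the flatten-and-reduce steps, here is a small dependency-free Python sketch. It is not the code from the paper: instead of calling a library singular-value decomposition, it approximates the leading right singular vectors by power iteration with deflation, which is adequate for a toy example. All function names are hypothetical, and the neural network stage is not shown.

```python
import math

def flatten(image):
    """Turn a two-dimensional array (list of rows) into one flat vector."""
    return [float(value) for row in image for value in row]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def leading_right_singular_vectors(rows, r, iterations=200):
    """Approximate the top r right singular vectors of the matrix `rows`.

    Uses power iteration on X^T X, projecting out vectors already found.
    Returns fewer than r vectors if the matrix has lower rank.
    """
    dim = len(rows[0])
    basis = []
    for j in range(r):
        v = [1.0 / (i + j + 1) for i in range(dim)]  # fixed starting vector
        degenerate = False
        for _ in range(iterations):
            xv = [dot(row, v) for row in rows]                      # X v
            w = [sum(row[i] * s for row, s in zip(rows, xv))        # X^T (X v)
                 for i in range(dim)]
            for u in basis:                                         # deflation
                c = dot(w, u)
                w = [a - c * b for a, b in zip(w, u)]
            norm = math.sqrt(dot(w, w))
            if norm < 1e-12:
                degenerate = True
                break
            v = [a / norm for a in w]
        if degenerate:
            break
        basis.append(v)
    return basis

def reduce_dimension(rows, basis):
    """Project each flattened image onto the retained singular vectors."""
    return [[dot(row, u) for u in basis] for row in rows]
```

In use, one would flatten every training image, compute the basis once from the training rows, and apply the same `reduce_dimension` projection to images from text of unknown authorship before classifying them.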

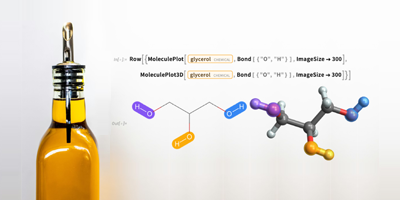

While there are several moving parts, so to speak, the breadth of the Wolfram Language makes this actually fairly straightforward. The main tools are indicated as follows:

1. Import to read in data.

2. StringDrop, StringReplace and similar string manipulation functions, used for removing initial sections (as they often contain identifying information) and to do other basic preprocessing.

3. Simple replacement rules to go from text to base 4 strings.

4. Simple code to implement FCGR, such as can be found in the Community forum.

5. Dimension reduction using SingularValueDecomposition. Code for this is straightforward, and one version can be found in “Linking Fourier and PCA Methods for Image Look‐Up.”

6. Machine learning functionality, at a fairly basic level (which is the limit of what I can handle). The functions I use are NetChain and NetTrain, and both work with a simple neural net.

7. Basic statistics functions such as Total, Sort and Tally are useful for assessing results.

Common practice in this area is to show results of a methodology on one or more sets of standard benchmarks. We used three such sets in the referenced paper. Two come from Reuters articles in the realm of corporate/industrial news. One is known as Reuters_50_50 (also called CCAT50). It has fifty authors represented, each with 50 articles for training and 50 for testing. Another is a subset of this, comprised of 50 training and 50 testing articles from ten of the fifty authors. One might think that using both sets entails a certain level of redundancy, but, perhaps surprisingly, past methods that perform very well on either of these tend not to do quite so well on the other. We also used a more recent set of articles, this time in Portuguese, from Brazilian newspapers. The only change to the methodology that this necessitated involved character substitutions to handle e.g. the “c‐with‐cedilla” character ç.

Results of this approach were quite strong. As best we could find in prior literature, scores equaled or exceeded past top scores on all three datasets. Since that time, we have applied the method to two other commonly used examples. One is a corpus comprised of IMDb reviews from 62 prolific reviewers. This time we were not the top performer, but came in close behind two other methods. Each was actually a “hybrid” comprised of weighted scores from some submethods. (Anecdotally, our method seems to make different mistakes from others, at least in examples we have investigated closely. This makes it a sound candidate for adoption in hybridized approaches.) As for the other new test, well, that takes us to the next section.

The Federalist Papers

We now return to The Federalist Papers. The first step, of course, is to convert the text to images. We show a few here, created from first and last chunks from two essays. The ones on the top are from Federalist No. 33 (Hamilton) while those on the bottom are from Federalist No. 44 (Madison). Not surprisingly, they are not different in the obvious ways that the genome‐based images were different:

Before attempting to classify the disputed essays, it is important to ascertain that the methodology is sound. This requires a validation step. We proceeded as follows: We begin with those essays known to have been written by either Hamilton or Madison (we discard the three they coauthored, because there is not sufficient data therein to use). We hold back three entire essays from those written by Madison, and eight from the set by Hamilton (this is in approximate proportion to the relative number each penned). These withheld essays will be our first validation set. We also withhold the final chunk from each of the 54 essays that remain, to be used as a second validation set. (This two‐pronged validation appears to be more than is used elsewhere in the literature. We like to think we have been diligent.)
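The two-pronged split can be sketched in Python as follows. The data layout (a dictionary from essay number to an author and a list of chunks) and the function name are hypothetical, and which specific essays were withheld is not stated above, so the caller supplies that set.

```python
def split_for_validation(essays, held_out_ids):
    """Build a training set and two validation sets of (author, chunk) pairs.

    essays: dict mapping essay id -> (author, non-empty list of chunks).
    held_out_ids: ids of essays withheld in their entirety.
    """
    train = []
    validation_essays = []        # first set: all chunks of withheld essays
    validation_final_chunks = []  # second set: last chunk of every other essay
    for essay_id, (author, chunks) in essays.items():
        if essay_id in held_out_ids:
            validation_essays.extend((author, c) for c in chunks)
        else:
            train.extend((author, c) for c in chunks[:-1])
            validation_final_chunks.append((author, chunks[-1]))
    return train, validation_essays, validation_final_chunks
```

The first validation set checks behavior on essays the classifier has never seen any part of, while the second checks unseen passages from essays it has partly seen.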

The results for the first validation set are perfect. Every one of the 70 chunks from the withheld essays is ascribed to its correct author. For the second set, two were erroneously ascribed. The scores for most chunks have the winner around four to seven times higher than the loser. For the two that were mistaken, these ratios dropped considerably, in one case to a factor of three and in the other to around 1.5. Overall, even with the two misses, these are extremely good results as compared to methods reported in past literature. I will remark that all processing, from importing the essays through classifying all chunks, takes less than half a minute on my desktop machine (with the bulk of that occupied in multiple training runs of the neural network classifier).
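The winner-to-loser ratio and the validation tally can be made concrete with a short Python sketch. The function names and the score format (a dictionary from author to a nonnegative score per chunk) are assumptions, and any numbers passed in when trying it out are invented for illustration rather than taken from the essays.

```python
def classify_chunk(scores):
    """Return (winner, ratio of the winning score to the runner-up score).

    scores: dict mapping author name -> nonnegative score, two or more authors.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (winner, top), (_, second) = ranked[0], ranked[1]
    ratio = top / second if second > 0 else float("inf")
    return winner, ratio

def validation_accuracy(labeled_chunks):
    """Fraction of chunks whose top-scoring author matches the known author.

    labeled_chunks: list of (true_author, scores) pairs.
    """
    correct = sum(
        1 for truth, scores in labeled_chunks
        if classify_chunk(scores)[0] == truth
    )
    return correct / len(labeled_chunks)
```

A ratio near 1 flags a chunk that is close to a coin toss, which is the situation described for the two misattributed chunks.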

In order to avail ourselves of the full corpus of training data, we next merge the validation chunks into the training set and retrain. When we run the classifier on chunks from the disputed essays, things are mostly in accordance with prior conclusions. Except…

The first ten essays go strongly to Madison. Indeed, every chunk therein is ascribed to him. The last two go to Hamilton, albeit far less convincingly. A typical aggregated score for one of the convincing outcomes might be approximately 35:5 favoring Madison, whereas for the last two that go to Hamilton the scores are 34:16 and 42:27, respectively. A look at the chunk level suggests a perhaps more interesting interpretation. Essay 62, the next‐to‐last, has the five-chunk score pairs shown here (first is Hamilton’s score, then Madison’s):

Three are fairly strongly in favor of Hamilton as author (one of which could be classified as overwhelmingly so). The second and fourth are quite close, suggesting that despite the ability to do solid validation, these might be too close to call (or might be written by one and edited by the other).

The results from the final disputed essay are even more stark:

The first four chunks go strongly to Hamilton. The next two go strongly to Madison. The last also favors Madison, albeit weakly. This would suggest again a collaborative effort, with Hamilton writing the first part, Madison roughly a third and perhaps both working on the final paragraphs.

The reader will be reminded that this result comes from but one method. In its favor is that it performs extremely well on established benchmarks, and also in the validation step for the corpus at hand. On the counter side, many other approaches, over a span of decades, all point to a different outcome. That stated, we can mention that most (or perhaps all) prior work has not been at the level of chunks, and that granularity can give a better outcome in cases where different authors work on different sections. While these discrepancies with established consensus are of course not definitive, they might serve to prod new work on this very old topic. At the least, other methods might be deployed at the granularity of the chunk level we used (or similar, perhaps based on paragraphs), to see if parts of essays 62 and 63 then show indications of Hamilton authorship.

Dedication

To two daughters of Weehawken. My wonderful mother‐in‐law, Marie Wynne, was a library clerk during her working years. My cousin Sharon Perlman (1953–2016) was a physician and advocate for children, highly regarded by peers and patients in her field of pediatric nephrology. Her memory is a blessing.
