How to Win at Rock-Paper-Scissors

Rock-paper-scissors* isn’t obviously interesting to look at mathematically. The Nash-equilibrium strategy is very simple: choose equally and randomly from the three choices, and (in the long run) your opponent will not beat you (nor will you beat your opponent). Nevertheless, it’s still possible for a computer strategy to beat a human player over a long run of games.

My nine-year-old daughter showed me one solution with a Scratch program that she wrote that won every time by looking at your choice before making its decision! But I will walk you through a simple solution that wins without cheating.

I thought about writing the algorithm as a topic within our Computer-Based Math™ statistics course. But the first paper I looked up on predictive rock-paper-scissors algorithms solved the problem with some complex copula distribution. It was too complex to explain to school kids (and possibly for me), so I decided to create a simpler solution that I could explain. And, even though it’s almost certainly been done before, it’s more fun to re-invent things than to look them up!

First we need to be able to play the game. There was already a Demonstration available, but it wasn’t quite what I needed, so I wrote my own. It doesn’t need much explanation:

Random player

The code is mostly user interface, display, and game rules. The entire computer strategy is held in the function chooseGo, which simply returns RandomInteger[{1,3}], where 1 represents rock, 2 paper, and 3 scissors. This is the optimal solution: however you play, you should win about as many games as the computer, and your net win rate (wins minus losses) will jitter around zero.
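As a rough illustration of the random strategy, here is a small Python sketch (not the Wolfram Language code from the Demonstration). The names `choose_go` and `score` and the always-rock human are assumptions made for the example; only the 1/2/3 encoding and the game rules come from the post.

```python
import random

ROCK, PAPER, SCISSORS = 1, 2, 3

def choose_go(history):
    """Baseline strategy: ignore the history and pick uniformly at random."""
    return random.randint(1, 3)

def score(human, computer):
    """Return +1 if the human wins, -1 if the computer wins, 0 for a draw."""
    if human == computer:
        return 0
    # Each move is beaten by the next one in the cycle 1 -> 2 -> 3 -> 1.
    return 1 if human == computer % 3 + 1 else -1

# Hypothetical human who always plays rock against the random strategy.
random.seed(0)
history = []
for _ in range(3000):
    human, computer = ROCK, choose_go(history)
    history.append((human, computer))

# Net win rate per game: wins minus losses, divided by games played.
net = sum(score(h, c) for h, c in history) / len(history)
```

Even against this completely predictable human, `net` should stay close to zero, because the random strategy never uses the history.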

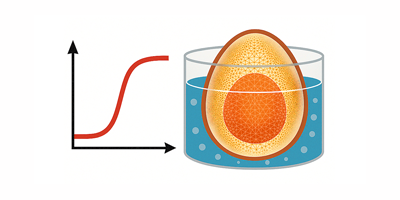

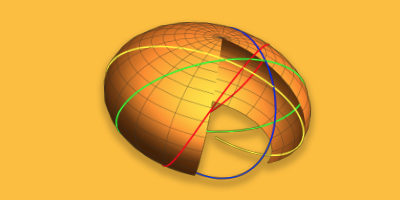

So the interesting task now is to re-write that chooseGo function to make a better prediction by using the data held in the history variable about past games. Our first step is to look at the choices made in the last few games and seek out all the times in our history where that sequence has come up before. By looking at what the human did next after each game, we may find a pattern.

The first argument of the function provides the past history of plays. For example, in the dataset below, the computer (second column) has just played Paper (2) to the human’s Rock (1). The last element represents this. We can see that this has happened twice before, and each time the human’s next move was Rock again.

The second argument is the history length to seek back. In this case, 1 looks only for cases of {1, 2} in the data; if we chose 2, it would seek cases of {3, 2} followed by {1, 2} in the data and find no matches, since this sequence has not previously occurred.

The third argument, All, states that both the computer’s and human’s move histories must match. The argument can be changed to 1 to only look at the human’s history (i.e., assuming that the human is only influenced by what they did before), or 2, to look only at column 2, which is the computer’s history (i.e., assuming that the human mostly responds to what the computer did before, regardless of what they did, and hence whether they won or lost).

For example, in this case we find that the human typically chose Rock after having previously chosen Rock, regardless of what the computer did each time.
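The history search with its three arguments could be sketched in Python roughly as follows. This is not the original Wolfram Language function: the name `next_moves`, the `"all"` keyword, and the `history` data are all assumptions. The data is a made-up six-game history, built only to agree with the description above.

```python
def next_moves(history, length, column="all"):
    """Human moves that followed earlier occurrences of the latest pattern.

    history: list of (human, computer) pairs, oldest first.
    length:  how many of the most recent games form the pattern.
    column:  "all" matches both players, 1 the human only, 2 the computer only.
    """
    def view(game):
        return game if column == "all" else game[column - 1]

    games = [view(g) for g in history]
    if length < 1 or len(games) <= length:
        return []
    pattern = games[-length:]
    found = []
    # Stop early so that every match has a following game to report.
    for start in range(len(games) - length):
        if games[start:start + length] == pattern:
            found.append(history[start + length][0])
    return found

# Hypothetical history: the last game is Rock (1) against Paper (2).
history = [(1, 2), (1, 3), (1, 2), (1, 1), (3, 2), (1, 2)]

both_1 = next_moves(history, 1, "all")  # [1, 1]
both_2 = next_moves(history, 2, "all")  # []
human_1 = next_moves(history, 1, 1)     # [1, 1, 1, 3]
```

The pattern at the very end of the history is never counted as a match, since nothing has followed it yet.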

With enough data, the All option is all that we need, and it will decide for itself whether the human or computer history is more important. For example, if the computer history was ignored by the human, then the dataset selected by any computer play history would have the same distribution as any other computer play history (given enough data). By looking at the history of all pairs of plays, this is the same as first selecting data on the (irrelevant) computer history, and then using this data subset for the function above. Likewise if only the computer history mattered. However, by looking at these two special assumptions separately, we get more valid history matches, and this matters when the datasets are initially small.

So from these two tests, we can see that the first gives a best estimate that there is a 100% chance of the human player choosing Rock next. And the second test makes it 75%, with a 25% chance of Scissors.
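These estimates are just relative frequencies of the matched next moves. A minimal Python sketch follows. The function name is hypothetical, and the second list assumes four matches (three Rock, one Scissors), which is the smallest sample that gives 75% and 25%.

```python
from collections import Counter

def move_probabilities(followers):
    """Relative frequency of each move (1, 2, 3) in a list of next moves."""
    total = len(followers)
    if total == 0:
        return {}
    counts = Counter(followers)
    return {move: counts[move] / total for move in (1, 2, 3)}

first = move_probabilities([1, 1])         # both players' history matched
second = move_probabilities([1, 1, 1, 3])  # human history only (assumed counts)
```

Here `first[1]` is 1.0, while `second[1]` is 0.75 and `second[3]` is 0.25.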

And here is where I got stuck!

In this case, the two predictions agree on outcome, if not on the probability. But when you can search three slices of the data, with a range of different history lengths, and they don’t agree, how do you combine the predictions?

I put it in my “blog items to write” folder and forgot about it until a few weeks later, when we debated how to cover the concept of “significance” for the Computer-Based Math™ course.

I realized that the question wasn’t necessarily “How do I combine the predictions?” It could be looked at as “Which prediction is most significant?” One prediction might have been more significant than another because it showed a larger bias in the data, or because it was supported by a larger dataset. I didn’t care, and I just used the p-value of the significance test (with null hypothesis that the player is playing randomly) to order my predictions.

I guess I should have listened to our own argument that the first step of math is “posing the right question!”

Now, for example, taking my last result, I find the best prediction is 1 (Rock) with a p-value of 0.17. That is, if the player really were choosing from DiscreteUniformDistribution[{1,3}], there would be only a 17% probability of the data used for this prediction deviating this far from uniform purely by chance (rather than because of human bias or some other explanation that has changed the distribution).
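The post does not show which significance test was used, so the following Python sketch assumes a Pearson chi-square goodness-of-fit test against the uniform distribution. The function name is hypothetical. With three possible moves there are two degrees of freedom, and the chi-square tail probability is then exactly exp(-x/2), so no statistics library is needed.

```python
import math

def uniform_p_value(followers):
    """Chi-square p-value for 'these moves were drawn uniformly from 1..3'."""
    n = len(followers)
    if n == 0:
        return 1.0  # no data, so no evidence against randomness
    expected = n / 3
    chi2 = sum((followers.count(m) - expected) ** 2 / expected
               for m in (1, 2, 3))
    # Two degrees of freedom: the survival function is exp(-chi2 / 2).
    return math.exp(-chi2 / 2)

# Assumed sample behind the 75% / 25% estimate: three Rock, one Scissors.
p = uniform_p_value([1, 1, 1, 3])
```

For this assumed sample the statistic is 3.5 and `p` is about 0.174, which agrees with the 0.17 quoted above. The chi-square approximation is crude for samples this small, so treat the value as a ranking score rather than an exact probability.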


The smaller this p-value, the more confident we can be that we have found a real pattern. So we just do this for every permutation of history length and data slice and choose the prediction with the smallest p-value:
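One way to sketch this search in Python is shown below. It is not the Demonstration's code: the helper names, the `max_length` cap of 3, the chi-square test, and the sample history are all assumptions.

```python
import math
from collections import Counter

def next_moves(history, length, column):
    """Human moves that followed earlier occurrences of the latest pattern."""
    games = [g if column == "all" else g[column - 1] for g in history]
    if len(games) <= length:
        return []
    pattern = games[-length:]
    return [history[i + length][0]
            for i in range(len(games) - length)
            if games[i:i + length] == pattern]

def uniform_p_value(followers):
    """Chi-square p-value against uniform play (2 degrees of freedom)."""
    expected = len(followers) / 3
    chi2 = sum((followers.count(m) - expected) ** 2 / expected
               for m in (1, 2, 3))
    return math.exp(-chi2 / 2)

def best_prediction(history, max_length=3):
    """Return (p_value, predicted_move) for the most significant slice,
    or None when no slice has any matching history."""
    best = None
    for column in ("all", 1, 2):
        for length in range(1, max_length + 1):
            followers = next_moves(history, length, column)
            if not followers:
                continue
            p = uniform_p_value(followers)
            move = Counter(followers).most_common(1)[0][0]
            if best is None or p < best[0]:
                best = (p, move)
    return best

# Hypothetical history of (human, computer) plays, oldest first.
history = [(1, 2), (1, 3), (1, 2), (1, 1), (3, 2), (1, 2)]
best = best_prediction(history)
```

For this made-up history the winning slice is the computer-only history of length 1, which predicts Rock with a p-value of about 0.05.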

And choose the next move that would beat this prediction:
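With the encoding 1 = rock, 2 = paper, 3 = scissors, the winning reply is always the next move in the cycle. A short Python sketch, with hypothetical function names and a random fallback for when there is no prediction yet:

```python
import random

def counter_move(predicted):
    """Return the move that beats the predicted one (1, 2, or 3)."""
    # Paper beats rock, scissors beats paper, rock beats scissors:
    # the winner is the next move in the cycle 1 -> 2 -> 3 -> 1.
    return predicted % 3 + 1

def choose_go(predicted=None):
    """Beat the predicted human move, or play randomly without a prediction."""
    if predicted is None:
        return random.randint(1, 3)
    return counter_move(predicted)
```

So a predicted Rock (1) is answered with Paper (2), and a predicted Scissors (3) with Rock (1).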


Here it is all put together. You can get it from the Wolfram Demonstrations site at demonstrations.wolfram.com/RockPaperScissorsWithAIPlayer.

Intelligent player

When it has too little data, it will choose randomly, so you should start fairly equal. Initially as it starts to learn, it makes some dumb choices, and you may take an early lead. But by the time you have played 30–40 games, it will have started to make useful predictions, and you should see your win rate dip into negative territory and stay there.

Of course this solution is only good against a flawed attempt to be random. Its predictability makes it fatally exposed to a targeted strategy, and it’s interesting to try to do that by intuition. It can be done, but if you stop thinking, or over-think, you lose ground quickly. Of course, a program could do that easily by applying the same algorithm in order to predict with certainty my program’s move.

Such an approach leads to an arms race of writing algorithms that beat the opponent’s current algorithm, and the only way out is to return to the Nash-equilibrium strategy of RandomInteger[{1,3}].

* In case you don’t know the game, the rules are these: you choose rock, paper, or scissors using the hand gestures shown above at the same time as your opponent. Rock beats scissors (it makes them blunt), scissors beats paper (it cuts it), paper beats rock (it wraps it–yeah, I never found that explanation satisfying!). Score a point for a win, none for a draw, and repeat until you get bored.


In reference to the last paragraph, this sounds like a perfect use case for a neural network. Don’t write one algorithm to beat one opponent, write an algorithm that adjusts to any opposing algorithm.

I tried this with 30 games, completely based on my whim. Mathematica won 16 out of 30 – not too impressive, honestly. I mean I’m sure I have some sort of tendencies that could’ve been exploited, and I did not pay much attention to my own histories.

As I said in the article “by the time you have played 30–40 games, it will have *started* to make useful predictions”. But there is almost nothing useful before this. If you look at the available data for say your 2-go history or the whole game 1-go history, there are 9 possible combinations. That means that after 27 games there are only 3 data points for each possibility. If you play at all evenly, then you will have about 1 of each possible play after those events – not enough to infer anything useful unless you have a very clear pattern. So at 30 games it is only really starting to look at single player 1-go history. Play on to 100 games and see what happens.

Nice! This reminds me of a variation of the game that I played with my kids when they were still very young. It has a twist that you really have to think about for a few seconds. We played the game with the three of us. There are only two hand gestures, palm up or down, and the goal of the game is to be the odd man out. We played this for a couple of rounds and then I announced that the next round I would play “palm down” (and, of course, that I wasn’t lying about this). Great was their initial joy thinking they would now beat their father for sure, until each of the boys realized what using this information meant, given that his brother had the same information. Think about this, and I hope you see and enjoy the amazing twist. How would you use this information?

This can be an index for the weakness of our own random number generator; mine is horrible: 60-42!!!

For me the interesting part of this is that the “financial modelers” do exactly the same with the financial market, but there, there are only two choices and only one column!!! :D

note “For example, in this case we find what the human typically chose [[[missing:scissor]]]] after having previously chosen Rock, regardless of what the computer did each time.”

So should that program have learnt from me? Because I reached a 75% win ratio on both just by clicking haphazardly.

They should do this next. Make it even more complex

Sheldon would definitely beat the Wolfram Language program (as long as touching is prohibited).