Deploy a Neural Network to Your iOS Device Using the Wolfram Language

Today’s handheld devices are powerful enough to run neural networks locally without the need for a cloud server connection, which can be a great convenience when you’re on the go. Deploying and running a custom neural network on your phone or tablet is not straightforward, though, and the process depends on the operating system of the machine. In this post, I will focus on iOS devices and walk you through all the necessary steps to train a custom image classifier neural network model using the Wolfram Language, export it through ONNX (new in Version 12.2), convert it to Core ML (Apple’s machine learning framework for iOS apps) and finally deploy it to your iPhone or iPad.

Before we get started, an important warning: Do NOT use this classifier for cooking without expert consultation. Toxic mushrooms can be deadly!

Creating Training and Testing Data

Mushroom season is still a few months away in the Northern Hemisphere, but it would be great to have a mushroom image classifier running locally on your phone in order to identify mushrooms while hiking. To build such an image classifier, we will need a good training set that includes dozens of images for each mushroom species.

As an example, I’ll start by getting the species entity for a mushroom, the fly agaric (Amanita muscaria), which even has its own emoji: 🍄.

Engage with the code in this post by downloading the Wolfram Notebook


Interpreter["Species"]["Amanita muscaria"]

We can also obtain a thumbnail image to get a better picture of what we are talking about:

Entity["Species", "Species:AmanitaMuscaria"]["Image"]

Thankfully, the iNaturalist community of citizen scientists has recorded hundreds of field observations for all kinds of mushroom species. Using the INaturalistSearch function from the Wolfram Function Repository, we can find images for each species. The INaturalistSearch function retrieves observation data via iNaturalist’s API. We first need to get the INaturalistSearch function using ResourceFunction:

ResourceFunction["INaturalistSearch"]

Next, we will specify the species. Then we will ask for observations with attached photos using the "HasImage" option and observations that have been validated by others using the "QualityGrade" option and "Research" property:

muscaria = ResourceFunction["INaturalistSearch"][
   Entity["Species", "Species:AmanitaMuscaria"], "HasImage" -> True,
   "QualityGrade" -> "Research", MaxItems -> 50,
   "ObservationGeoRange" ->
    GeoBounds[Entity["GeographicRegion", "Europe"]]];

For example, the data from the first observation is the following:

First@muscaria

We can easily import the images using the "ImageURL" property:

Import[First[muscaria]["ImageURL"]]

Nice! As a starting point, I’m interested in getting images of the most common species of both toxic and edible mushrooms that can be found in my region (Catalonia).

toxicMushrooms =
 Interpreter["Species"][{"Amanita phalloides", "Amanita muscaria",
   "Amanita pantherina", "Gyromitra gigas", "Galerina marginata",
   "Paxillus involutus"}]

edibleMushrooms =
 Interpreter["Species"][{"Boletus edulis", "Boletus aereus",
   "Suillus granulatus", "Lycoperdon perlatum",
   "Lactarius deliciosus", "Amanita caesarea", "Macrolepiota procera",
   "Russula delica", "Cantharellus aurora", "Cantharellus cibarius",
   "Chroogomphus rutilus"}]

Let’s create a few custom functions to get the imageURLs; import and rename the images; and finally, export them into a folder for later use:

imageURLs[species_] := Normal[
  ResourceFunction["INaturalistSearch"][species, "HasImage" -> True,
    "QualityGrade" -> "Research", MaxItems -> 50,
    "ObservationGeoRange" ->
     GeoBounds[Entity["GeographicRegion", "Europe"]]][All, "ImageURL"]]

imagesDirectory =
  FileNameJoin@{$HomeDirectory, "DeployNeuralNetToYouriPhone",
    "Images"};

imagesExport[species_, tag_String] :=
 MapIndexed[
  Export[imagesDirectory <> "/" <>
     StringReplace[species["ScientificName"], " " -> "-"] <> "-" <>
     tag <> "-" <> ToString[First[#2]] <> ".png", #1] &,
  Map[Import, imageURLs[species]]]

We can test the function using another toxic species, the death cap (Amanita phalloides):

imagesExport[Entity["Species", "Species:AmanitaPhalloides"], "toxic"];

We can import a few death cap images from our local folder and check that they look OK:

Table[Import[
  imagesDirectory <> "/Amanita-phalloides-toxic-" <> ToString[i] <>
   ".png"], {i, 5}]
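The file-naming scheme that imagesExport relies on can be sketched outside the Wolfram Language as well. The following Python snippet is only an illustration: the helper names `image_file_name` and `image_path` are hypothetical and not part of the post’s code. It shows how a species name, a tag and an index combine into names such as Amanita-phalloides-toxic-1.png.

```python
from pathlib import Path


def image_file_name(scientific_name: str, tag: str, index: int) -> str:
    """Build a file name such as 'Amanita-phalloides-toxic-1.png'."""
    return f"{scientific_name.replace(' ', '-')}-{tag}-{index}.png"


def image_path(directory: Path, scientific_name: str, tag: str, index: int) -> Path:
    """Join the directory and the file name with the platform's path separator."""
    return directory / image_file_name(scientific_name, tag, index)


# Same folder layout as imagesDirectory in the post.
images_directory = Path.home() / "DeployNeuralNetToYouriPhone" / "Images"

print(image_path(images_directory, "Amanita phalloides", "toxic", 1).name)
# prints: Amanita-phalloides-toxic-1.png
```

Building the path with a path-joining helper rather than plain string concatenation keeps the separator between the folder and the file name from being left out by accident.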

Now we can do the same for the other mushroom species:

Map[imagesExport[#, "edible"] &, edibleMushrooms];

Map[imagesExport[#, "toxic"] &, Rest[toxicMushrooms]];

In order to create the training and test sets, we need to specify the classLabels:

classLabels = {"Amanita-phalloides-toxic", "Amanita-muscaria-toxic",
   "Amanita-pantherina-toxic", "Gyromitra-gigas-toxic",
   "Galerina-marginata-toxic", "Paxillus-involutus-toxic",
   "Boletus-edulis-edible", "Boletus-aereus-edible",
   "Suillus-granulatus-edible", "Lycoperdon-perlatum-edible",
   "Lactarius-deliciosus-edible", "Amanita-caesarea-edible",
   "Macrolepiota-procera-edible", "Russula-delica-edible",
   "Cantharellus-aurora-edible", "Cantharellus-cibarius-edible",
   "Chroogomphus-rutilus-edible"};

Next we need to import the images and create examples as follows:

all = Flatten@
   Map[Thread[
      Table[Import[
         imagesDirectory <> "/" <> # <> "-" <> ToString[i] <>
          ".png"], {i, 50}] -> #] &, classLabels];

We have a total of 850 images/examples: 50 for each of the 17 mushroom species:

Length@all

Let’s check the first example:

First[all]

Looks good! Finally, we only need to create randomized training and testing sets:

{trainSet, testSet} = TakeDrop[RandomSample[all], 750];

Here I should note that part of trainSet will actually be used as a validation set via the NetTrain option ValidationSet.
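To make the bookkeeping concrete, here is a minimal Python sketch of the same split, assuming the 10% validation fraction used in the NetTrain call in the next section. The function `split_examples` and the placeholder examples are hypothetical, and the sketch does not claim to reproduce how NetTrain actually selects its validation examples. It only shows the resulting counts.

```python
import random


def split_examples(examples, n_train, validation_fraction, seed=0):
    """Shuffle, take n_train examples for training, keep the rest for testing,
    then hold out a fraction of the training part for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    train, test = shuffled[:n_train], shuffled[n_train:]
    n_validation = round(len(train) * validation_fraction)
    return train[n_validation:], train[:n_validation], test


# Placeholder examples: 50 images for each of 17 classes, 850 in total.
examples = [(f"image-{i}", i % 17) for i in range(17 * 50)]

fit, validation, test = split_examples(examples, n_train=750, validation_fraction=0.1)
print(len(fit), len(validation), len(test))
# prints: 675 75 100
```

Under these assumptions, 675 examples drive the weight updates, 75 are used for validation during training and 100 remain untouched for the final test.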

Training the Neural Network

Starting from a pretrained model, we can make use of net surgery functions to create our own custom mushroom image classification network. First, we need to obtain a pretrained model from the Wolfram Neural Net Repository. Here we will use Wolfram ImageIdentify Net V1:

pretrainedNet = NetModel["Wolfram ImageIdentify Net V1"]

We can check whether the size of the network is reasonably small for current smartphones. As a rule, it shouldn’t surpass 100 MB:

ByteCount@pretrainedNet

As there are one million bytes in a megabyte, our size of about 60 MB clears the limit.
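The arithmetic behind this check is simple enough to sketch in a few lines of Python. The names below are hypothetical, and the byte count is a round stand-in for “about 60 MB”, not the exact output of ByteCount.

```python
BYTES_PER_MEGABYTE = 1_000_000  # decimal megabyte, as used in the text
SIZE_LIMIT_MB = 100             # rule-of-thumb limit mentioned above


def size_in_megabytes(byte_count: int) -> float:
    """Convert a byte count to megabytes."""
    return byte_count / BYTES_PER_MEGABYTE


def fits_on_phone(byte_count: int, limit_mb: float = SIZE_LIMIT_MB) -> bool:
    """True if the model size does not surpass the limit."""
    return size_in_megabytes(byte_count) <= limit_mb


example_byte_count = 60_000_000  # hypothetical round figure, about 60 MB
print(size_in_megabytes(example_byte_count), fits_on_phone(example_byte_count))
# prints: 60.0 True
```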

We take the convolutional part of the net using NetTake:

subNet = NetTake[pretrainedNet, {"conv_1", "global_pool"}]

Then we add a new classification layer using NetJoin and attach a new NetDecoder:

joinedNet =
 NetJoin[subNet,
  NetChain@<|"linear17" -> LinearLayer[17], "prob" -> SoftmaxLayer[]|>,
  "Output" -> NetDecoder[{"Class", classLabels}]]
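The new classification head ends in a softmax layer, which turns the 17 outputs of the linear layer into class probabilities. As a rough illustration, here is a plain Python version of the softmax function. The logits are made up, and this is not how the Wolfram Language implements SoftmaxLayer internally.

```python
import math


def softmax(logits):
    """Map real-valued scores to probabilities that sum to 1."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical outputs of the 17-unit linear layer; class 3 scores highest.
logits = [0.0] * 17
logits[3] = 2.0

probs = softmax(logits)
best_class = probs.index(max(probs))
print(best_class, round(sum(probs), 6))
```

The decoder then only has to map the index of the largest probability back to the corresponding entry of classLabels.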

Finally, we train the net, keeping the pretrained weights fixed:

trainedNet =
 NetTrain[joinedNet, trainSet, All,
  LearningRateMultipliers -> {"linear17" -> 1, _ -> 0},
  ValidationSet -> Scaled[0.1],
  MaxTrainingRounds -> 15,
  Method -> "SGD"]
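The effect of the learning rate multipliers can be illustrated with a toy gradient step in Python. This is a simplified sketch with a hypothetical `sgd_step` function and made-up weights, not NetTrain’s actual optimizer: each layer’s update is scaled by its multiplier, so a multiplier of 0 leaves the pretrained weights untouched while the new linear17 layer is free to learn.

```python
def sgd_step(weights, gradients, learning_rate, multipliers, default_multiplier=0.0):
    """One plain SGD update where each layer's step is scaled by its multiplier."""
    updated = {}
    for name, layer_weights in weights.items():
        multiplier = multipliers.get(name, default_multiplier)
        updated[name] = [
            w - learning_rate * multiplier * g
            for w, g in zip(layer_weights, gradients[name])
        ]
    return updated


# Made-up weights and gradients for one pretrained layer and the new layer.
weights = {"conv_1": [0.5, -0.25], "linear17": [0.25, 0.75]}
gradients = {"conv_1": [1.0, 1.0], "linear17": [1.0, -1.0]}

# Mirrors {"linear17" -> 1, _ -> 0}: only the new layer gets a nonzero multiplier.
new_weights = sgd_step(weights, gradients, learning_rate=0.5,
                       multipliers={"linear17": 1.0})
print(new_weights)
# prints: {'conv_1': [0.5, -0.25], 'linear17': [-0.25, 1.25]}
```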

We can check the resulting net performance by measuring the accuracy and plotting the confusion matrix for the test set (using either NetMeasurements or ClassifierMeasurements):

cm = ClassifierMeasurements[trainedNet["TrainedNet"], testSet];

cm["Accuracy"]

cm["ConfusionMatrixPlot"]

We can also check the best- and worst-classified examples. These are the test examples with the highest and lowest probabilities, respectively, on the actual class:

cm["BestClassifiedExamples" -> 5]

cm["WorstClassifiedExamples" -> 5]

Taking a quick glimpse of the worst-classified examples, we can see mushrooms with imperfect views and conditions. For example, one is covered with leaves and another appears to be in an advanced stage of decomposition.

We can test the classifier using a photo from an iNaturalist user observation:

testSet[[1]]

trainedNet["TrainedNet"][CloudGet["https://wolfr.am/SmLMk59B"], "TopProbabilities"]

It’s a good practice to save our trained model so that if we restart the session, we won’t need to retrain the net:

Export["MushroomsWolframNet.wlnet", trainedNet["TrainedNet"]]

Exporting the Neural Network through ONNX

As an intermediate step, we will need to export our trained model as an ONNX file. ONNX is an open exchange format built to represent machine learning models, so that AI developers can use the same model with a variety of frameworks. Later on, this will allow us to convert our custom model into Core ML format (.mlmodel).

To obtain the ONNX model from our trained model, we simply need to use Export:

mushroomsWLNet = Import["MushroomsWolframNet.wlnet"]

Export["MushroomsWolframNet.onnx", mushroomsWLNet]

Converting the Neural Network to Core ML

In this section, we will make extensive use of a Python package called coremltools, which Apple freely provides in order to convert external neural net models to Core ML. Core ML is the Apple framework to integrate machine learning models into iOS apps.

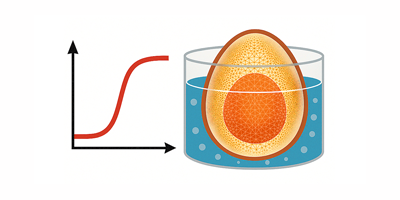

Graphic from coremltools.

In order to configure your system to evaluate external code, I recommend you follow this workflow.

Once Python has been configured for ExternalEvaluate, we need to register it as an external evaluator and start an external session:

RegisterExternalEvaluator["Python", "/opt/anaconda3/bin/python"]

session = StartExternalSession[<|"System" -> "Python", "Version" -> "3.8.3", "Executable" -> "/opt/anaconda3/bin/python"|>]

To convert an ONNX model into Core ML, we need to install two extra Python packages using the terminal:

1. coremltools package

2. onnx package

Core ML models (.mlmodel) work similarly to Wolfram Language models. They also include an encoder and a decoder for the model. So while converting the ONNX model to Core ML, we need to specify the image encoder (preprocessing arguments) and the decoder (the class labels).

If we click the Input port from our original Wolfram Language model, we will see the following panel:

mushroomsWLNet

During the conversion, we will need to specify that the input type is an image and include the mean image values for each color channel as biases. Furthermore, we will need to specify an image rescale factor since the original model pixel values range from 0 to 1 and the Core ML values range from 0 to 255.
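The scale and bias arithmetic can be checked with a short Python sketch. It assumes that the preprocessing computes scale × pixel + bias for each channel, and it uses the scale and bias values from the conversion call later in this post. The function names are hypothetical.

```python
IMAGE_SCALE = 1.0 / 255.0  # maps 0..255 pixel values to the 0..1 range
# Negated per-channel mean values, as passed to the converter later in the post.
CHANNEL_BIAS = {"red": -0.48, "green": -0.46, "blue": -0.4}


def preprocess_pixel(value_0_to_255: float, channel: str) -> float:
    """Assumed converted-model preprocessing: scale * pixel + bias."""
    return IMAGE_SCALE * value_0_to_255 + CHANNEL_BIAS[channel]


def reference_pixel(value_0_to_255: float, channel: str) -> float:
    """Original-model view: rescale to 0..1, then subtract the channel mean."""
    mean = -CHANNEL_BIAS[channel]
    return value_0_to_255 / 255.0 - mean


for channel in CHANNEL_BIAS:
    for value in (0, 128, 255):
        difference = abs(preprocess_pixel(value, channel) - reference_pixel(value, channel))
        assert difference < 1e-12
print("scale-and-bias preprocessing matches mean subtraction")
```

In other words, the biases are simply the negated mean image values, applied after the pixel values have been rescaled to the 0 to 1 range.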

coremltools allows us to specify the class labels of the model using a text file containing each class label on a new line. It is straightforward to export such a text file using Export and StringRiffle:

Export["MushroomClassLabels.txt", StringRiffle[classLabels, "\n"]]
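For readers who prefer to inspect the labels file from the Python side, here is a small sketch of the same one-label-per-line format. The helper names are hypothetical, only three of the 17 labels are used, and the file is written to a temporary folder rather than the project directory.

```python
import tempfile
from pathlib import Path


def write_class_labels(path: Path, labels) -> None:
    """Write one class label per line, with no trailing newline."""
    path.write_text("\n".join(labels), encoding="utf-8")


def read_class_labels(path: Path):
    """Read the labels back in file order."""
    return path.read_text(encoding="utf-8").splitlines()


# A subset of the class labels defined earlier in the post.
labels = ["Amanita-phalloides-toxic", "Amanita-muscaria-toxic", "Boletus-edulis-edible"]

with tempfile.TemporaryDirectory() as tmp:
    labels_path = Path(tmp) / "MushroomClassLabels.txt"
    write_class_labels(labels_path, labels)
    round_trip = read_class_labels(labels_path)

print(round_trip == labels)
# prints: True
```

The order of the lines matters: it has to match the order of the classes in the network’s output, which is why the file is generated from classLabels rather than typed by hand.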

The following code consists of three parts: (1) importing the coremltools package and specifying the path to the ONNX model; (2) the code for converting the model; and (3) the code for saving the resulting Core ML model:

ExternalEvaluate[session, "import coremltools as ct

# Specify path to ONNX model
onnx_model_path = '/Users/jofre/ownCloud/DeployNeuralNetToYouriPhone/MushroomsWolframNet.onnx'

# Convert from ONNX to Core ML
mlmodel = ct.converters.onnx.convert(onnx_model_path,
    image_input_names = ['Input'],
    mode = 'classifier',
    preprocessing_args = {
        'image_scale': 1.0 / 255.0,
        'red_bias': -0.48,
        'green_bias': -0.46,
        'blue_bias': -0.4 },
    class_labels = 'MushroomClassLabels.txt',
    minimum_ios_deployment_target = '13')

# Save the mlmodel
mlmodel.save('/Users/jofre/ownCloud/DeployNeuralNetToYouriPhone/MushroomsWolframNet.mlmodel')"]

We can directly check whether the converted model is working properly using the coremltools, NumPy and PIL packages:

ExternalEvaluate[session, "import coremltools
import numpy as np
import PIL.Image

model = coremltools.models.MLModel('MushroomsWolframNet.mlmodel')

# Load the test image and resize it to the input size the model expects.
img = PIL.Image.open('/Users/jofre/ownCloud/DeployNeuralNetToYouriPhone/ParasolTest.png')
spec = model._spec
img_width = spec.description.input[0].type.imageType.width
img_height = spec.description.input[0].type.imageType.height
img = img.resize((img_width, img_height), PIL.Image.BILINEAR)

y = model.predict({'Input': img}, usesCPUOnly=True)

def printTop3(resultsDict):
    # Put probabilities and labels into their own lists.
    probs = np.array(list(resultsDict.values()))
    labels = list(resultsDict.keys())
    # Find the indices of the 3 classes with the highest probabilities.
    top3Probs = probs.argsort()[-3:][::-1]
    # Find the corresponding labels and probabilities.
    top3Results = map(lambda x: (labels[x], probs[x]), top3Probs)
    # Print them from high to low.
    for label, prob in top3Results:
        print('%.5f %s' % (prob, label))

printTop3(y['Output'])"]

Comparing the results with the original Wolfram Language net model, we can see that the top probability is almost the same, with differences on the order of 10⁻²:

mushroomsWLNet[CloudGet["https://wolfr.am/SmLMk59B"], "TopProbabilities"]
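If you want to automate this comparison, a small Python helper along the following lines could do it. The function names are hypothetical, and the probabilities are invented for illustration only. They are not the results obtained in this post.

```python
def max_probability_difference(reference, converted):
    """Largest absolute per-class difference between two probability dictionaries."""
    labels = set(reference) | set(converted)
    return max(abs(reference.get(label, 0.0) - converted.get(label, 0.0))
               for label in labels)


def same_top_class(reference, converted):
    """True if both models rank the same class first."""
    return max(reference, key=reference.get) == max(converted, key=converted.get)


# Invented probabilities, for illustration only (not results from the post).
wolfram_probs = {"Macrolepiota-procera-edible": 0.75, "Amanita-pantherina-toxic": 0.25}
coreml_probs = {"Macrolepiota-procera-edible": 0.7421875, "Amanita-pantherina-toxic": 0.2578125}

difference = max_probability_difference(wolfram_probs, coreml_probs)
print(same_top_class(wolfram_probs, coreml_probs), difference <= 1e-2)
# prints: True True
```

A tolerance of 10⁻² matches the size of the differences described above. A much larger gap would suggest a mismatch in the preprocessing arguments or in the order of the class labels.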

Deploying the Neural Network to iOS

Finally, we only need to integrate our Core ML model into an iOS app and install it on our iPhones. For this, I will need to register as an Apple developer to download and install Xcode beta. (Note that knowing the Swift programming language won’t be necessary.)

First, we need to download the Xcode project for classifying images with Vision and Core ML that Apple provides as a tutorial. When I open the project called “Vision+ML Example.xcodeproj” with Xcode beta, I see the following window:

Once I drop/upload the model inside the Xcode project, I will see the following window for the model. The preview section allows us to test the model directly using Xcode:

Finally, we need to replace the Mobilenet Core ML classifier model with our MushroomsWolframNet model in the ImageClassificationViewController Swift file and then press the “Build and then run” button on the top left:

Before deploying the model, we need to select a development team to sign the app:

We did it! Now we just need to go hiking in a nearby forest and hope to find some mushrooms.

Here are a few examples from my latest hikes, all correctly identified:

You can also see a live test here.

Try It Yourself

Create your own custom neural network models using the Wolfram Language and export them through ONNX. Deploy and run your models locally on mobile devices and post your applications in the comments or share them on Wolfram Community. Questions or suggestions for additional functionality are also welcome in the comments below.

Additional Resources

For more on the topics mentioned in this post, visit:

- Wolfram U class: Exploring the Neural Net Framework from Building to Training

- Wolfram Neural Net Repository

- Wolfram Function Repository

- “Accessing Monarch Biodiversity Data with New Wolfram Language Functions”

