Developing a New Data Analysis and Visualization Course: Tackling an Infodemic with Computation

Around March, a few months before I accepted a Wolfram Research internship, I was very fearful, as was most of the world. We knew very little about the novel coronavirus, and the data was just not robust. On top of that, the scientific process necessarily takes time, so even the limited data we had was not being used to its full extent. In a world where too little data can quickly turn into data overload, the question didn’t seem to be how to find more data, but rather how one can extract useful and meaningful information from the data already available.

A worldwide pandemic is definitely stressful, but a worldwide pandemic without accessible and computable information is much more so. Using Wolfram technologies, and in coordination with several internal teams, I created a Wolfram U course called COVID-19 Data Analysis and Visualization to try to cut through the informational fog and find some clarity. I saw the course as a way to give everyone the power to look at data and gain insight. After all, data is knowledge, and knowledge is power.

By May, we were getting a lot more information about the pandemic, perhaps even bordering on an “infodemic”: a pandemic of incomplete and sometimes outright inaccurate information. Thankfully, I had computational thinking on my side. Ever since attending the Wolfram High School Summer Camp in 2019, I had been seeing the world through an ever-more-computational lens, and that’s exactly how I approached this challenge. I took to my notebooks and began analyzing data for fun, trying to check the validity of the claims I was hearing everywhere, in their proper context.

I was happy to see so many COVID-19 datasets in the Wolfram Data Repository. Suddenly I had easy access to everything from case numbers to the genetic sequences of SARS-CoV-2, the virus responsible for COVID-19. As data was being collected in a global effort, computational explorers like me began digging into what was available. Around the same time, in June, I applied for and was accepted to an internship at Wolfram! My intern project was to open up the process that I and many others were already involved in: a short video course that would take a Wolfram Language beginner to the point of being able to explore data the way I was. My first task was, of course, to learn everything I could. I started with the Wolfram COVID-19 resources page and was pleasantly surprised by how plentiful the resources were. I studied everything available to me, from three-hour-long livestreams to technical computational essays.

I started my internship on June 29, exactly six years after the day I arrived in the USA, which seemed like a good sign indeed. Getting into the flow of curating content, recording videos and editing them was definitely a challenge. I stuttered a whole lot in the beginning, was not too confident in my content and was in all sorts of ways a beginner. But slowly, with the guidance of my manager, Mads Bahrami, I got into a consistent workflow. Before long, I was churning out more than one video a day and meeting with topic experts to discuss content with them and my manager.

With the enormous amount of curated data available in the Wolfram Language, it is very straightforward to explore computationally whether different factors (income, age, weather, etc.) are correlated with COVID-19 outcomes, if at all. Here are two examples.

Engage with the code in this post by downloading the Wolfram Notebook

In the top 20 US counties in terms of confirmed COVID-19 cases, a slight positive correlation is found between per-capita income and COVID-19 death rate:

```mathematica
(* Top 20 US counties by confirmed cases: per-capita income vs. deaths per 10,000 people *)
Module[{list = {#["County"]["PerCapitaIncome"],
       N@10000 #["Deaths"]["LastValue"]/#["County"]["Population"]} -> #["County"] & /@
    ResourceData["Epidemic Data for Novel Coronavirus COVID-19", "USCounties"][
     TakeLargestBy[#ConfirmedCases["LastValue"] &, 20],
     {"County", "ConfirmedCases", "Deaths"}], fit},
 (* least-squares line through the {income, death rate} pairs, units stripped *)
 fit = LinearModelFit[QuantityMagnitude /@ (list // Keys // Normal), x, x];
 Show[
  ListPlot[list, PlotLabel -> "COVID-19 deaths and average income",
   AxesLabel -> {"Per capita income", "COVID-19 deaths per 10,000 people"},
   LabelingFunction -> Tooltip, ImageSize -> Large],
  Plot[fit[x], {x, 25000, 60000}, PlotStyle -> Red, ImageSize -> Large]]]
```

Across US states, a slight correlation is also found between median age and COVID-19 death rate:

```mathematica
(* All US states: median age vs. deaths per 10,000 people *)
Module[{list =
   ResourceData["Epidemic Data for Novel Coronavirus COVID-19", "USStates"][
    All, {#AdministrativeDivision["MedianAge"],
       N[10000. #Deaths["LastValue"]/#AdministrativeDivision["Population"]]} ->
      #AdministrativeDivision &], fit},
 (* least-squares line through the {median age, death rate} pairs, units stripped *)
 fit = LinearModelFit[QuantityMagnitude /@ (list // Keys // Normal), x, x];
 Show[
  ListPlot[list, PlotRange -> All, PlotLabel -> "COVID-19 deaths and median age",
   AxesLabel -> {"Median age", "Deaths per 10,000 people"},
   LabelingFunction -> Tooltip, ImageSize -> Large],
  Plot[fit[x], {x, 30, 45}, PlotStyle -> Red]]]
```

Of course, these are very primitive analyses: a correlation across a small number of regions does not by itself establish cause and effect, and one can implement much more sophisticated explorations.
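A natural first step beyond eyeballing a scatter plot is to put a number on how “slight” a correlation is. The sketch below defines a hypothetical helper, `correlationSummary` (not part of the course or any library), that takes a list of unit-free {x, y} pairs and reports Pearson’s correlation coefficient (`Correlation`), the fit’s R² and the p-value of the fitted slope (both properties of `LinearModelFit`). It could be applied to the same pairs the code above passes to `LinearModelFit`; it has not been run on the COVID-19 data here, so no results are claimed.

```mathematica
(* Hypothetical helper: summarize the strength of a linear relationship.
   pairs is a list of {x, y} numbers with units already stripped. *)
correlationSummary[pairs_?MatrixQ] :=
 Module[{xs, ys, fit, u},
  {xs, ys} = Transpose[pairs];
  (* ordinary least-squares fit y = a + b u *)
  fit = LinearModelFit[pairs, u, u];
  <|"PearsonR" -> Correlation[xs, ys],
    "RSquared" -> fit["RSquared"],
    (* p-value of the t-test on the slope; a large value means the
       trend could plausibly be noise *)
    "SlopePValue" -> fit["ParameterPValues"][[2]]|>]
```

For a single-predictor linear fit, R² is simply the square of Pearson’s r, so those two numbers carry the same information. The slope’s p-value adds a rough sense of whether the trend is distinguishable from noise, which matters when there are only 20 counties or a few dozen states.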

Along the way, I also got to learn about a whole new field: genomics. As part of the course, I was to develop a video explaining the (very large) dataset of genetic sequences of the SARS-CoV-2 virus. I knew little about genomics at the start: all I remembered was a ninth-grade biology lesson about DNA sequences. Now I had to dig through the web to wrap my head around phylogenetic trees, how to interpret them and, finally, how to code them. Luckily, the hardest part of the job had been done for me! The Wolfram Function Repository already had a PhylogeneticTreePlot function, which I used to produce a very neat-looking phylogenetic tree.

The phylogenetic tree shows the evolution of the virus geographically. While large animal species, like humans and giraffes, evolve very slowly, viruses can evolve extremely fast. Phylogenetic trees help us visualize this evolution by grouping genetic sequences according to how similar they are. By building a tree from sequences of the virus collected at different locations, we can see how this evolution is playing out across geography.

By looking at this phylogenetic tree, we can see, for example, that in this sample, Japan and Belize have SARS-CoV-2 viruses with very similar genetic sequences, while the sequences from Japan and Malaysia are less similar, even though Malaysia is geographically much closer to Japan. Observations like this offer important clues about how the SARS-CoV-2 virus evolved and spread:

```mathematica
ResourceFunction["PhylogeneticTreePlot"] @@
 Transpose[
  (* strip the trailing run of "A"s from each sequence and label it by location *)
  {StringReplace[First[#],
      StartOfString ~~ Shortest[a__] ~~ ("A" ..) ~~ EndOfString :> a],
     Row@(Rest@#)} & /@
   DeleteCases[
    (* for each location, keep its most frequent sequence *)
    Prepend[#[[1, 2 ;; -1]],
       ReverseSortBy[Tally[First /@ #], Last][[1, 1]]] & /@
     GatherBy[
      {#[[1]], #[[2]]} &@*Values /@
       (* random sample of 2000 complete genomes from human hosts *)
       RandomSample[
        Normal[ResourceData["Genetic Sequences for the SARS-CoV-2 Coronavirus"][
          Select[And[
             StringContainsQ[#GenBankTitle, "complete genome"],
             #Host === Entity["Species", "Species:HomoSapiens"]] &],
          {"Sequence", "GeographicLocation"}]],
        2000],
      Rest],
    {_, _Missing}]]
```
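To make “similar genetic sequences” a little more concrete, here is a toy sketch that compares short, made-up strings with `EditDistance`, which counts the single-character insertions, deletions and substitutions needed to turn one string into another. The sequences are invented for illustration, and this is not necessarily the distance measure PhylogeneticTreePlot uses internally; it only shows the kind of pairwise comparison that distance-based trees are built from.

```mathematica
(* Three made-up sequences (not real SARS-CoV-2 data): the 2nd differs from
   the 1st in one position, the 3rd differs from the 2nd in two positions *)
seqs = {"ATGCGTACGT", "ATGCGTACGA", "ATGAGTTCGA"};
(* symmetric matrix of pairwise edit distances; diagonal entries are 0 *)
distances = Outer[EditDistance, seqs, seqs]
```

The first two sequences form the closest pair, so a tree built from these distances would join them before bringing in the third.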

We should be careful, however: the code analyzes only a random sample of two thousand genetic sequences, so running it again may give different results! To make sure our analysis is correct, it is worth exploring the entire set of genetic sequences available to us.
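If you want a particular sample, and therefore a particular tree, to be reproducible, one option is to fix the random seed with `SeedRandom` before calling `RandomSample`. A minimal sketch, using an arbitrary seed value of 2020 and a stand-in list instead of the real dataset:

```mathematica
(* Seeding the generator makes RandomSample return the same sample each time *)
SeedRandom[2020];
sample1 = RandomSample[Range[100], 5];
SeedRandom[2020];
sample2 = RandomSample[Range[100], 5];
sample1 === sample2  (* the two samples are identical *)
```

Fixing the seed makes a run repeatable but does not remove sampling variability. Rerunning the tree code with a few different seeds is a cheap way to check whether groupings such as Japan and Belize hold up across samples.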

I also came across a lot of other cool things that I never imagined I could visualize! One of my favorite datasets in the Data Repository was the patient medical dataset. It is perhaps the largest of all the datasets I analyzed, with over 690,000 cells filled with all kinds of information. The most interesting visualization I made from it could be very helpful in studying and implementing contact tracing, a key to slowing down the pandemic.

By combining the travel histories and places of residence of patients, we can visualize the spread of COVID-19 across borders:

```mathematica
(* Draw an arrow from each patient's travel-history locations to their city *)
GeoGraphics[{Red, Thickness[.001], Arrowheads[0.015],
  ResourceData["Patient Medical Data for Novel Coronavirus COVID-19"][
   (* keep only patients with a complete travel history and a known city *)
   Select[(FreeQ[#TravelHistoryLocation, _Missing] && ! MissingQ[#City]) &],
   Arrow@GeoPath@Append[#TravelHistoryLocation, #City] &]},
 GeoRange -> "World", PlotLabel -> "Patient Travel History", ImageSize -> Large]
```

By the time the course was finished, I was a much better educator, content creator and coder, and I knew firsthand how powerful computation can be in understanding this pandemic.

This was far from a personal project, however. In every facet of it, from the ideas and the execution of the videos to the nitty-gritty of grammar and audio settings, I was assisted by different teams at Wolfram Research. Perhaps at the top of that list is my manager, Mads, with whom I communicated every day, sending him ideas to consider, notebooks to review and videos to listen to. Mads helped facilitate many discussions with other people at Wolfram to get their technical feedback. As I mentioned previously, I am no expert in genetic analysis, and neither is Mads. But John Cassel is! With his help, I was able to develop a coherent, accurate and helpful video covering key aspects of the computational analysis of genetic data.

The two toughest videos to create were perhaps the last two, on epidemic modeling. My cycle of curating lesson plans, typing up notebooks and recording videos was rather hectic: inevitably, there was a lot of “buffer time” on any one video. I might have had to wait for Mads’s feedback, someone else’s feedback, a meeting with a content expert and so on. So, to stay productive, I would bounce around from video to video. However, Mads told me not to worry about the epidemic modeling videos until we were done with the rest of the course. Those would take a lot of time.

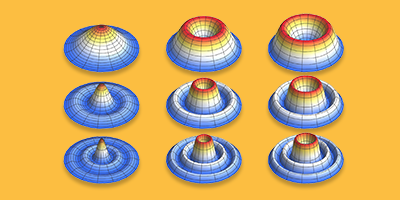

And he was right! I was then exposed to a whole new sphere of computational analysis: modeling. Luckily, we knew someone with just the right expertise to help out: Robert Nachbar. Robert was key in the development of these videos. Not only was his CompartmentalModeling package helpful in schematically representing epidemic models, but his detailed feedback also polished up the epidemic modeling videos considerably.

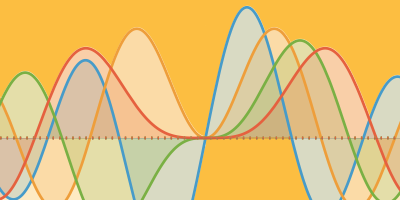

I ended up exploring some simple compartmental models, which epidemiologists use to model epidemics. The simplest is the SIR (susceptible–infectious–recovered) model. Essentially, it models the epidemic as people moving from one compartment to the next (from susceptible to infectious to recovered) at certain rates, which are expressed through a system of differential equations.
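Written out with the same symbols as the code below, the SIR system is as follows. Here s, i and r are the fractions of the population that are susceptible, infectious and recovered, b is the transmission rate and k is the recovery rate, so 1/k is the average infectious period (in days, since time is measured in days):

$$
\frac{ds}{dt} = -b\,s\,i, \qquad
\frac{di}{dt} = b\,s\,i - k\,i, \qquad
\frac{dr}{dt} = k\,i
$$

Adding the three equations gives d(s + i + r)/dt = 0, so the three fractions always sum to 1 (here, 0.9999 + 0.0001 + 0).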

We can solve this system numerically with NDSolve and easily visualize the solution:

```mathematica
DynamicModule[{equationS, equationI, equationR, b, k, solution},
 (* SIR equations: s, i, r are the susceptible, infectious and recovered fractions *)
 equationS = s'[t] == -b s[t] i[t];
 equationI = i'[t] == b s[t] i[t] - k i[t];
 equationR = r'[t] == k i[t];
 b = .5;    (* transmission rate per day *)
 k = 1/14;  (* recovery rate per day, i.e. a 14-day infectious period *)
 solution = NDSolve[{equationS, equationI, equationR,
    s[0] == 0.9999, i[0] == 0.0001, r[0] == 0.0000}, {s, r, i}, {t, 0, 100}];
 Plot[{First[s /. solution][t], First[i /. solution][t], First[r /. solution][t]},
  {t, 0, 100}, PlotRange -> {0, 1.01},
  PlotStyle -> {Blue, Red, Green},
  PlotLegends -> {"Susceptible", "Infected", "Recovered"},
  AxesLabel -> {"Number of days", "Proportion of population"},
  ImageSize -> Large]]
```
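The shape of this plot can be checked against the equations. Factoring the infectious equation shows that infections grow only while the susceptible fraction exceeds k/b. At the start, when s is close to 1, this gives the basic reproduction number R₀ = b/k. For the values used above:

$$
\frac{di}{dt} = (b\,s - k)\,i
\;\Rightarrow\;
\frac{di}{dt} > 0 \iff s > \frac{k}{b},
\qquad
R_0 = \frac{b}{k} = 0.5 \times 14 = 7,
\qquad
s_{\text{peak}} = \frac{k}{b} = \frac{1}{7} \approx 0.143
$$

So each infectious person initially infects about seven others, and the red curve should peak once the susceptible fraction has dropped to about 0.14.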

We can also vary the parameters and play with the model.

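```mathematica
DynamicModule[{equationS, equationI, equationR},
 equationS = s'[t] == -b s[t] i[t];
 equationI = i'[t] == b s[t] i[t] - k i[t];
 equationR = r'[t] == k i[t];
 Manipulate[
  Module[{sol},
   (* temporarily set b and k to the slider values, then solve numerically *)
   sol = First@Block[{b = x, k = y},
      NDSolve[{equationS, equationI, equationR,
        s[0] == 0.9999, i[0] == 0.0001, r[0] == 0.0000}, {s, i, r}, {t, 0, 100}]];
   (* plot all three curves together so each gets its own color and legend *)
   Plot[Evaluate[{s[t], i[t], r[t]} /. sol], {t, 0, 100},
    PlotRange -> All, AxesOrigin -> {0, 0},
    PlotStyle -> {Blue, Red, Green},
    PlotLegends -> {"Susceptible", "Infected", "Recovered"}]],
  {{x, .5, "b (transmission rate)"}, 0, 1},
  {{y, .1, "k (1/time of recovery)"}, 0, 1},
  SaveDefinitions -> True]]
```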
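The sliders make it easy to eyeball when infections peak. To read that off numerically, here is a sketch with a hypothetical helper, `sirPeak` (not part of the course), that solves the same SIR equations with the same initial conditions for given b and k and then samples i(t) every 0.01 days to find its maximum. Because di/dt = (b s − k) i, the infected fraction stops growing exactly when s falls to k/b, so the susceptible fraction reported at the peak should come out close to k/b, which doubles as a sanity check on the numerics.

```mathematica
(* Hypothetical helper: solve the SIR model for transmission rate b and
   recovery rate k, then locate the infection peak on a fine time grid *)
sirPeak[b_?NumericQ, k_?NumericQ, tmax_ : 100] :=
 Module[{s, i, r, t, sol, sSol, iSol, peak},
  sol = First@NDSolve[{
      s'[t] == -b s[t] i[t],
      i'[t] == b s[t] i[t] - k i[t],
      r'[t] == k i[t],
      s[0] == 0.9999, i[0] == 0.0001, r[0] == 0.},
     {s, i, r}, {t, 0, tmax}];
  {sSol, iSol} = {s, i} /. sol;
  (* sample i(t) every 0.01 days and keep the {time, value} pair with the largest value *)
  peak = First@MaximalBy[Table[{t0, iSol[t0]}, {t0, 0, tmax, 0.01}], Last];
  <|"PeakDay" -> peak[[1]], "PeakInfected" -> peak[[2]],
    "SusceptibleAtPeak" -> sSol[peak[[1]]]|>]
```

For example, `sirPeak[0.5, 1/14]` uses the parameter values from the first plot, so its `"SusceptibleAtPeak"` value should be close to 1/7 ≈ 0.143. A grid search is cruder than calling FindMaximum on the interpolating function, but it cannot get stuck because of a poor starting point.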

At the end of the day, this was far from a single-person project. From Mads’s constant feedback to the video team’s (very) keen ear, this journey would not be complete without my colleagues and Wolfram Community. And for that, I’m extremely thankful.

In the spirit of this post, I encourage you to play around and experiment with some of the visualizations I included here and explore even more on your own. I’m very happy with the final result and hope you will take the course and learn some exciting data analysis and visualization skills you can apply to your next project! If you would like a more detailed look beyond what is covered in the seven original videos, consider signing up for the upcoming Study Group that accompanies the course.

Check out the COVID-19 Data Analysis and Visualization course and accompanying Study Group, plus a variety of other courses for students and professionals at Wolfram U.

Hi Hamza

Thank you for your detailed article on the data analysis and visualization of COVID-19. I was interested in your statement and diagram that “a slight positive correlation is found between per-capita income and COVID-19 death rate”. I would think that such a result is likely due to confounding: both variables are correlated with age, which is slightly hinted at in your next diagram of median age and death rate. There is a very strong correlation between age and COVID death rate, as well as a correlation between age and income. The combination of these two relationships would result in a correlation between COVID death rate and income. It is a well-understood medical phenomenon that as people grow older, their immune systems become weaker against new viruses.

Regards

Michael