Learning to Listen: Neural Networks Application for Recognizing Speech

Introduction

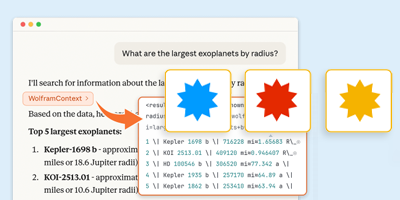

Recognizing words is one of the simplest tasks a human can do, yet it has proven extremely difficult for machines to reach similar levels of performance. Things have changed with the ubiquity of machine learning and neural networks, though: modern techniques perform dramatically better than those of just a few years ago. In this post, I’m excited to show a reduced but practical and educational version of the speech recognition problem, in which we’ll consider only a limited set of words. This has two main advantages: first, we have easy access to a dataset through the Wolfram Data Repository (the Spoken Digit Commands dataset), and, maybe most importantly, all of the classifiers/networks I’ll present can be trained in a reasonable time on a laptop.

It’s been about two years since the initial introduction of the Audio object into the Wolfram Language, and we are thrilled to see so many interesting applications of it. One of the main additions to Version 11.3 of the Wolfram Language was tight integration of Audio objects into our machine learning and neural net framework, and this will be a cornerstone in all of the examples I’ll be showing today.

Without further ado, let’s squeeze out as much information as possible from the Spoken Digit Commands dataset!

The Data

Let’s get started by accessing and inspecting the dataset a bit:

ro=ResourceObject["Spoken Digit Commands"]

The dataset is a subset of the Speech Commands dataset released by Google. We wanted to have a “spoken MNIST,” which would let us produce small, self-contained examples of machine learning on audio signals. Since the Spoken Digit Commands dataset is a ResourceObject, it’s easy to get all the training and testing data within the Wolfram Language:

trainingData=ResourceData[ro,"TrainingData"];
testingData=ResourceData[ro,"TestData"];
RandomSample[trainingData,3]//Dataset

One important thing we made sure of is that the speakers in the training and testing sets are different. This means that in the testing phase, the trained classifier/network will encounter speakers that it has never heard before.

Intersection[trainingData[[All,"SpeakerID"]],testingData[[All,"SpeakerID"]]]

The possible output values are the digits from 0 to 9:

classes=Union[trainingData[[All,"Output"]]]

Conveniently, the length of all the input data is between 0.5 and 1 seconds, with the majority of the signals being one second long:

Dataset[trainingData][Histogram[#,ScalingFunctions->"Log"]&@*Duration,"Input"]

Encoders

In Version 11.3, we built a collection of audio encoders in NetEncoder and properly integrated it into the rest of the machine learning and neural net framework. Now we can seamlessly extract features from a large collection of audio recordings; inject them into a net; and train, test and evaluate networks for a variety of applications.

Since there are multiple features that one might want to extract from an audio signal, we decided that it was a good idea to have one encoder per feature rather than a single generic "Audio" one. Here is the full list:

• "Audio"

• "AudioSTFT"

• "AudioSpectrogram"

• "AudioMelSpectrogram"

• "AudioMFCC"

The first step (which is common in all encoders) is the preprocessing: the signal is reduced to a single channel, resampled to a fixed sample rate and can be padded or trimmed to a specified duration.

The simplest one is NetEncoder["Audio"], which just returns the raw waveform:

encoder=NetEncoder["Audio"]

encoder[RandomChoice[trainingData]["Input"]]//Flatten//ListLinePlot

The starting point for all of the other audio encoders is the short-time Fourier transform, where the signal is partitioned in (potentially overlapping) chunks, and the Fourier transform is computed on each of them. This way we can get both time (since each chunk is at a very specific time) and frequency (thanks to the Fourier transform) information. We can visualize this process by using the Spectrogram function:

a=AudioGenerator[{"Sin",TimeSeries[{{0,1000},{1,4000}}]},2];
Spectrogram[a]

The main parameters for this operation that are common to all of the frequency domain features are WindowSize and Offset, which control the sizes of the chunks and their offsets.
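As a rough illustration of the chunking described above, the following sketch computes a bare-bones short-time Fourier transform by hand. The toy sine signal, the Hann window and all variable names are assumptions made for this example; the audio encoders may frame, pad and window the signal differently internally.

```wolfram
(* Hypothetical toy signal: one second of a 440 Hz sine sampled at 16 kHz *)
sampleRate = 16000;
signal = Table[Sin[2. Pi 440 t/sampleRate], {t, 0, sampleRate - 1}];

(* Same window size and offset as used for the encoders in this post *)
windowSize = 1024;
offset = 570;

(* Chunks of windowSize samples whose starting points are offset samples apart *)
chunks = Partition[signal, windowSize, offset];

(* Taper each chunk with a Hann window, then take the magnitude of its Fourier transform *)
window = Array[HannWindow, windowSize, {-0.5, 0.5}];
stft = Abs[Fourier[window*#]] & /@ chunks;

Dimensions[stft]
(* {27, 1024}: 27 complete chunks, 1024 frequency bins each *)
```

A larger offset gives fewer chunks (coarser time resolution), while a larger window size gives more frequency bins per chunk.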

Each NetEncoder supports the "TargetLength" option. If this is set to a specific number, the input audio will be trimmed or padded to the correct duration; otherwise, the length of the output of the NetEncoder will depend on the length of the original signal.
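The effect of a fixed "TargetLength" can be mimicked on a plain matrix. This is only a sketch: fixLength is a hypothetical helper, it operates on a feature matrix rather than on the audio signal, and it pads with zeros, which may not match what the encoder does internally.

```wolfram
(* Hypothetical helper: trim a sequence of feature vectors to at most n steps,
   then zero-pad it at the end so that it has exactly n steps *)
fixLength[seq_?MatrixQ, n_Integer?Positive] :=
  PadRight[Take[seq, UpTo[n]], {n, Last[Dimensions[seq]]}]

(* Stand-ins for a short and a long recording, 28 coefficients per step *)
short = ConstantArray[1., {20, 28}];
long = ConstantArray[1., {35, 28}];

Dimensions /@ {fixLength[short, 28], fixLength[long, 28]}
(* {{28, 28}, {28, 28}} *)
```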

For the scope of this blog post, I’ll be using the "AudioMFCC" NetEncoder, since it is a feature that packs a lot of information about the signal while keeping the dimensionality low:

encoder=NetEncoder[{"AudioMFCC","TargetLength"->All,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 570,"NumberOfCoefficients"->28,"Normalization"->True}]
encoder[RandomChoice[trainingData]["Input"]]//Transpose//MatrixPlot

These encoders are quite fast: this specific one on my not-very-new machine runs through all 10,000 examples in slightly more than two seconds:

encoder[trainingData[[All,"Input"]]];//AbsoluteTiming

Machine Learning, the Automated Way

Now we have the data and an efficient way of extracting features. Let’s find out what Classify can do for us.

To start, let’s massage our data into a format that Classify would be happier with:

classifyTrainingData = #Input -> #Output & /@ trainingData;
classifyTestingData = #Input -> #Output & /@ testingData;

Classify does have some trouble dealing with variable-length sequences (which hopefully will be improved on soon), so we’ll have to find ways to work around that.

Mean of MFCC

To make the problem simpler, we can get rid of the variable length of the features. One naive way is to compute the mean of the sequence:

cl=Classify[classifyTrainingData,FeatureExtractor->(Mean@*encoder),PerformanceGoal->"Quality"];

The result is a bit disheartening, but not unexpected, since we are trying to summarize each signal with only 28 parameters. Not stunning.

cm=ClassifierMeasurements[cl,classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]

Adding Some Statistics

To improve the results of Classify, we can feed it more information about the signal by adding the standard deviation of each sequence as well:

cl=Classify[classifyTrainingData,FeatureExtractor->(Flatten[{Mean[#],StandardDeviation[#]}]&@*encoder),PerformanceGoal->"Quality"];

Some effort does pay off:

cm=ClassifierMeasurements[cl,classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]

Even More Statistics

We can follow this strategy a bit more, and also add the Kurtosis of the sequence:

cl=Classify[classifyTrainingData,FeatureExtractor->(Flatten[{Mean[#],StandardDeviation[#],Kurtosis[#]}]&@*encoder),PerformanceGoal->"Quality"];

The improvement is not as huge, but it is there:

cm=ClassifierMeasurements[cl,classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]
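To see how much information each of the three feature extractors above passes on to Classify, we can apply the same statistics to a toy matrix. The random matrix fakeMFCC is a made-up stand-in for one encoded recording (20 time steps of 28 coefficients), not real data.

```wolfram
(* Made-up stand-in for one encoded recording: 20 time steps x 28 coefficients *)
SeedRandom[1];
fakeMFCC = RandomReal[{-1, 1}, {20, 28}];

(* Each statistic is computed column-wise, i.e. once per coefficient over all time steps *)
meanFeatures = Mean[fakeMFCC];
meanSDFeatures = Flatten[{Mean[fakeMFCC], StandardDeviation[fakeMFCC]}];
allFeatures = Flatten[{Mean[fakeMFCC], StandardDeviation[fakeMFCC], Kurtosis[fakeMFCC]}];

Length /@ {meanFeatures, meanSDFeatures, allFeatures}
(* {28, 56, 84} *)
```

Whatever the length of the recording, each extractor produces a vector of fixed length, which is what lets Classify handle the data.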

Fixed-Length Sequences

We could continue dripping information about statistics of the sequences, with smaller and smaller returns. But with this specific dataset, we can follow a simpler strategy: remember how we noticed that most recordings were about 1 second long? That means that if we fix the length of the extracted feature to the equivalent of 1 second (about 28 frames) using the "TargetLength" option, the encoder will take care of doing the padding or trimming as appropriate. This way, all the inputs to Classify will have the same dimensions of {28,28}:

encoderFixed=NetEncoder[{"AudioMFCC","TargetLength"->28,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 570,"NumberOfCoefficients"->28,"Normalization"->True}]

cl=Classify[classifyTrainingData,FeatureExtractor->encoderFixed,PerformanceGoal->"DirectTraining"];

The training time is longer, but we do still get an accuracy bump:

cm=ClassifierMeasurements[cl,classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]

This is about as far as we can get with Classify and low-level features. Time to ditch the automation and to bring out the neural networks machinery!

Convolutional Neural Network

Let’s remember that we’re playing with a spoken version of MNIST, so what could be a better starting place than LeNet? This is a network that is often used as a benchmark on the standard image MNIST, and is very fast to train (even without a GPU).

We’ll use the same strategy as in the last Classify example: we’ll fix the length of the signals to about one second, and we’ll tune the parameters of the NetEncoder so that the input will have the same dimensions as the MNIST images. This is one of the reasons we can confidently use a CNN architecture for this job: we are dealing with 2D matrices (images, in essence; that’s actually how we usually look at MFCC), and we want the network to infer information from their structures.

Let’s grab LeNet from NetModel:

lenet=NetModel["LeNet Trained on MNIST Data","UninitializedEvaluationNet"]

Since the "AudioMFCC" NetEncoder produces two-dimensional data (time x frequency), and the net requires three-dimensional inputs (where the first dimension is the channel dimension), we can use ReplicateLayer to make them compatible:

lenet=NetPrepend[lenet,ReplicateLayer[1]]
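The effect of that layer can be checked in isolation. In this small sketch, the random matrix features is a made-up stand-in for one encoded example, and the explicit "Input" shape is given only so that the layer can be applied on its own.

```wolfram
(* Made-up stand-in for one encoded example: 28 frames x 28 coefficients *)
features = RandomReal[{-1, 1}, {28, 28}];

(* ReplicateLayer[1] adds a leading dimension of size 1,
   which plays the role of the channel dimension expected by the convolutions *)
addChannel = ReplicateLayer[1, "Input" -> {28, 28}];

Dimensions[addChannel[features]]
(* {1, 28, 28} *)
```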

Using NetReplacePart, we can attach the "AudioMFCC" NetEncoder to the input and the appropriate NetDecoder to the output:

audioLeNet=NetReplacePart[lenet,
  {
    "Input"->NetEncoder[{"AudioMFCC","TargetLength"->28,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 570,"NumberOfCoefficients"->28,"Normalization"->True}],
    "Output"->NetDecoder[{"Class",classes}]
  }
]

To speed up convergence and prevent overfitting, we can use NetReplace to add a BatchNormalizationLayer after every convolution:

audioLeNet=NetReplace[audioLeNet,{x_ConvolutionLayer:>NetChain[{x,BatchNormalizationLayer[]}]}]

NetInformation allows us to visualize at a glance the net’s structure:

NetInformation[audioLeNet,"SummaryGraphic"]

Now our net is ready for training! After defining a validation set on 5% of the training data, we can let NetTrain worry about all hyperparameters:

resultObject=NetTrain[audioLeNet,trainingData,All,ValidationSet->Scaled[.05]]

Seems good! Now we can use ClassifierMeasurements on the net to measure the performance:

cm=ClassifierMeasurements[resultObject["TrainedNet"],classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]

It looks like the added effort paid off!

Recurrent Neural Network

We can also embrace the variable-length nature of the problem by specifying "TargetLength"→All in the encoder:

encoder=NetEncoder[{"AudioMFCC","TargetLength"->All,"NumberOfCoefficients"->28,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 571,"Normalization"->True}]

This time we’ll use an architecture based on the GatedRecurrentLayer. Used on its own, it returns its state for each time step, but we are only interested in the classification of the entire sequence, i.e. we want a single output for all time steps. We can use SequenceLastLayer to extract the last state of the sequence. After that, we can add a couple of fully connected layers to do the classification:

rnn=NetChain[{
    GatedRecurrentLayer[32,"Dropout"->{"VariationalInput"->0.3}],
    GatedRecurrentLayer[64,"Dropout"->{"VariationalInput"->0.3}],
    SequenceLastLayer[],
    LinearLayer[64],
    Ramp,
    LinearLayer[Length@classes],
    SoftmaxLayer[]},
  "Input"->encoder,
  "Output"->NetDecoder[{"Class",classes}]
]

Again, we’ll let NetTrain worry about all hyperparameters:

resultObjectRNN=NetTrain[rnn,trainingData,All,ValidationSet->Scaled[.05]]

… and measure the performance:

cm=ClassifierMeasurements[resultObjectRNN["TrainedNet"],classifyTestingData];
cm["Accuracy"]
cm["ConfusionMatrixPlot"]

It seems that treating the input as a pure sequence and letting the network figure out how to extract meaning from it works quite well!

An Interlude

Now that we have some trained networks, we can play with them a bit. First of all, let’s take the recurrent network and chop off the last two layers:

choppedNet=NetTake[resultObjectRNN["TrainedNet"],{1,5}]

This leaves us with something that produces a vector of 64 numbers for each input signal. We can try to use this chopped network as a feature extractor and plot the results:

FeatureSpacePlot[Style[#["Input"],ColorData[97][#["Output"]+1]]->#["Output"]&/@testingData,FeatureExtractor->choppedNet]

It looks like the various classes get properly separated!

We can also record a signal, and test the trained network on it:

a=AudioTrim@AudioCapture[]

resultObjectRNN["TrainedNet"][a]

RNN Using CTC Loss

We can attempt something more adventurous on this dataset: up until now, we have simply done classification (a sequence goes in, a single class comes out). What if we tried transduction: a sequence (the MFCC features) goes in, and another sequence (the characters) comes out?

First of all, let’s add string labels to our data:

labels = <|0 -> "zero", 1 -> "one", 2 -> "two", 3 -> "three", 4 -> "four", 5 -> "five", 6 -> "six", 7 -> "seven", 8 -> "eight", 9 -> "nine"|>;
trainingDataString = Append[#, "Target" -> labels[#Output]] & /@ trainingData;
testingDataString = Append[#, "Target" -> labels[#Output]] & /@ testingData;

We need to remember that once trained, this will not be a general speech-recognition network: it will only have been exposed to one word at a time, only to a limited set of characters and only 10 words!

Union[Flatten@Characters@Values@labels]//Sort

A recurrent architecture would output a sequence of the same length as the input, which is not what we want. Luckily, we can use the CTCBeamSearch NetDecoder to take care of this. Say that the input sequence is n steps long, and the decoding has m different classes: the NetDecoder will expect an input of dimensions n × (m + 1) (there are m possible states, plus a special blank character). Given this information, the decoder will find the most likely sequence of states by collapsing all of the ones that are not separated by the blank symbol.
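The collapsing rule can be illustrated with a few lines of plain Wolfram Language. This is a sketch of the simpler greedy variant (it collapses one given path), not of the beam search that the decoder performs; ctcCollapse, the "_" blank symbol and the example paths are all made up for this illustration.

```wolfram
(* Hypothetical helper: merge runs of identical symbols, then drop the blanks *)
ctcCollapse[path_List, blank_] := DeleteCases[First /@ Split[path], blank]

blank = "_";

(* One symbol per time step, as if we had picked the most likely one at each step *)
path = {"s", "s", "_", "i", "i", "_", "x", "x", "_", "_"};
StringJoin[ctcCollapse[path, blank]]
(* "six" *)

(* The blank is what allows a genuinely doubled letter to survive the merge *)
StringJoin[ctcCollapse[{"t", "h", "r", "e", "_", "e"}, blank]]
(* "three" *)
```

Ten time steps are reduced to a three-character string, which is how the output can be shorter than the input sequence.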

Another difference with the previous architecture will be the use of NetBidirectionalOperator. This operator applies a net to a sequence and its reverse, catenating both results into one single output sequence:

net=NetGraph[{NetBidirectionalOperator@GatedRecurrentLayer[64,"Dropout"->{"VariationalInput"->0.4}],
    NetBidirectionalOperator@GatedRecurrentLayer[64,"Dropout"->{"VariationalInput"->0.4}],
    NetMapOperator[{LinearLayer[128],Ramp,LinearLayer[],SoftmaxLayer[]}]},
  {NetPort["Input"]->1->2->3->NetPort["Target"]},
  "Input"->NetEncoder[{"AudioMFCC","TargetLength"->All,"NumberOfCoefficients"->28,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 571,"Normalization"->True}],
  "Target"->NetDecoder[{"CTCBeamSearch",Alphabet[]}]]

To train the network, we need a way to compute the loss that takes the decoding into account. This is what the CTCLossLayer is for:

trainedCTC=NetTrain[net,trainingDataString,LossFunction->CTCLossLayer["Target"->NetEncoder[{"Characters",Alphabet[]}]],ValidationSet->Scaled[.05],MaxTrainingRounds->20];

Let’s pick a random example from the test set:

a=RandomChoice@testingDataString

Look at how the trained network behaves:

trainedCTC[a["Input"]]

We can also look at the output of the net just before the CTC decoding takes place. This represents the probability of each character per time step:

probabilities=NetReplacePart[trainedCTC,"Target"->None][a["Input"]];
ArrayPlot[Transpose@probabilities,DataReversed->True,FrameTicks->{Thread[{Range[26],Alphabet[]}],None}]

We can also show these probabilities superimposed on the spectrogram of the signal:

Show[{ArrayPlot[Transpose@probabilities,DataReversed->True,FrameTicks->{Thread[{Range[26],Alphabet[]}],None}],Graphics@{Opacity[.5],Spectrogram[a["Input"],DataRange->{{0,Length[probabilities]},{0,27}},PlotRange->All][[1]]}}]

There is definitely the possibility that the network would make small spelling mistakes (e.g. “sixo” instead of “six”). We can visually inspect these spelling mistakes by applying the net to all classes and get a WordCloud of them:

WordCloud[StringJoin/@trainedCTC[#[[All,"Input"]]]]&/@GroupBy[testingDataString,Last]

Most of these spelling mistakes are quite small, and a simple Nearest function might be enough to correct them:

nearest=First@*Nearest[Values@labels];
nearest["sixo"]

To measure the performance of the net and the Nearest function, first we need to define a function that, given an output for the net (a list of characters), computes the probability per each class:

probs=AssociationThread[Values[labels]->0];
getProbabilities[chars:{___String}]:=Append[probs,nearest[StringJoin[chars]]->1]

Let’s check that it works:

getProbabilities[{"s","i","x","o"}]
getProbabilities[{"f","o","u","r"}]

Now we can use ClassifierMeasurements by giving an association of probabilities and the correct labels per each example as input:

cm=ClassifierMeasurements[getProbabilities/@trainedCTC[testingDataString[[All,"Input"]]],testingDataString[[All,"Target"]]]

The accuracy is quite high!

cm["Accuracy"]
cm["ConfusionMatrixPlot"]

Encoder/Decoder

Up until now, the architectures we have been experimenting with have been fairly straightforward. We can now attempt to do something more ambitious: an encoder/decoder architecture. The basic idea is that we’ll have two main components in the net: the encoder, whose job is to encode all the information about the input features into a single vector (of 128 elements, in our case); and the decoder, which will take this vector (the “encoded” version of the input) and be able to produce a “translation” of it as a sequence of characters.

Let’s define the NetEncoder that will deal with the strings:

targetEnc=NetEncoder[{"Characters",{Alphabet[],{StartOfString,EndOfString}->Automatic},"UnitVector"}]

… and the one that will deal with the Audio objects:

inputEnc=NetEncoder[{"AudioMFCC","TargetLength"->All,"NumberOfCoefficients"->28,"SampleRate"->16000,"WindowSize" -> 1024,"Offset"-> 571,"Normalization"->True}]

Our encoder network will consist of a single GatedRecurrentLayer and a SequenceLastLayer to extract the last state, which will become our encoded representation of the input signal:

encoderNet=NetChain[{GatedRecurrentLayer[128,"Dropout"->{"VariationalInput"->0.3}],SequenceLastLayer[]}]

The decoder network will take a vector of 128 elements and a sequence of vectors as input, and will return a sequence of vectors:

decoderNet=NetGraph[{
    SequenceMostLayer[],
    GatedRecurrentLayer[128,"Dropout"->{"VariationalInput"->0.3}],
    NetMapOperator[LinearLayer[]],
    SoftmaxLayer[]},
  {NetPort["Input"]->1->2->3->4,
   NetPort["State"]->NetPort[2,"State"]}
]

We then need to define a network to train the encoder and decoder. This configuration is usually called a “teacher forcing” network:

teacherForcingNet=NetGraph[<|"encoder"->encoderNet,"decoder"->decoderNet,"loss"->CrossEntropyLossLayer["Probabilities"],"rest"->SequenceRestLayer[]|>,
  {NetPort["Input"]->"encoder"->NetPort["decoder","State"],
   NetPort["Target"]->NetPort["decoder","Input"],
   "decoder"->NetPort["loss","Input"],
   NetPort["Target"]->"rest"->NetPort["loss","Target"]},
  "Input"->inputEnc,"Target"->targetEnc]

Using NetInformation, we can look at the whole structure with one glance:

NetInformation[teacherForcingNet,"FullSummaryGraphic"]

The idea is that the decoder is presented with the encoded input and most of the target, and its job is to predict the next character. We can now go ahead and train the net:

trainedEncDec=NetTrain[teacherForcingNet,trainingDataString,ValidationSet->Scaled[.05]]
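The shifted pairing behind teacher forcing (SequenceMostLayer on the decoder input, SequenceRestLayer on the loss target) can be spelled out on a plain list of characters. This is only an illustration using the word "six"; the variable names are made up, and the real net works on unit-vector encodings rather than on the characters themselves.

```wolfram
(* The target string, wrapped in the start and end markers *)
chars = Join[{StartOfString}, Characters["six"], {EndOfString}];

(* The decoder sees everything but the last element... *)
decoderInput = Most[chars];

(* ...and is asked to predict everything but the first one *)
lossTarget = Rest[chars];

Thread[decoderInput -> lossTarget]
(* {StartOfString -> "s", "s" -> "i", "i" -> "x", "x" -> EndOfString} *)
```

At every position the decoder is shown the correct previous character, not its own earlier guess.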

Now let’s inspect what happened. First of all, we have a trained encoder:

trainedEncoder=NetReplacePart[NetExtract[trainedEncDec,"encoder"],"Input"->inputEnc]

This takes an Audio object and outputs a single vector of 128 elements. Hopefully, all of the interesting information of the original signal is included here:

example=RandomChoice[testingDataString]

Let’s use the trained encoder to encode the example input:

encodedVector=trainedEncoder[example["Input"]];
ListLinePlot[encodedVector]

Of course, this doesn’t tell us much on its own, but we could use the trained encoder as a feature extractor to visualize the whole testing set:

FeatureSpacePlot[Style[#["Input"],ColorData[97][#["Output"]+1]]->#["Output"]&/@testingData,FeatureExtractor->trainedEncoder]

To extract information from the encoded vector, we need help from our trusty decoder (which has been trained as well):

trainedDecoder=NetExtract[trainedEncDec,"decoder"]

Let’s add some processing of the input and output:

decoder=NetReplacePart[trainedDecoder,{"Input"->targetEnc,"Output"->NetDecoder[targetEnc]}]

If we feed the decoder the encoded state and a seed string to start the reconstruction and iterate the process, the decoder will do its job nicely:

res=decoder[<|"State"->encodedVector,"Input"->"c"|>]
res=decoder[<|"State"->encodedVector,"Input"->res|>]
res=decoder[<|"State"->encodedVector,"Input"->res|>]

We can make this decoding process more compact, though; we want to construct a net that will compute the output automatically until the end-of-string character is reached. As a first step, let’s extract the two main components of the decoder net:

gru=NetExtract[trainedEncDec,{"decoder",2}]
linear=NetExtract[trainedEncDec,{"decoder",3,"Net"}]

Define some additional processing of the input and output of the net that includes special classes to indicate the start and end of the string:

classEnc=NetEncoder[{"Class",Append[Alphabet[],StartOfString],"UnitVector"}];
classDec=NetDecoder[{"Class",Append[Alphabet[],EndOfString]}];

Define a character-level predictor that takes a single character, runs one step of the GatedRecurrentLayer and produces a single softmax prediction:

charPredictor=NetChain[{ReshapeLayer[{1,27}],gru,ReshapeLayer[{128}],linear,SoftmaxLayer[]},"Input"->classEnc,"Output"->classDec]

Now we can use NetStateObject to inject the encoded vector into the state of the recurrent layer:

sobj=NetStateObject[charPredictor,<|{2,"State"}->encodedVector|>]

If we now feed this predictor the StartOfString character, this will predict the next character:

sobj[StartOfString]

Then we can iterate the process:

sobj[%]
sobj[%]
sobj[%]

We can now encapsulate this process in a single function:

predict[input_]:=Module[{encoded,sobj,res},
  encoded=trainedEncoder[input];
  sobj=NetStateObject[charPredictor,<|{2,"State"}->encoded|>];
  res=NestWhileList[sobj,StartOfString,#=!=EndOfString&];
  StringJoin@res[[2;;-2]]
]

This way, we can directly compute the full output:

predict[example["Input"]]

Again, we need to define a function that, given an output for the net, computes the probability per each class:

probs=AssociationThread[Values[labels]->0];
getProbabilities[in_]:=Append[probs,nearest@predict[in]->1];

Now we can use ClassifierMeasurements by giving as input an association of probabilities and the correct labels per each example:

cm=ClassifierMeasurements[getProbabilities/@testingDataString[[All,"Input"]],testingDataString[[All,"Target"]]]

cm["Accuracy"]
cm["ConfusionMatrixPlot"]

Audio signals are less ubiquitous than images in the machine learning world, but that doesn’t mean they are less interesting to analyze. As we continue to complete and optimize audio analysis using modern machine learning and neural net approaches in the Wolfram Language, we are also excited to use it ourselves to build high-level applications in the domains of speech analysis, music understanding and many other areas.
