How Do YOU Type “wolfram”? Analyzing Your Typing Style Using Mathematica

Wouldn’t it be cool if you never had to remember another password again?

I read an article in The New York Times recently about using individual typing styles to identify people. A computer could authenticate you based on how you type your user name without ever requiring you to type a password.

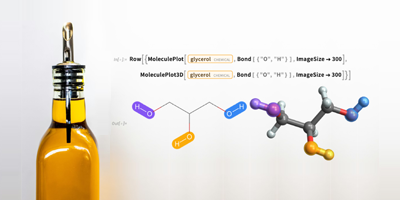

To continue our series of posts about personal analytics, I want to show you how you can do a detailed analysis of your own typing style just by using Mathematica!

Here’s a fun little application that analyzes the way you type the word “wolfram.” It’s an embedded Computable Document Format (CDF) file, so you can try it out right here in your browser. Type “wolfram” into the input field and click the “save” button (or just press “Enter” on your keyboard). A bunch of charts will appear showing the time interval between each successive pair of characters you typed: w–o, o–l, l–f, f–r, r–a, and a–m. Do several trials: type “wolfram,” click “save,” rinse, and repeat (if you make a typo, that trial will just be ignored).
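To make the interval computation concrete, here is a minimal Python sketch. It is not the CDF's own code. It assumes each trial is recorded as a list of (character, timestamp-in-seconds) pairs, which matches how the Mathematica code later in the post appears to treat the data when it takes `Differences` of the second element of each keystroke. The function names are hypothetical.

```python
from typing import List, Optional, Tuple

WORD = "wolfram"


def pair_labels(word: str = WORD) -> List[str]:
    """Names of the successive letter pairs: 'w-o', 'o-l', ..."""
    return [f"{a}-{b}" for a, b in zip(word, word[1:])]


def trial_intervals(
    keystrokes: List[Tuple[str, float]], word: str = WORD
) -> Optional[List[float]]:
    """Gaps in seconds between successive keystrokes of one trial.

    Returns None when the typed characters don't spell `word` exactly,
    so a trial containing a typo can simply be skipped.
    """
    typed = "".join(ch for ch, _ in keystrokes)
    if typed != word:
        return None
    times = [t for _, t in keystrokes]
    # Successive timestamp differences: one value per letter pair.
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Usage with made-up timestamps: keep only the error-free trials.
raw_trials = [
    [("w", 0.0), ("o", 0.5), ("l", 1.0), ("f", 1.5), ("r", 2.0), ("a", 2.25), ("m", 2.5)],
    [("w", 0.0), ("p", 0.1)],  # typo, so this trial is ignored
]
trials = [iv for iv in map(trial_intervals, raw_trials) if iv is not None]
```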

After a few trials the bar chart showing the average intervals should stop changing much between trials. Here’s what mine looked like after five trials:

You can see in the history panel on the top right that each trial has a fairly consistent profile. The time series plots in the middle show how the interval for each letter pair changed from trial to trial. The statistics above each time series plot show that the standard deviation is hovering around 10 milliseconds (ms). That’s pretty consistent! Maybe that’s because I typed “wolfram” about a thousand times while preparing this blog post. :)

Here’s what it looked like after 50 trials:

Notice in the plot on the bottom left how the interval distribution is basically bimodal, with o–l (green) and f–r (red) hovering around 150 ms, while all the other key pairs (w–o, l–f, r–a, and a–m) are clustered around 75 ms. That’s interesting because o–l and f–r are both key pairs that are typed with the same finger on the same hand.

So what about letters involving the same hand but different fingers? Well, the r–a interval requires me to hit “r” with my left index finger and then “a” using my left pinky, and it’s one of the fastest intervals for me at an average of 70 ms.

So it seems like I can transition between letters quickly using the same hand, as long as it’s using different fingers. But as soon as I’m using the same finger for a transition, it takes about twice as long.
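The same-finger observation can be checked mechanically. This Python sketch assumes the standard QWERTY touch-typing finger assignment, which not everyone uses. It classifies each transition in "wolfram". The `FINGER` table and `transition_kind` helper are hypothetical names for illustration.

```python
# Assumed standard QWERTY touch-typing assignment: letter -> (hand, finger).
FINGER = {
    "w": ("left", "ring"),
    "o": ("right", "ring"),
    "l": ("right", "ring"),
    "f": ("left", "index"),
    "r": ("left", "index"),
    "a": ("left", "pinky"),
    "m": ("right", "index"),
}


def transition_kind(a: str, b: str) -> str:
    """Classify the move from key a to key b under the assumed assignment."""
    hand_a, finger_a = FINGER[a]
    hand_b, finger_b = FINGER[b]
    if hand_a != hand_b:
        return "different hands"
    if finger_a != finger_b:
        return "same hand, different fingers"
    return "same finger"


word = "wolfram"
kinds = {f"{a}-{b}": transition_kind(a, b) for a, b in zip(word, word[1:])}
for pair, kind in kinds.items():
    print(pair, kind)
```

Under this assumption, only o–l and f–r come out as same-finger transitions. Those are the two slow pairs in the charts above.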

Now I’m curious whether faster transitions have more or less fluctuation. Do you pay a price for speed with more inconsistency? I’d like to see a plot of interval fluctuation versus interval average. The measure of fluctuation that I think makes the most sense here is the standard deviation divided by the mean (called the relative standard deviation, or coefficient of variation), which gives the fluctuation as a fraction of the mean.

To do this analysis I’ll need the raw data for all 50 trials, so I click the “data” tab and then click “copy to clipboard”:

After pasting the data into a notebook and assigning it to a variable called keydata, I get a list of trials for each interval like so:

For example, here’s the w–o interval for all 50 trials (in seconds):

Now I create pairs of the form {mean, sd/mean} for each interval:
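For readers following along outside Mathematica, here is a Python sketch of the same {mean, sd/mean} step. It assumes one person's data is a list of six-element interval vectors, one per trial. Transposing that list gives one series per letter interval. Python's `statistics.stdev` uses the n − 1 sample formula, as Mathematica's `StandardDeviation` does.

```python
import statistics
from typing import List, Sequence, Tuple


def mean_and_rsd(trials: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """One (mean, sd/mean) pair per letter interval.

    zip(*trials) transposes the per-trial vectors into one series per
    interval (w-o, o-l, ...). At least two trials are needed for stdev.
    """
    pairs = []
    for series in zip(*trials):
        m = statistics.mean(series)
        sd = statistics.stdev(series)  # sample (n - 1) standard deviation
        pairs.append((m, sd / m))
    return pairs
```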

And plot them together with a linear fit:

![Labeled[ListPlot[List /@ meanrelativesd, Frame → True, FrameStyle → Gray, FrameTicksStyle → Black, AxesOrigin → {0, 0}, PlotRange → {{0.04, 0.17}, {0, 0.45}}, PlotMarkers → (MapThread[{Graphics[{#2, Circle[{0, 0}], Text[#1]}], 0.12} &, {pchars, colors}]), FrameTicks → {{Range[0.1, 0.4, 0.1], None}, {{#, Round[1000*#]} & /@ Range[0.05, 0.2, 0.05], None}}, Epilog → {Red, Opacity[0.8], Thickness[0.004], Line[{{0.05, y[0.05]}, {0.16, y[0.16]}}]}], {Style["relative standard deviation", FontFamily → "Helvetica"], Row[{Style["mean", FontFamily → "Helvetica"], Style["(ms)", Gray, FontFamily → "Helvetica"]}, " "]}, {Left, Bottom}, RotateLabel → True]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In14.png)

There does appear to be lower relative fluctuation for the slower intervals o–l and f–r, at least for my typing style.
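One way to put a number on that trend is the slope of an ordinary least-squares line through the (mean, relative SD) points. A negative slope means slower intervals fluctuate less in relative terms. The post doesn't show how its fitted line `y` was built, so this plain-Python least-squares fit is an illustrative stand-in.

```python
from typing import Sequence, Tuple


def linear_fit(points: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least-squares line y = a + b*x; returns (intercept a, slope b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx  # requires at least two distinct x values
    return mean_y - slope * mean_x, slope


def fitted(a: float, b: float, x: float) -> float:
    """Evaluate the fitted line at x (the role y[x] presumably plays in the plot)."""
    return a + b * x
```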

The question we really want to answer is whether people can be identified just by the way they type. How could we test that?

Well, it just so happens that Wolfram is a company full of data nerds just like me, so I sent out an email asking people to do a bunch of trials with this interface and send me their data. A total of 42 people responded:

![wolframdata = ReadList[FileNameJoin[{NotebookDirectory[], "wolfram-data.txt"}], Expression];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In15.png)

People did 12 trials on average—some did as many as 30, and others only did a few:

Here are the average interval charts for each person. You can see there’s quite a range of profiles:

![Grid@Partition[keychart[#, ImageSize → 50, ChartStyle → (Directive[Opacity[0.6], #] & /@ colors)] & /@ meanswolfram, 7, 7, 1, {}]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In20.png)

And here’s the combined distribution for everyone:

![intervalswolfram = Flatten /@Transpose[Transpose[Differences /@ #[[All, All, 2]]] & /@ wolframdata];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In22.png)

![Histogram[Flatten[intervalswolfram], 300, PlotRange → {{0, 0.4}, All}, PerformanceGoal → "Speed", Frame → True, FrameStyle → Gray, FrameTicksStyle → Black, FrameTicks → {{None, None}, {{#, Round[1000*#]} & /@ FindDivisions[{#1, #2, 0.1}, 4] &, None}}, PlotRangePadding → {{Automatic, Automatic}, {None, Scaled[0.1]}}]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In25.png)

There’s a hint of the same bimodal structure as there was for my 50 trials alone. But which letter intervals are contributing to each peak? We can use ChartLayout → "Stacked" to find out:

![legend = Grid[{MapThread[Row[{Graphics[{#, EdgeForm[Darker[#]], Opacity[0.6], Rectangle[]}, ImageSize → 15], nicelabel@#2}, " ", Alignment → {Left, Baseline}] &, {colors, pchars}]}, Alignment → {Left, Baseline}, Spacings → {1, Automatic}]; Column[{Labeled[Histogram[intervalswolfram, 300, PlotRange → {{0, 0.4}, All}, ChartStyle → colors, ChartLayout → "Stacked", PerformanceGoal → "Speed", Frame → True, FrameStyle → Gray, FrameTicksStyle → Black, FrameTicks → {{None, None}, {{#, Round[1000*#]} & /@ FindDivisions[{#1, #2, 0.1}, 4] &, None}}, PlotRangePadding → {{Automatic, Automatic}, {None, Scaled[0.1]}}, ImageSize → 400, ImagePadding → {{10, 10}, {20, 5}}], Row[{Style["interval", FontFamily → "Helvetica"], Style["(ms)", Gray, FontFamily → "Helvetica"]}, " "], Bottom], legend}, Alignment → Center, Spacings → {Automatic, 1}]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In26.png)

It looks like the o–l (green) and f–r (red) transitions are centered around 150 ms, with the others centered around 75 ms, just like they were for me.

What about the relative fluctuations? Now that we have data for the Wolfram population, we can see if that trend still holds:

![meanrelativesdwolfram = Mean /@ Transpose[{Mean@#, StandardDeviation@#/Mean@#} & /@ Transpose[Differences /@ #[[All, All, 2]]] & /@ wolframdata];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In27.png)

![Labeled[ListPlot[List /@ meanrelativesdwolfram, Frame → True, FrameStyle → Gray, FrameTicksStyle → Black, AxesOrigin → {0, 0}, PlotRange → {{0.09, 0.18}, {0, 0.45}}, PlotMarkers → (MapThread[{Graphics[{#2, Circle[{0, 0}], Text[#1]}], 0.12} &, {pchars, colors}]), FrameTicks → {{Range[0.1, 0.4, 0.1], None}, {{#, Round[1000*#]} & /@ Range[0.05, 0.2, 0.05], None}}, Epilog → {Red, Opacity[0.8], Thickness[0.004], Line[{{0.05, wy[0.05]}, {0.18, wy[0.18]}}]}], {Style["relative standard deviation", FontFamily → "Helvetica"], Row[{Style["mean", FontFamily → "Helvetica"], Style["(ms)", Gray, FontFamily → "Helvetica"]}, " "]}, {Left, Bottom}, RotateLabel → True]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In32.png)

Yes, indeed! There does seem to be a trend of lower relative fluctuations for longer intervals. So it seems like, in general, the slower you type a transition, the more consistent you’ll be relative to its length.

Now let’s test how well these individuals can be identified by their typing styles.

The simplest way to test this is to take the trials from two people and see if a clustering algorithm can separate the trials cleanly into two distinct groups.

For example, here are the trials from two of the individuals (person 1 and person 6):

![indexed = MapIndexed[With[{i = #2[[1]]}, MapIndexed[With[{j = #2[[1]]}, Differences@# → {i, j}] &, #[[All, All, 2]]]] &, wolframdata];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In33.png)

![Grid[{#[[1, 2, 1]], Framed@Grid[Partition[Map[keychart[#, ImageSize → 30] &, #[[All, 1]]], 12, 12, 1, {}], Spacings → {-0.05, 0.1}]} & /@ indexed[[{1, 6}]], Alignment → Left]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In34.png)

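Each trial (represented here by a bar chart) consists of a vector of six numbers, one for each letter interval: (w–o, o–l, l–f, f–r, r–a, a–m). So each trial can be thought of as a point in six dimensions, and the dissimilarity of two trials can be measured by the Euclidean distance between them. Here each vector is first scaled to unit length with Normalize, so the comparison reflects the shape of a person’s timing profile rather than their overall speed: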
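For reference, here is a minimal Python sketch of that distance. It mirrors `EuclideanDistance[Normalize@#1, Normalize@#2]` from the `FindClusters` calls in this post. Because each vector is scaled to unit length first, two trials with the same rhythm typed at different overall speeds end up at distance 0.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length (intervals are positive, so the norm is nonzero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def profile_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between the unit-length versions of two interval vectors."""
    return math.dist(normalize(u), normalize(v))
```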

![FindClusters[Join[indexed[[1]], indexed[[6]]], 2, DistanceFunction → (EuclideanDistance[Normalize@#1, Normalize@#2] &)][[All, All, 1]]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In35.png)

FindClusters is able to partition these trials perfectly, so that all trials from person 1 are in the first cluster and all trials from person 6 are in the second cluster.
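The post doesn’t say which clustering method `FindClusters` used here. As a stand-in, the Python sketch below uses Lloyd’s k-means with k = 2 on unit-normalized trial vectors. It is seeded deterministically with the first point and the point farthest from it. It illustrates the idea of splitting two people’s pooled trials into two groups, but it will not necessarily reproduce `FindClusters`’ results.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def two_means(points: Sequence[Sequence[float]], max_iter: int = 100) -> List[int]:
    """Label each trial 0 or 1 with Lloyd's k-means (k = 2) on unit-normalized vectors."""
    pts = [normalize(p) for p in points]
    # Deterministic seeds: the first point and the point farthest from it.
    centers = [pts[0], max(pts, key=lambda p: math.dist(p, pts[0]))]
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: nearest center (ties go to cluster 0).
        new_labels = [
            0 if math.dist(p, centers[0]) <= math.dist(p, centers[1]) else 1
            for p in pts
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each center to the mean of its members.
        for k in (0, 1):
            members = [p for p, lab in zip(pts, labels) if lab == k]
            if members:
                centers[k] = [sum(xs) / len(members) for xs in zip(*members)]
    return labels
```

To use it, concatenate two people’s trials and compare the returned labels with who actually typed each trial.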

But if we try the same thing with person 1 and person 7, the majority of trials for both 1 and 7 end up in the second cluster, so they actually cluster quite poorly:

![Grid[{#[[1, 2, 1]], Framed@Grid[Partition[Map[keychart[#, ImageSize → 30] &, #[[All, 1]]], 12, 12, 1, {}], Spacings → {-0.05, 0.1}]} & /@ indexed[[{1, 7}]], Alignment → Left]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In36.png)

![FindClusters[Join[indexed[[1]], indexed[[7]]], 2, DistanceFunction → (EuclideanDistance[Normalize@#1, Normalize@#2] &)][[All, All, 1]]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In37.png)

And here’s an intermediate case, where the clustering algorithm is able to mostly distinguish between person 1 and person 9, but not perfectly:

![Grid[{#[[1, 2, 1]], Framed@Grid[Partition[Map[keychart[#, ImageSize → 30] &, #[[All, 1]]], 12, 12, 1, {}], Spacings → {-0.05, 0.1}]} & /@ indexed[[{1, 9}]], Alignment → Left]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In38.png)

![FindClusters[Join[indexed[[1]], indexed[[9]]], 2, DistanceFunction → (EuclideanDistance[Normalize@#1, Normalize@#2] &)][[All, All, 1]]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In39.png)

To quantify the clustering quality, we’ll use a score based on the Rand index (see the attached notebook for the implementation). With this score, a perfect partitioning gets a 1:
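The post’s `randclusterquality` isn’t shown. It gives 0 for a split that carries no information about who typed what, whereas the plain Rand index of such a split is typically well above 0. That suggests it is some rescaled or adjusted variant. As an illustration only, this Python sketch computes both the plain Rand index and the adjusted Rand index of Hubert and Arabie. Its input uses the same format as the post’s `randclusterquality` calls: a list of clusters, each listing the person label of every trial it contains.

```python
from collections import Counter
from math import comb
from typing import Hashable, Sequence, Tuple

Clusters = Sequence[Sequence[Hashable]]


def _pair_counts(clusters: Clusters) -> Tuple[int, int, int, int]:
    """Pair counts: (same cluster and same person, same cluster, same person, all pairs)."""
    n = sum(len(c) for c in clusters)
    total = comb(n, 2)
    same_cluster = sum(comb(len(c), 2) for c in clusters)
    sizes = Counter(label for c in clusters for label in c)
    same_person = sum(comb(s, 2) for s in sizes.values())
    same_both = sum(comb(k, 2) for c in clusters for k in Counter(c).values())
    return same_both, same_cluster, same_person, total


def rand_index(clusters: Clusters) -> float:
    """Fraction of trial pairs on which the clustering agrees with who typed them."""
    both, same_cluster, same_person, total = _pair_counts(clusters)
    apart_in_both = total - same_cluster - same_person + both
    return (both + apart_in_both) / total


def adjusted_rand_index(clusters: Clusters) -> float:
    """Rand index corrected for chance: 1 when perfect, about 0 (or negative) when random."""
    both, same_cluster, same_person, total = _pair_counts(clusters)
    expected = same_cluster * same_person / total
    maximum = (same_cluster + same_person) / 2
    # Undefined (division by zero) when both partitions are trivial.
    return (both - expected) / (maximum - expected)
```

Passing the cluster lists from the 1-versus-9 and 1-versus-7 examples to both functions is a quick way to see how each measure treats a mostly correct split and an uninformative one.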

The 1 versus 9 cluster gets a score of about 78%:

![randclusterquality[{{1, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9}, {1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 9, 9, 9}}]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In42.png)

And the 1 versus 7 cluster gets a score of 0, because the clustering doesn’t help distinguish between the two people at all:

![randclusterquality[{{1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 7, 7}, {1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 7, 7, 7, 7, 7, 7}}]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In43.png)

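Now, since there are only 42 people, let’s just use brute force and cluster every ordered pair of distinct people. Outer generates all 42 × 42 = 1,764 combinations, and dropping the 42 self-pairs leaves 1,722 pairwise clusterings: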
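The brute-force loop itself is simple. This hypothetical Python helper scores every ordered pair of distinct people with whatever pairwise score function you pass in, for example a clustering-quality function like the post’s `clusterquality`. It keeps both (i, j) and (j, i) because the clustering result can depend on the order in which the trials are given.

```python
from itertools import permutations
from typing import Callable, Dict, Hashable, Tuple


def pairwise_scores(
    people: Dict[Hashable, list],
    score: Callable[[list, list], float],
) -> Dict[Tuple[Hashable, Hashable], float]:
    """Score every ordered pair (i, j) of distinct people; self-pairs are skipped."""
    return {(i, j): score(people[i], people[j]) for i, j in permutations(people, 2)}
```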

![clusterquality[data1_, data2_] := randclusterquality@ FindClusters[Join[data1, data2], 2, DistanceFunction → (EuclideanDistance[Normalize@#1, Normalize@#2] &)][[All, All, 1]]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In44.png)

![pairclusters = DeleteCases[Outer[{#1[[1, 2, 1]], #2[[1, 2, 1]]} → clusterquality[#1, #2] &, indexed, indexed, 1], HoldPattern[{i_, i_} → _], 2];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In46.png)

The average cluster quality is about 67%:

Here’s the distribution of Rand quality scores:

![Histogram[Flatten[pairclusters][[All, -1]], 30, Frame → True, FrameStyle → Gray, FrameTicksStyle → Black, FrameTicks → {{Automatic, None}, {Automatic, None}}, PerformanceGoal → "Speed"]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In49.png)

And here is the matrix of all pairwise cluster scores, where matrix element {i, j} gives the Rand score for the clustering of trials for person i and person j. Darker is a higher score, and white is a score of 0 (all elements along the diagonal are by definition 0 because it’s impossible to distinguish between two identical sets of trials):

(Note that the matrix isn’t perfectly symmetric—that’s because the ordering of data points given to FindClusters can change the results.)

You can see there are rows with lots of white cells. Those are individuals whose trials tend to cluster poorly against everyone else’s. If we take the average score for each person, we can see the poorly clustering ones as low points:
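Averaging a person’s scores is short in Python. The post doesn’t show how `meanscoreperperson` is computed. This sketch assumes each person’s average is taken over their row of the matrix only, with the diagonal excluded. It also assumes the scores are stored in a dictionary keyed by ordered (i, j) pairs.

```python
from typing import Dict, Hashable, List, Tuple


def mean_score_per_person(
    scores: Dict[Tuple[Hashable, Hashable], float],
) -> Dict[Hashable, float]:
    """Average each person's row (i, j), j != i, of the pairwise score matrix."""
    rows: Dict[Hashable, List[float]] = {}
    for (i, j), s in scores.items():
        if i != j:  # ignore diagonal entries if present
            rows.setdefault(i, []).append(s)
    return {i: sum(v) / len(v) for i, v in rows.items()}
```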

![ListPlot[meanscoreperperson, Filling → Axis, PlotRange → {All, {0, 1}}, Frame → True, FrameTicks → {{Automatic, None}, {Automatic, None}}, FrameStyle → Gray, FrameTicksStyle → Black]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In54.png)

I suspect the poorly clustering people tend to be less consistent in their trials, which makes it harder for the clustering algorithm to find a clean partition. Let’s test that by quantifying a person’s typing consistency using a measure of the scatter of their trials in 6D space:
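The post doesn’t show `ballradius`, so the Python definition below is a hypothetical stand-in: the root-mean-square distance of a person’s unit-normalized trial vectors from their centroid. Someone who types every trial with the same rhythm gets a radius near 0, and more erratic typists get larger values. The pair value is the mean of the two people’s radii, as in the post’s `Mean[# /. pair2scatter]`.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def ball_radius(trials: Sequence[Sequence[float]]) -> float:
    """Hypothetical scatter measure: RMS distance of normalized trials from their centroid."""
    pts = [normalize(t) for t in trials]
    n = len(pts)
    centroid = [sum(xs) / n for xs in zip(*pts)]
    return math.sqrt(sum(math.dist(p, centroid) ** 2 for p in pts) / n)


def pair_scatter(trials_a: Sequence[Sequence[float]], trials_b: Sequence[Sequence[float]]) -> float:
    """Average scatter of two people, used as the x-coordinate for that pair."""
    return (ball_radius(trials_a) + ball_radius(trials_b)) / 2
```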

![pairscatter = Outer[{#1[[1, 2, 1]], #2[[1, 2, 1]]} → {ballradius[#1[[All, 1]]], ballradius[#2[[All, 1]]]} &, indexed, indexed, 1];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In56.png)

Now that we have a measure of scatter, we plot cluster score versus the average scatter for each pair:

![qualscatter = DeleteCases[Flatten[Map[# → {Mean[# /. pair2scatter], # /. indices2clusterquality} &, Table[{i, j}, {i, 1, 42}, {j, 1, 42}], {2}], 1], HoldPattern[{i_, i_} → _]];](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In58.png)

![Labeled[ListPlot[qualscatter[[All, 2]], Epilog → {Red, Line[{{0, 0}, {1, 1}}]}, Frame → True, FrameTicks → {{Automatic, None}, {Automatic, None}}, PlotRange → {{0, 1}, {0, 1}}, PlotRangePadding → {{Scaled[0.05], Scaled[0.05]}, {Scaled[0.05], Scaled[0.05]}}, FrameStyle → Gray, FrameTicksStyle → Black], {Style["cluster quality", FontFamily → "Helvetica"], Style["scatter", FontFamily → "Helvetica"]}, {Left, Bottom}, RotateLabel → True]](https://content.wolfram.com/sites/39/2012/06/Keystrokes-In59.png)

There’s a clear trend here: pairs of people with lower scatter tend to have a higher cluster score (points lying above the red y = x line). So the ability of this method to identify you based on your typing style would require a certain amount of consistency in the way you type.

Using this fun little typing interface, I feel like I actually learned something about the way my colleagues and I type. Typing two letters in a row with the same finger on the same hand takes about twice as long as with different fingers. The faster a transition, the larger its fluctuation relative to its length. And the more your timing fluctuates, the harder it is to distinguish you from another person based on your typing style. Of course, we’ve really just scratched the surface of what’s possible and what would actually be necessary to build a keystroke-based authentication system. But we’ve uncovered some trends in typing behavior that would help in building such a system.

And hey, we just used the interactive features of Mathematica for real-time data acquisition! Being able to combine that with sophisticated functions like FindClusters for the subsequent data analysis allowed us to quickly find patterns and extract some meaningful conclusions about typing behavior from this dataset. Now I’m curious what other kinds of biometrics we can measure using Mathematica….

Download this ZIP file that includes the post as a CDF file, associated data files, and the code used above.

That was really cool! Thanks for the post!

Interesting study. However, I think that to conclude that “the time to type two letters with the same finger on the same hand takes twice as long as with different fingers,” you’d need to assume that the sample typists all type with both hands and use the same fingers for particular keys.

It is still better to take the human out of the equation. The right circumstances to do that are arriving.

While I most frequently touch-type, I also tend to log into my computer at the beginning of lunch with a sandwich in one hand, so even under normal circumstances I have two different typing styles. But more importantly, what about emergencies? I had an accident, and for a few days afterward I had to tell other people my password. For several days beyond that I was dopey when I was trying to log in and had a hard enough time getting my name and password entered correctly, so I’m sure my typing was drastically different.

You need to take into account the fact that people may be typing slightly slower or faster at given times.

For example, I may type “wolfram” at my fastest speed during one session, and type it slower during another session.

What perhaps doesn’t change, however, is the time between my keystrokes relative to the speed at which I’m typing.

I may type “wolfram” at a high speed one time and at a low speed another, but the time between keystrokes scales with my overall speed. So all I would have to do is rescale the intervals from slow to fast, and the data should begin to look the same again, regardless of how fast I’m typing.

A colleague of mine also noted that a user may switch to a different keyboard and then type differently for a while until they get used to it.

Physical factors such as a hand injury will also affect the data.

Very interesting. This immediately made me wonder what could be accomplished by doing the same thing with mouse movements. When someone picks a target for their mouse on the screen, the speed at and path through which they reach that target will vary based on how quickly they respond to visual feedback and how well trained they are with the mouse. You might be able to model that as a control system and characterize individuals according to their mouse movements.