Driving CUDA over the Grid

There are two great principles to Mathematica's parallel computing design. The first is that most of the messy plumbing that puts people off grid computing is automated (messaging, process coordination, resource sharing, fail-recovery, etc.). The second is that anything that can be done in Mathematica can be done in parallel.

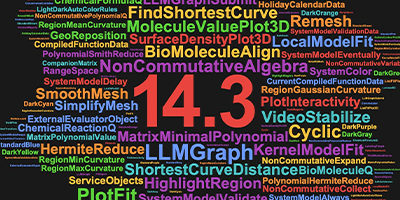

With this week's release of gridMathematica 8, which adds the 500+ new features of Mathematica 8 to the shared grid engine, one nice example brings together both ideas: driving CUDA hardware, in parallel, over the grid.

The use of GPU cards for technical computing is a hot topic at the moment, with the promise of cheap computing power. But the problem for both CUDA and OpenCL has been that they are relatively hard to program; they are well within the abilities of an expert programmer, but many of Mathematica's users are principally scientists or engineers, not programmers.

Mathematica 8 introduced a much improved workflow for controlling GPU cards by automating as much of the communication, control, and memory management as possible (as detailed in the white paper "Heterogeneous Computing in Mathematica 8"). These are exactly the principles we had already applied to CPU-based parallel computing.

So now we have a system that can automatically run any code in parallel, including code that automatically manages GPU hardware. And with the release of gridMathematica 8, we can automatically distribute those tasks over remote hardware. Here is how easy it can be.

Imagine that my organization has just purchased 50 regular PCs, each with a single 512-core GPU on board and each connected somewhere on my network. I want to evaluate a financial instrument half a million times, and I want it to be fast, so I want to use all 25,600 of those cores. (Note that this trivial example is only meant as an illustration.)
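The scale of this hypothetical setup works out as follows, assuming for simplicity that the work is split evenly across the machines (in practice the parallel scheduler decides the actual split):

```latex
50\ \text{PCs} \times 512\ \tfrac{\text{cores}}{\text{PC}} = 25{,}600\ \text{cores},
\qquad
\frac{500{,}000\ \text{evaluations}}{50\ \text{PCs}} = 10{,}000\ \tfrac{\text{evaluations}}{\text{PC}}
```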

Step 1: Call my system administrator and ask for permission to use the hardware, and also ask him to install gridMathematica and Wolfram Lightweight Grid Manager on the cluster. I will only need to do this once (if I have a good system administrator!).

Step 2: Tell my normal Mathematica installation to use this cluster when it is given parallel tasks. It takes three clicks, plus typing in the number of kernels I want from each machine. If that sounds hard, watch the screencast I made doing just that. I will only need to do this once.

Step 3: Write the parallel, CUDA-enabled code to break the task up, distribute each subtask to each remote PC, place it onto its GPU card, run it there, take the result off the GPU card, return the values back to my local PC, re-allocate tasks (should a machine crash or otherwise go offline), and coordinate them into the result set. Sound hard? Here is the code.

Most of the code is describing the valuation parameters. All the complicated work is automated behind the first two lines. Notice how the code doesn’t say where the remote hardware is, or how many subtasks each machine has to run. I can share that code with someone with a different cluster of PCs, and it will scale automatically.
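A minimal sketch of the same pattern, assuming made-up contract terms and market data (a sweep of strike prices for a European call) and using the built-in CPU function `FinancialDerivative` as the valuation step. `callValue` and `strikes` are hypothetical names chosen for illustration. On nodes with CUDALink, its GPU counterpart `CUDAFinancialDerivative` could take the place of the CPU call, but check its documentation for the exact argument forms before relying on that.

```mathematica
(* Hypothetical example data: one European call per strike price *)
strikes = Range[40., 60., 0.5];

(* Value a single contract with the built-in CPU function FinancialDerivative;
   the expiration and market data are illustrative values only *)
callValue[k_?NumericQ] := FinancialDerivative[{"European", "Call"},
   {"StrikePrice" -> k, "Expiration" -> 1},
   {"InterestRate" -> 0.05, "Volatility" -> 0.2, "CurrentPrice" -> 50.}];

(* Make sure every configured kernel, local or remote, knows callValue *)
DistributeDefinitions[callValue];

(* ParallelMap splits the list among whatever kernels are available *)
values = ParallelMap[callValue, strikes]
```

Nothing in the sketch names the remote machines or says how many values each one should compute; `ParallelMap` uses whichever kernels were configured in Step 2.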

Of course, this is a trivial example, with entirely independent evaluations and predictable hardware. If I want to run my own CUDA or OpenCL programs, instead of built-in, high-level CUDA-enabled commands, then it takes one extra line of code plus my CUDA code. And if my PCs have more than one GPU, then there is one more line of code needed to allocate which GPU the task runs on. But little, in principle, changes for more complex real-world problems.

In a way, this blog post mirrors the way we want technical computing to be: 650 words of ideas wrapped up in 7 lines of code. Look at any other solution for doing this and you will find that it needs much more code for the same ideas.

Now if only I could get Mathematica to run looped calculations as fast as a C++ program. I used a Nest command to increase a variable’s value by unity at every stage of the loop, for a certain number of steps. It was slower than a C++ program by a factor of 100! That really made me sad.

“But the problem for both CUDA and OpenCL has been that they are relatively hard to program; they are well within the abilities of an expert programmer …”

… and almost all that this expert has to program is this very plumbing, not what I would call FEATURES! These experts spend most of their effort on low-level software-engineering tasks rather than on designing useful functionality. The beauty of this in M is that it's a GENERIC approach: the CUDA package is basically a generic interface where the plumbing has been outsourced.

In many cases it is now a better solution not to write a C++ application that uses CUDA, but instead to write a Java or C# application and use J/Link or .NET/Link to call into CUDA through M. This uses only high-level languages, high-level interfaces, and generic CUDA access, which means much faster development and fewer errors.

This is simply amazing! Parallel computing is finally made public!

Do I need to have Mathematica 8 installed on ALL other computers?

Or do I have to install gridMathematica 8 on each?

If I need Mathematica 8 on each machine, does that mean I have to buy a license for each computer I will use?

@Rudro

To improve the performance of low-level programming, such as loops, you should learn as much as you can about the Compile function.

http://reference.wolfram.com/mathematica/ref/Compile.html
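A minimal sketch of the difference for the loop described in the question, assuming it simply counts up from 0 in n steps. `nestCount` and `compiledCount` are hypothetical names; actual speedups depend on the machine and on the code being compiled, so no timings are claimed here.

```mathematica
(* Uncompiled: Nest applies the increment function n times *)
nestCount[n_Integer] := Nest[# + 1 &, 0, n];

(* Compiled: the same counting loop, compiled for the Wolfram virtual machine *)
compiledCount = Compile[{{n, _Integer}}, Module[{i = 0}, Do[i++, {n}]; i]];

(* Time both versions; each result has the form {seconds, n} *)
AbsoluteTiming[nestCount[10^6]]
AbsoluteTiming[compiledCount[10^6]]
```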

If you really need C code, you can also use the Compile function to generate parallel C code, compile it, and link it back into the kernel using LibraryLink, all in one line of code. It gives you essentially C performance, but with much of the convenience of Mathematica.

Like everything else, you can distribute this using gridMathematica. So you can use gridMathematica to distribute your Mathematica code, compile it locally on each node (targeted to the local hardware and OS), run it there, and retrieve the results. It would look something like this:

```mathematica
(* myCode is a placeholder for your own numerical code; each kernel compiles its own C copy *)
ParallelEvaluate[myfn = Compile[{{x, _Real}}, myCode[x], CompilationTarget -> "C", Parallelization -> True]];
ParallelTable[myfn[x], {x, 0, 1000000}]
```

@Robert

You need to have a Mathematica Compute Kernel available for each machine but that is not the same as a license.

A typical Mathematica license currently includes 1 Control Kernel (required for controlling parallel computations) and 4 Compute Kernels. Each gridMathematica license includes 16 Compute Kernels. So, for example, a 5-process Mathematica license plus gridMathematica would have a total of 5 Control Kernels and 36 Compute Kernels. One person could run a computation over 36 remote computers, or 5 people could each control 7 remote computers.
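The kernel totals in this example follow from the per-license counts above:

```latex
\underbrace{5 \times 4}_{\text{Mathematica}} + \underbrace{16}_{\text{gridMathematica}} = 36\ \text{Compute Kernels},
\qquad
5\ \text{users} \times 7\ \text{kernels} = 35 \le 36
```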

Not really, Samuel.

The bulk of these capabilities were present in Mathematica 7. What's new here is the GPU support, not the overall ideas.

For the AVERAGE person, in fact, Mathematica 8 is a substantial step backwards in cluster computing.

Math7 allowed one to run it on a home cluster consisting of two or three old/family machines one had sitting around the house.

Math8 expressly forbids this, both in the license and technically in the way the software sniffs the network to look for “copies”.

To address Maynard Handley’s comments –

– Mathematica does not sniff the local network for other Mathematica installs.

– I haven’t put the version 7 and 8 license agreements alongside each other, but reviewing my emails on the changes we did make, none of them relate to this. You can do exactly what you describe with a Network license of Mathematica, but the Single Machine license is licensed only for installation on a single machine and has been for as long as I remember. The gridMathematica product is a network licensed product.

– There were changes to the way that passwords and activation happened as part of the process to automate this and upgrades through the user portal which do mean that you can no longer use the same Single Machine activation key many times.

Distributed processing is my passion. Thanks for the compilation.