Stephen Wolfram Discusses Making the World’s Data Computable

Wolfram Research and Wolfram|Alpha hosted the first Wolfram Data Summit in Washington, DC this September. Leaders of the world’s primary data repositories attended the summit, exchanging experiences and brainstorming ideas for the future of data collection, management, and dissemination.

In his keynote speech, Stephen Wolfram discussed the complex nature of gathering systematic knowledge and data together. He also talked about the creation of Wolfram|Alpha, how Mathematica helps with the challenges of making all data computable, and what we can expect moving forward. The transcript is available below.

Making the World’s Data Computable

Well, I should start off by admitting one thing.

This Data Summit was my idea.

And I have to say that the #1 reason I wanted to have it was so I could have a chance to meet you all.

So… thanks for coming, and I hope I will have a chance to meet you all!

Well, I’ve been a collector and an enthusiast of systematic data for about as long as I can remember.

But in the last few years, I’ve launched into what one might think of as the ultimate extreme data project.

It’s actually something I’ve been thinking about since I was a kid.

The idea is: take all the systematic knowledge—and data—that our civilization has accumulated, and somehow make it computable.

Make it so that given any specific question one wants to ask, one can just compute the answer on the basis of that knowledge and data.

Well, every so often I’d think about this again. And it’d always just seem too big and too difficult. And like it was at least decades in the future.

But two things happened in my life.

The first was Mathematica.

I actually started working on its precursors in 1979, but in 1988 we finished the first version of Mathematica, and launched it.

And from the beginning, the idea of Mathematica was to be a language that could encode any kind of formal knowledge or process.

It covered traditional mathematical things. But it also covered in a very general way anything else that could be represented in any kind of symbolic form.

And in the course of two decades, we’ve developed in Mathematica an absolutely giant web of algorithms—all set up in a very coherent way, and all organized so that as much as possible that one might want to do can be automated.

Well, so if one could somehow corral all that knowledge and data about the world into computable form, we would have a very powerful engine that can compute with it.

But still, it seemed as if there was just too much stuff out there to actually be able to do this.

And really it took 25 years of basic science work, and a pretty major paradigm shift, for me to start thinking differently about this.

Back in the early 1980s, as a result of a convergence of several scientific interests, I’d started studying what one can call the “computational universe” of possible programs.

Normally we think of programs as being things we build for particular purposes; to perform particular tasks.

But I was interested in the basic science of what the whole universe of possible programs is like.

And when I started kind of pointing my “computational telescope” out into that computational universe of possible programs, I discovered an amazing thing—completely at odds with my existing intuition. I discovered that even very simple programs out in the computational universe can behave in ways that are in a sense arbitrarily complex.

Well, that discovery launched me on building what has become a whole new kind of science.

That I think explains some of the major secrets of nature. But that also starts us thinking differently about just what it takes to create—and represent—all sorts of richness that we see in the world.

And it was from this that I came to start thinking that in the first decade of the 21st century perhaps it wasn’t so completely crazy to start trying to make the world’s knowledge computable.

And the great thing was that our practical situation—in terms of practical technology, business success, and people—was such that we could actually give this a try.

And the result has been our very ambitious project Wolfram|Alpha—which we finally exposed for the first time to the world a little over a year ago.

I’m hoping most of you have had a chance to use Wolfram|Alpha.

But let me show you the basic idea.

The point is: you type in a question, however you think of it.

male age 50 finland life expectancy

Then Wolfram|Alpha uses its built-in knowledge to try to compute an answer.

Actually, really, to generate a kind of report about whatever you asked about.

Well, of course, Wolfram|Alpha is a very long-term—a never-ending—project.

But we’ve been steadily going further and further, adding more and more domains of knowledge.

red + teal

how many goats in spain

car production in spain

Well, I have to say that when I started on this project, I wasn’t at all sure that it was going to work.

In fact, I had all sorts of arguments for why it might not.

But I’m happy to say that after watching it out in the world for a year, I think it’s pretty clear that, yes, this is the right time in history.

With the whole tower of technology and ideas that we’ve assembled over the past 20 years or so—and everything that we can get from the outside world—it is actually possible to make knowledge computable.

But, OK, so what’s actually involved?

What’s inside? How does it work? And what’s coming in the future?

You know, Wolfram|Alpha is really quite a complicated thing. I’ve worked on some pretty complicated things in my life. But Wolfram|Alpha is in a different league from anything.

In terms of the number of moving parts that all have to work smoothly inside it. In terms of the sheer size of what has to be done. And in terms of the number of different kinds of expertise that are needed to make it all work.

But I guess one can divide what has to be done into four big parts.

First, there’s all the data—and curating it to the point where it’s systematically computable.

I’ll talk a lot more about that in a minute.

But after you’ve got the data… well, then to answer actual specific questions, you have to compute with it.

So you have to implement all those methods, and models, and algorithms that science and everything else have given us.

Well, then, you have to figure out how to communicate with humans. You have to be able to understand the questions people are asking.

To take the kind of natural language people enter, and turn it into something precise that can be computed from.

And then at the end, after you’ve done your computing, you have to figure out how to present the results with tables and plots and whatever, to make it maximally easy for humans to absorb.

And beyond that, of course, there are practicalities. You’ve got to reliably do all those crunchy computations all the time, and consistently deliver everything through the web or wherever.

But that’s just the kind of thing Mathematica is perfect for. And of course in the end Wolfram|Alpha is just a big—actually now about a 10-million-line—Mathematica program, together with lots of data, and webMathematica servers and so on.

And that’s what produces all those results that all those people around the world are now relying on us for.

Well, OK, so back to the data side of things, because that’s the big topic here.

You know, it’s been really interesting. Taking all the thousands of domains we’ve tackled. And setting them up so that they’re computable.

So one’s not just dealing with raw data. But with computable data. That can directly and automatically be used to answer questions people throw in.

So. What have we learned?

Well, the first lesson is: the sources really matter.

The idea that in the modern world you can just go to the web and forage for a bunch of data and then use it, is just plain wrong.

What we’ve found is that if you actually want to get things right—if you actually want to get the right answer, and you want to have something that you can really build on—then you have to start with good reliable data.

And usually the only way to get that is to go to definitive primary sources of data.

Which is exactly how we’ve come to meet many of you.

But OK, so what happens after we’ve identified great primary sources of data?

Well, just structurally getting the data into our system is usually trivial.

A matter of minutes to hours. I mean, Mathematica imports pretty much any conceivable format—including some very obscure and specialized ones.

And with a little more effort we can also hook up to pretty much any kind of real-time feed for data.

But that’s the easy part. Kind of the 5% part. The hard part—the 95% part—is getting that data so that it’s really connectable to other things—to other data, to linguistics, and to other computations.

Sometimes the original data that comes in is basically very clean. Sometimes it’s a mess. Sometimes it has to be carefully assembled from many different sources.

It’s different every time.

Of course, validating data is getting easier and easier for us.

Because when data from some new domain comes in, we have more and more existing domains against which we can correlate and validate it.

We’ve developed lots of data quality assurance methods.

For a while all this stuff really scared us.

I mean, for 24 years now we’ve worked on Mathematica, where there is pretty much always a precise formal definition of whether a result is correct or not.

Well, over the years I think we’ve developed some pretty good and rigorous ways of testing that kind of correctness.

But of course, with most data it’s a more complicated story.

Still, we’ve been rather successful at constructing what amount to more and more sophisticated “theorems” that pieces of data must satisfy, or at least models that data should probabilistically satisfy.

And we’re then automatically able to test all these, even potentially on a real-time feed.
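To make the idea of "theorems" that data must satisfy a little more concrete, here is a minimal sketch in Python. The record fields, the particular checks, and the 1% tolerance are all assumptions made up for illustration; they are not a description of the actual Wolfram|Alpha checks.

```python
# Hypothetical consistency checks ("theorems") for a country-style record.
# Field names and the 1% tolerance are assumptions for illustration only.

def check_record(record):
    """Return a list of human-readable violations for one record."""
    problems = []
    if record["population"] < 0:
        problems.append("population must be non-negative")
    if record["area_km2"] <= 0:
        problems.append("area must be positive")
    # Parts should add up to the whole, within a small tolerance.
    parts = record["male_population"] + record["female_population"]
    if abs(parts - record["population"]) > 0.01 * record["population"]:
        problems.append("male + female does not match total population")
    return problems


good = {"population": 1000, "area_km2": 50.0,
        "male_population": 495, "female_population": 505}
bad = {"population": 1000, "area_km2": 50.0,
       "male_population": 300, "female_population": 400}

print(check_record(good))  # no violations
print(check_record(bad))   # one violation: the parts do not sum to the total
```

Because each check is just a function of the record, the same checks could in principle be run on every new item arriving from a feed.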

Well, OK, so let’s imagine that we’ve managed to get data to the point where it’s validated as being clean.

Then what?

Well, the next big step for us is usually linguistics.

Usually there are lots of entities in the data. Cities. Chemicals. Animals. Foods. Monuments. Whatever.

Well, then we have to know: how will people refer to these things?

In some domains—for some types of entities—there are pre-existing standardized backbones, perhaps established by standards bodies.

That give us a definite, canonical, labeling scheme for all entities of that type.

But often we have to invent a scheme to use. Because we always need a definite systematic way to refer to entities so that we’re able to compute with them.

But OK. So that’s fine inside our computer. But how do we connect this with the way that actual people refer to entities?

There may be a standardized scheme that gives us New York City. But we need to know that people also call it “New York”, or “NYC”, or “The Big Apple”.

And this is where we have to collect a different kind of data. In effect, we have to systematically collect “folk information”.

And this is a place where the web—and things like Wikipedia—are really useful.

We need as big a corpus as possible to be able to figure out how people really name things.
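One simple way to picture the link between a canonical label and the names people actually use is an alias table. The sketch below is in Python, and the identifier format "City:NewYork" and the function names are invented for this example.

```python
# Hypothetical alias table: many surface forms map to one canonical ID.
# The identifier format "City:NewYork" is invented for this sketch.

ALIASES = {
    "new york city": "City:NewYork",
    "new york": "City:NewYork",
    "nyc": "City:NewYork",
    "the big apple": "City:NewYork",
}

def normalize(text):
    """Lowercase and collapse whitespace so lookups are forgiving."""
    return " ".join(text.lower().split())

def canonical_entity(text):
    """Return the canonical ID for a name, or None if it is unknown."""
    return ALIASES.get(normalize(text))

print(canonical_entity("NYC"))               # City:NewYork
print(canonical_entity("  The Big  Apple"))  # City:NewYork
print(canonical_entity("Gotham"))            # None
```

The hard part described here is filling such a table, not looking things up in it.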

Well, it depends on the domain, but this “linguistics” step is usually quite difficult, and time consuming.

And since it’s representing the way humans refer to things, it pretty inevitably requires the involvement of humans. And for specialized domains, it requires domain experts.

It’s surprisingly common that we find ways that everyone in a particular domain refers to something, but it’s some kind of slang or shorthand that’s basically never written down.

And here’s another issue: people for example in different places can use the exact same term to refer to different things.

Whether it’s the same currency symbol getting used for lots of currencies. Or whether it’s the same name getting used for lots of different cities.

So how does one disambiguate? Well, in Wolfram|Alpha we typically know where a user is, from geoip, or from some kind of mobile device location service.

And when we combine that with actual detailed knowledge that we have, we can use that to disambiguate.

Like for cities, for example. Where we know the distance to the city. The population of the city. Whether the city is in the same country. And we have kind of a “fame index”, that’s based on things like Wikipedia page size and links, or perhaps home page web traffic.

Well, we can combine all those things to figure out which “Springfield”, or whatever, someone is talking about. And actually, when we look at actual user behavior on the Wolfram|Alpha website, I’m amazed at how often we manage to get this exactly right.
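As a rough sketch of how signals like these might be combined, the Python below scores each candidate city and picks the highest. The candidate data, the weights, and the scoring formula are all invented for illustration; the talk does not say how the real combination is done.

```python
import math

# All candidate data and weights below are made up for illustration.
CANDIDATES = [
    {"name": "Springfield A", "country": "US",
     "population": 115_000, "distance_km": 150.0, "fame": 0.8},
    {"name": "Springfield B", "country": "US",
     "population": 155_000, "distance_km": 1400.0, "fame": 0.6},
    {"name": "Springfield C", "country": "CA",
     "population": 1_000, "distance_km": 700.0, "fame": 0.1},
]

def score(candidate, user_country):
    """Combine the signals into one number; higher means more likely."""
    nearness = 1.0 / (1.0 + candidate["distance_km"] / 100.0)
    size = math.log10(candidate["population"]) / 7.0  # roughly 0..1
    same_country = 1.0 if candidate["country"] == user_country else 0.0
    return (0.4 * nearness + 0.2 * size
            + 0.2 * same_country + 0.2 * candidate["fame"])

def disambiguate(candidates, user_country):
    """Pick the candidate with the highest combined score."""
    return max(candidates, key=lambda c: score(c, user_country))

best = disambiguate(CANDIDATES, user_country="US")
print(best["name"])  # Springfield A
```

With these made-up numbers the nearby, fairly famous city in the user's own country wins, even though another candidate has a larger population.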

Well, OK. So one difficult issue is the naming of entities—linguistics for entities.

But an even more difficult issue is linguistics for properties of entities.

There tends to be a lot more linguistic diversity there.

Like: what is the linguistics for “average traffic congestion time” for a city?

How do people talk about that?

Well, we have some pretty sophisticated ways to take apart freeform natural language input.

It has some elements of traditional parsing, but it’s mostly something more complicated.

Because when, for example, people walk up to a Wolfram|Alpha input field, they don’t type in beautifully formed sentences.

They just type in things as they think of them. With some little fragments of grammar, but overall something that I think is much closer to the “deep structure” of language—much closer to undigested human thinking, presented in linguistic form.

There’s some regularity and structure. And we use that as much as we can.

But ultimately one has to know the raw pieces—the raw words and phrases—that people use to refer to a particular property.

Often there are a surprising number of them. That are quite difficult to identify.

Though it’s getting easier for us, because of both automation and human procedures.

Well, a lot of raw data can be represented in terms of entities and properties, perhaps with some attributes or parameters thrown in.

But that’s only the beginning. Beyond the data one has to represent operations and sometimes what amount to pure concepts.

And one of the big things for us in Wolfram|Alpha is that we get to use the general symbolic language in Mathematica to represent all of these kinds of things—in a nice uniform way.

Of course, translating from human utterances in natural language to that internal symbolic form is really difficult.

And actually, it was one of the many reasons I thought Wolfram|Alpha might simply not be possible.

I mean, people have been working on trying to have computers understand natural language for nearly 50 years.

But one big advantage we’ve had is that we’re trying to solve kind of the inverse of the usual problem.

Usually, people have lots of natural language text, and want to go through and “understand” it. We’ve instead got lots of underlying computations that we know how to do, and our goal is then to take some short human utterance, and see if we can map that onto a computation that we can do.
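A toy version of this "inverse" problem is sketched below in Python: start from a small set of things the system knows how to compute, and try to map a short fragmentary input onto one of them. The vocabularies, the output structure, and the matching rules are hypothetical and far simpler than anything a real system would need.

```python
# Hypothetical vocabularies; real coverage would be vastly larger.
PROPERTIES = {"life expectancy": "LifeExpectancy", "population": "Population"}
ENTITIES = {"finland": "Country:Finland", "spain": "Country:Spain"}
MODIFIERS = {"male": ("sex", "male"), "female": ("sex", "female")}

def interpret(utterance):
    """Map a short free-form query onto a structured request, or None."""
    text = " ".join(utterance.lower().split())
    request = {"property": None, "entity": None, "parameters": {}}
    # Multi-word property phrases are matched first and then removed.
    for phrase, prop in PROPERTIES.items():
        if phrase in text:
            request["property"] = prop
            text = text.replace(phrase, " ")
    tokens = text.split()
    # Remaining tokens are matched in any order; no grammar is assumed.
    for i, token in enumerate(tokens):
        if token in ENTITIES:
            request["entity"] = ENTITIES[token]
        elif token in MODIFIERS:
            key, value = MODIFIERS[token]
            request["parameters"][key] = value
        elif token == "age" and i + 1 < len(tokens) and tokens[i + 1].isdigit():
            request["parameters"]["age"] = int(tokens[i + 1])
    if request["property"] is None or request["entity"] is None:
        return None
    return request

print(interpret("male age 50 finland life expectancy"))
```

The input only succeeds if it lands on a known property and a known entity, which is the sense in which the known computations constrain the interpretation.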

I’m not sure if it’s really an easier problem. But what I do know is that—particularly with methods that come from the new kind of science I’ve studied—we’ve managed to get a remarkably long way in solving the practical problem.

I should say that even the difficulty of entity linguistics pales in comparison to the general problem.

And it’s particularly bad when one’s dealing with “expert” notation, whether it’s little two-letter names for units, or weird shorthand notation in chemistry, or math, or mechanical parts. Or strange special-case linguistic constructs, that people understand because of some quirk of linguistic history.

But if one’s really going to get the most out of data, these are the problems one has to solve.

But OK, so let’s imagine that we’ve figured out what a particular question is.

What does it take to answer it?

Sometimes pretty much one just has to look up the value of some particular property of some entity.

But the vast majority of the time that raw lookup isn’t what people really want.

They want to take one value—or perhaps several values from several different places—and do some computation with them.

Sometimes the computation is pretty trivial. Like changing units or something. But often it’s much more elaborate.

Often the raw data is almost just a seed for a computation. And one has to do the whole computation to get an answer that’s really what a person wants.

It’s one thing just to look values up in a database. But to get actual answers to real questions takes a whole additional tower of technology—that’s what we’ve effectively been building all these years in Wolfram|Alpha.

Well, OK, but back to raw data.

In the past when one went to some data source one would typically extract just a few pieces of data, or perhaps some definite swath of data.

And then to know what the data meant, one could go and read through some description or documentation.

But if one’s going to compute with data—if one’s going to make automatic use of the data—one has to understand and encode its meaning in some much more systematic way.

At a very simple level, what units is some value really measured in? If one’s going to compute things correctly, one has to know that something is “per year”; it’s not good enough just to have a raw number.

And then, what does that “consumption figure” or that “lifetime” really mean? One has to know, correctly and precisely, if one’s going to be able to compute with it.
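The point about "per year" can be illustrated with a value that carries its unit and time basis along with the number. The Python below is a minimal sketch; the `Rate` class, its fields, and the tiny conversion table are hypothetical.

```python
from dataclasses import dataclass

# A minimal sketch of a value that carries its unit and time basis.
# The Rate class and the conversion table are hypothetical.
PERIODS_PER_YEAR = {"year": 1, "month": 12}

@dataclass(frozen=True)
class Rate:
    amount: float
    unit: str  # e.g. "tonnes"
    per: str   # e.g. "year" or "month"

    def to_per_year(self):
        """Convert to a yearly rate so rates can be compared or added."""
        factor = PERIODS_PER_YEAR[self.per]
        return Rate(self.amount * factor, self.unit, "year")

def add(a, b):
    """Add two rates, refusing to mix different units."""
    if a.unit != b.unit:
        raise ValueError(f"cannot add {a.unit} and {b.unit}")
    a, b = a.to_per_year(), b.to_per_year()
    return Rate(a.amount + b.amount, a.unit, "year")

total = add(Rate(120.0, "tonnes", "year"), Rate(10.0, "tonnes", "month"))
print(total)  # Rate(amount=240.0, unit='tonnes', per='year')
```

With raw numbers alone, 120 and 10 would simply add to 130; keeping the "per month" attached is what makes the correct total of 240 per year come out.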

Well, so how does one organize getting all this information?

I don’t think we could have done the Wolfram|Alpha project if it wasn’t for the fact that within our rather eclectic company, we have experts on a lot of different topics.

Almost every day we’re hitting our internal “who knows what” database and finding that we have an in-house expert on some obscure thing.

But more than that, it’s been really nice that it’s so easy for us to connect with outside experts, and to reach the world expert in almost anything.

And by now we have quite a procedure worked out—that optimizes everybody’s time—for getting the information we need from experts.

In our pipeline for dealing with data, it’s not good enough for us just to have data. In order to compute from data, we actually have to understand in a formalizable way exactly what the data means.

And, then, of course, we actually have to do the computations.

We’ve set a pretty high standard for ourselves. We want to get the best answers that are possible today, bar none. We want to use the best possible research-level methods in every possible field.

And I have to say that the “getting it right” problem is very nontrivial. It’s often easy to get things “sort of right”. But it’s a lot harder to actually get it right.

We have one huge advantage: Mathematica. Because when some method for computing something calls for solving a differential equation, or minimizing some complicated expression, Mathematica just immediately knows how to do it. It’s sort of free for us.

So if we can only manage to find out—from some appropriate expert, or whatever—what the best method for computing something is, then in principle we have a straight path to implementing it.

In practice, though, the biggest problem is getting the method explicit enough. It’s one thing to read about some method in a paper. It’s another to have it nailed down precisely enough that it can become an actual algorithm that one can run.

And that’s usually the place where there’s the most interaction with experts. Back and forth validating what things really mean—and often discovering that there are actually pieces that have to be invented in order to complete a particular algorithm.

Well, OK. So let’s say we’re successfully able to compute things.

Often we can actually do a lot of different computations to respond to a particular query.

So then there’s a question of what to actually present in the end, and how to present it.

And I’m actually rather proud of some of the “devices” that we’ve invented for presenting things.

I guess all those years of visualization development, informational websites and expository writing—not to mention my own experiences with algorithmic diagrams in things like the NKS book—are somehow paying off.

But still, there’s a lot of judgment needed. A lot of expert input. About what’s the most important thing to present. And about how to avoid confusions, even when people look at results quickly.

Well, by now we’ve developed a lot of algorithms and heuristics for these things. But it still always requires human input.

Actually, it’s usually started from me. Designing ways to present different kinds of information, and ways to pick out what’s most important.

But we steadily turn the things into procedures, that can either be completely automated, or at least used through some streamlined management process.

One thing that’s often difficult is what to do when there’s only so far that current data or methods let one go.

We try to word things to set the expectations properly—and we work hard on those footnotes that try to explain why results might not correspond to reality. “Current predicted values, excluding local perturbations”, and so on.

Then there are issues like rankings when we can’t know whether we have all of something.

It’s reasonable to ask for the largest rivers. It’s not reasonable to ask for the smallest ones. Though of course people do.

Anyway, so, we’ve built quite a pipeline for curating data—and for getting it to the point where it’s computable, and where we can do all sorts of wonderful things with it.

One part of this is dealing with specific data by itself. Another part is connecting that data to every other kind of data.

That’s part of what it really means for data to be computable.

But, OK, so how do we hook data together?

At a very simple level, values somewhere may obviously correspond to other kinds of data—or may at least be measured in particular units that obviously relate to some other kind of data.

But in general there can be complicated relations between different kinds of entities, and properties, and data.

I’m a big one for global theories. But as we’ve built out Wolfram|Alpha, I’ve actually had a principle of always starting off avoiding global theories.

We’ve steadily built things in particular domains, setting up relations and ontologies in those domains. And only gradually seeing general principles, and building up more general frameworks that go across many domains.

If I look at our current frameworks—and the whole ontological system that’s been emerging—I have to say I don’t feel bad about not having figured it all out up front.

There are lots of parts of it that just aren’t like those ontological theories that philosophers have come up with, or even that people have imagined should exist in a semantic web.

Most often the issue is that what’s been imagined is somehow static. But in fact many important relations are defined dynamically by computation.

And fortunately for us, with the general symbolic language we have in Mathematica, it’s immediately possible for us to represent such things.

You know, it’s often been surprising to me just how powerful this concept of computable data is.

I mean, there are lots of places where I’ve thought: well, we can get this or that kind of data.

But it’s just dead, raw, data. How can one compute from it?

Take people, for example. What can one compute from information about a person?

Well, then you start realizing that with multiple people you can compute timelines. You can see how much overlap there was. When someone’s important anniversary is. Whatever.
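As a small illustration of computing from data about people, the Python sketch below works out how long two lifetimes overlapped and when the next round-number anniversary of a birth falls. It uses years only, and the function names and the 50-year step are choices made for this example.

```python
# Lifespans as (birth_year, death_year), years only, Gregorian calendar.
LIFESPANS = {
    "Isaac Newton": (1643, 1727),
    "Gottfried Leibniz": (1646, 1716),
}

def overlap_years(a, b):
    """Number of years during which both people were alive (0 if none)."""
    start = max(a[0], b[0])
    end = min(a[1], b[1])
    return max(0, end - start)

def next_round_anniversary(birth_year, current_year, step=50):
    """Next year after current_year that is a multiple of `step` years
    after the birth year."""
    elapsed = current_year - birth_year
    return birth_year + (elapsed // step + 1) * step

newton = LIFESPANS["Isaac Newton"]
leibniz = LIFESPANS["Gottfried Leibniz"]
print(overlap_years(newton, leibniz))           # 70
print(next_round_anniversary(newton[0], 2010))  # 2043
```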

Or one can thread information about people back to cities. It’s kind of fun to go to Wolfram|Alpha and type in a city, and see what famous people were born there.

Or one can look at well-known people’s names, and see how they relate to the time series of popularity for those baby names. Then use mortality curves to see the ages today of people given those names.

I could go on and on.

It’s really been fascinating. And as we cover more and more domains, it’s been accelerating. We’ve been able to make richer and richer connections.

And when one really has computable data, one effectively has a system that can just automatically start to find connections between things—and in essence just automatically make new discoveries.

You know, it’s interesting. At the beginning, I had no real idea that Wolfram|Alpha was even possible.

We took our best shot at setting up general frameworks and general principles. And fortunately they weren’t bad. But as we actually started to pour knowledge into the system, we were able to see lots of new possibilities, and new issues.

And we’ve actually been rather successful, I think, at balancing the pragmatic work of just adding lots of detailed functionality, with longer-term systematic construction of much more general frameworks.

I always tend to adopt that kind of portfolio approach to R&D. With lots of short-term activity, but a range of longer-term projects too.

And for example in Wolfram|Alpha we’re just installing the fourth level of our main linguistic analysis system. All the previous levels are still in there and running. But the new level is more general, and covers a broader area, can handle all sorts of more complex inputs, and is much more efficient.

For our data system, too, we’ve had several generations of functionality.

Right now, we’re starting to install what we call our “data cloud”—that’s in a sense a generalization of a lot of previous functionality.

At the lowest level, we typically use databases to store large-scale data. But different kinds of data call for different kinds of databases. Sometimes it’s SQL. Sometimes it’s geospatial. Sometimes it’s a graph database. And so on.

But our data cloud—among other things—provides a layer of abstraction above that. It defines a symbolic language that lets one transparently make queries about data across all those different types.

And it lets us efficiently ask all sorts of questions about data—with data from all possible domains automatically and transparently being combined.
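One way to picture a layer of abstraction above different kinds of databases is a single query interface that routes each request to whichever store holds that kind of data. The Python below is only a sketch of that general pattern: the class names, the routing by property, and the sample data are all hypothetical, and nothing here describes the real data cloud.

```python
import sqlite3

class SqlBackend:
    """Stores (entity, property, value) rows in an in-memory SQLite table."""
    def __init__(self, rows):
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE facts (entity TEXT, prop TEXT, value REAL)")
        self.db.executemany("INSERT INTO facts VALUES (?, ?, ?)", rows)

    def get(self, entity, prop):
        row = self.db.execute(
            "SELECT value FROM facts WHERE entity = ? AND prop = ?",
            (entity, prop)).fetchone()
        return None if row is None else row[0]

class GraphBackend:
    """Stores relations as a mapping from (entity, relation) to entities."""
    def __init__(self, edges):
        self.edges = edges

    def get(self, entity, prop):
        return self.edges.get((entity, prop))

class DataCloud:
    """Routes each property to whichever backend holds it."""
    def __init__(self):
        self.routes = {}

    def register(self, prop, backend):
        self.routes[prop] = backend

    def query(self, entity, prop):
        backend = self.routes.get(prop)
        if backend is None:
            raise KeyError(f"no backend registered for {prop!r}")
        return backend.get(entity, prop)

cloud = DataCloud()
cloud.register("population", SqlBackend([("CityA", "population", 250000.0)]))
cloud.register("neighbors",
               GraphBackend({("CityA", "neighbors"): ["CityB", "CityC"]}))

print(cloud.query("CityA", "population"))  # 250000.0
print(cloud.query("CityA", "neighbors"))   # ['CityB', 'CityC']
```

The caller asks the same kind of question in both cases and never needs to know that one answer came from SQL and the other from a graph structure.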

OK, so here’s another issue.

Data gets stale. One can curate data at one time. But one always has to plan for re-curation.

Often it’s actually easier if the data is coming from a feed—every second or every minute—than if the data appears every quarter or every year, or at random times, perhaps as a result of world events.

There are always issues about data that we’ve modified, and then a new version of what’s underneath comes along. But we’ve now got pretty streamlined systems for all of this, and our data cloud will make it even better.
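The issue of modified data meeting a new underlying version can be sketched as follows, in Python. This is an assumed approach for illustration only: curated corrections are stored separately, re-applied to each new upstream version, and flagged for a human when the value underneath has changed. The function and variable names are hypothetical.

```python
def recurate(old_upstream, new_upstream, corrections):
    """Apply curated corrections to a new upstream version.

    Returns (merged, conflicts). A conflict is a key where a value had
    been corrected and the upstream value has since changed, so a human
    should decide whether the correction still applies.
    """
    merged = dict(new_upstream)
    conflicts = []
    for key, fixed_value in corrections.items():
        if old_upstream.get(key) != new_upstream.get(key):
            conflicts.append(key)      # leave upstream value, ask a human
        else:
            merged[key] = fixed_value  # upstream unchanged, keep our fix
    return merged, conflicts

old = {"a": 1, "b": 2}
new = {"a": 1, "b": 3, "c": 4}
fixes = {"a": 10, "b": 20}

merged, conflicts = recurate(old, new, fixes)
print(merged)     # {'a': 10, 'b': 3, 'c': 4}
print(conflicts)  # ['b']
```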

Well, OK, what if there’s some data that one needs, but it just doesn’t exist in any organized form?

We’ve been involved over the years with assembling a lot of mathematical and algorithmic data.

And in that area, you can just go out and compute new data. Which we’ve done a lot.

Sometimes there’s data that you can go out and measure automatically.

With lab automation or scanning or whatever. And we’ve been increasingly gearing up to do that in various domains.

But sometimes there’s data that has been found, but has never been aggregated or systematized—and it’s scattered across all sorts of documents—or worse is common knowledge if you manage to ask the right person.

Well, if it’s in documents, then you can think about using natural language processing to pull it out.

And believe me, we’ve tried that. In all sorts of ways. But here’s what seems to happen. Every single time.

You’ve got 10 items to look at. You do 7 of them. They work great. You’re thrilled. You think: finally we can just use NLP for everything. But then there’s one that’s a bit off. And then there’s one that’s complete nonsense. Completely wrong.

And then you realize the following. If you do a really good job with NLP, then perhaps you can pick out a correct result 80% of the time. But that means you’ll still be wrong 20% of the time.

And the crippling issue is that you can’t tell which 20%. So you’re basically back to square one—with humans having to look at everything.
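The arithmetic behind this is easy to spell out. The short Python sketch below assumes, purely for simplicity, that each item is extracted correctly with the same probability and independently of the others.

```python
def expected_errors(n_items, accuracy):
    """Expected number of wrong results among n_items."""
    return n_items * (1.0 - accuracy)

def prob_all_correct(n_items, accuracy):
    """Chance that every one of n_items is right (independence assumed)."""
    return accuracy ** n_items

print(round(expected_errors(10, 0.8), 2))   # 2.0
print(round(prob_all_correct(10, 0.8), 3))  # 0.107
```

So with 80% accuracy, a batch of 10 items is expected to contain about 2 errors, and is entirely correct only about one time in nine. Without a signal saying which items are the wrong ones, all 10 still have to be checked.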

And, OK, we’ve got a few hundred enthusiastic humans within our own organization.

But one of the really nice things that’s happened with Wolfram|Alpha being out and about in the world is that lots of people have emerged who are very keen to help us. To help push forward this goal of making the world’s knowledge computable.

And the result has been that we’ve been able to assemble quite a network of volunteers.

So far we’ve got a few hundred active volunteers specifically focused on data. And doing a variety of different things. Sometimes cleaning data. Or filling in missing data. Sometimes for example assembling things that are common knowledge, but only in a specific country.

We’ve been trying to work out how to make the best use of volunteers, especially for data curation.

And actually, just last week we’ve dramatically expanded our volunteer data curation mechanism.

We want to know who all our volunteers are. And we try to work with them.

And what we’re now planning to do is to have a series of “data challenges”, having volunteers focus on particular areas they’re interested in and knowledgeable about. Right now some of our top challenge areas are data about fictional characters, coinage of countries, and video games.

In general, one of the fertile areas is extracting structured data from text—perhaps with hints from NLP. Another is actually going out and measuring things.

We’ve found there’s quite a wide spectrum of volunteers, often very educated and experienced. One quite large component is retired people. Another—relevant for tasks that don’t need as much experience—is high-school students, who can often get some form of credit for volunteering for Wolfram|Alpha.

Data curation isn’t for everyone. It’s a different kind of activity from writing text for Wikipedia. Or driving streets for OpenStreetMap. But we’ve found there are a lot of very capable and enthusiastic volunteer data curators out there, and we’re expecting to dramatically scale this effort up.

You know, it’s interesting. There’s a vast universe of data out there.

And in the Wolfram|Alpha project, our goal is to make as much as possible of it computable.

At the beginning it was a little daunting. You’d go into a large reference library, and see all these rows of shelves.

But as time has gone on, it’s become much less daunting. And in fact, as one looks around the library, one can see that most of that data is now in Wolfram|Alpha—in computable form.

Of course, we’ve got a giant to-do list. And every single day, as we analyze what our users try to do with Wolfram|Alpha, we’re generating—in some cases almost automatically—more and more of a to-do list.

At the beginning, we wondered just how well we could scale all our processes up. But I have to say that that’s worked out better than I’d ever imagined.

Many things get easier as one has more domains. It’s easier to validate new data. It’s easier to find precedents within the system for how to do things.

Linguistically there can be some challenges: with new data coming in, there can be names that clash with existing names. And in fact within our data curation operation, there’s a definite “declashing” function—that’s partly automated, and partly again relies on human judgment.
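The automated half of a "declashing" step might look something like the Python sketch below, which finds incoming names that already refer to a different entity and hands them back for a human decision. The identifiers and the function name are invented for this example.

```python
def find_clashes(existing, incoming):
    """Return names in `incoming` that already refer to a different entity.

    Both arguments map a name to an entity ID. Keys of `existing` are
    assumed to be lowercase already.
    """
    clashes = {}
    for name, entity in incoming.items():
        key = name.lower()
        if key in existing and existing[key] != entity:
            clashes[key] = (existing[key], entity)
    return clashes

existing = {"mercury": "Planet:Mercury", "mars": "Planet:Mars"}
incoming = {"Mercury": "Element:Mercury", "Gold": "Element:Gold"}

print(find_clashes(existing, incoming))
# {'mercury': ('Planet:Mercury', 'Element:Mercury')}
```

Detecting the clash is the mechanical part; deciding what each name should mean by default is where the human judgment comes in.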

Well, OK, so we’re working hard to grow Wolfram|Alpha. In fact, every single week since we launched last May, we’ve been putting a new version of our codebase into production. For us internally, there’s actually a new version every hour. And it’s a pretty complex adventure in software engineering to validate and deploy the whole system each week.

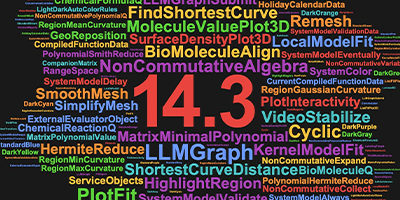

But it’s pretty exciting for us to see the progress in the public functionality.

Well, OK. So we’re building this big Wolfram|Alpha thing. A lot of technology and content and structure.

But what are we really going to do with it?

Well, I should say that I consider us just at the very beginning with that. A lot will unfold over the next few years. Some of it is already in our plans; some of it I think is going to be wonderful stuff that we absolutely don’t realize yet.

But obviously right now, a central aspect of Wolfram|Alpha is the Wolfram|Alpha website, which lots of people use every day.

It’s been interesting to track that usage over the last year.

It’s definitely getting more sophisticated. As people realize what Wolfram|Alpha can do, they try more and more.

At the beginning, lots of people thought that Wolfram|Alpha was like a search engine—picking up existing textual phrases from the web.

But gradually people seem to have realized that it’s a different—and complementary—kind of thing. Where the system has built-in computational knowledge, which it uses to compute specific answers to specific questions.

And, you know, these days the vast majority of questions that Wolfram|Alpha gets asked are ones that it has never been asked before. They’re ones where it has no choice but to figure the answer out.

Oh, and by the way, these days the majority of queries to Wolfram|Alpha give zero hits in a search engine; they don’t ever appear literally on the web.

So the only way to get an answer is to actually compute it.

Well, OK. But the website is just a piece of the whole Wolfram|Alpha story.

Because what Wolfram|Alpha introduces is really a general technology—the arrival of what I call “knowledge-based computing”.

And the website is just one example of what Wolfram|Alpha technology makes possible.

But our goal is to make Wolfram|Alpha technology ubiquitous—everywhere. To deliver computational knowledge whenever and wherever it’s needed.

We’ve got a pretty extensive road map of how to do that.

Let me mention a few pieces that are already out and about in the world.

A while ago we announced a deal with Microsoft for the Bing search engine to use Wolfram|Alpha results.

In a sense combining search with knowledge.

Last year we introduced a Wolfram|Alpha iPhone app—and earlier this year an iPad app.

On the iPad you may have seen The Elements book, produced by Touch Press—a spinoff from our company—that’s getting featured in a lot of iPad ads.

The Elements has the first example of Wolfram|Alpha ebook technology, where computational knowledge—and data—can be directly delivered inside the ebook from Wolfram|Alpha.

Well, a few weeks ago we also rolled out Wolfram|Alpha Widgets, which allow people to make their own custom interfaces to Wolfram|Alpha.

In fact, in under a minute you can make something like a web calculator that calls on Wolfram|Alpha—and then deploy it on a website, a blog, a social media site, or whatever.

And already people have made over a thousand of these widgets.

I might say that all the things I’ve just mentioned are built using the Wolfram|Alpha API. And we’re soon going to be rolling out several levels of API.

That allow you to integrate Wolfram|Alpha in pretty much arbitrary ways into websites, or programs, or whatever.
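
To make the idea of calling Wolfram|Alpha from a program concrete, here is a minimal sketch that only builds a query URL. The talk does not specify the API's shape, so the endpoint and the `appid` and `input` parameter names are assumptions taken from the public query API as later documented. The helper `build_query_url` and the placeholder `DEMO-APPID` are hypothetical.

```python
from urllib.parse import urlencode

# Assumed endpoint of the public query API; not stated in the talk.
API_ENDPOINT = "https://api.wolframalpha.com/v2/query"


def build_query_url(question: str, app_id: str) -> str:
    """Build the URL for a freeform question (hypothetical helper).

    `app_id` stands in for a developer key; "DEMO-APPID" below is a placeholder.
    """
    params = {"appid": app_id, "input": question}
    return f"{API_ENDPOINT}?{urlencode(params)}"


url = build_query_url("population of France", "DEMO-APPID")
print(url)
```

A website or program would fetch this URL with a real key and then parse the structured result. That step is left out here because it needs network access and credentials.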

Well, I have to say that the things I’ve just listed represent a pretty small fraction of all the ways that are under development for delivering Wolfram|Alpha technology.

We’re having a great time taking the core technology of Wolfram|Alpha, and seeing more and more interesting places where it can be deployed.

A lot of it connects to outside environments. But there are also some pieces that build in interesting ways on the other parts of the technology stack that we as a company have built.

An example is CDF.

CDF—the computable document format—is a way that we’ll be releasing soon of very easily creating dynamic, interactive, documents that immediately build on all the algorithms and automation that we’ve been setting up in Mathematica for the past 24 years.

There’s a precursor of CDF that drives our Demonstrations Project. Where people contribute interactive demonstrations of all sorts of things. 6000 of them so far.

Well, one of the things one can do is to get Wolfram|Alpha to produce CDF.

Which means that the things one’s generating are immediately interactive.

OK. Another critical piece of our technology stack is Mathematica itself.

It’s interesting to compare Mathematica with Wolfram|Alpha.

Mathematica is a precise language, in which with sufficient effort, one can build up arbitrarily complex programs.

Wolfram|Alpha, on the other hand, just handles any old freeform input, and lets one handle a very broad collection of one-shot computations.

Well, what happens if one tries to bring them together?

OK… let me show something we have in the lab today.

This is a Mathematica session.

We can just type ordinary precise Mathematica language stuff into it.

But now there’s another option too: we can type freeform input… then we can have Wolfram|Alpha interpret it.

Effectively we’re programming Mathematica using plain English.

And it’s translating that plain English into precise Mathematica language. Which we can then build with.

Well, OK, so we can also access data via this freeform input.

Then compute with the data in Mathematica.

Actually, there’s data that’s quite immediately accessible in Mathematica.

Of course, Mathematica doesn’t just deal with numeric or textual data.

Its symbolic language can handle absolutely any kind of data. Like image data, for example.

Well, in the future it’s going to be possible to get any data that exists in Wolfram|Alpha directly into Mathematica.

And in Mathematica you can not just generate static output. You can also use CDF technology to generate dynamic output.

You can take data and immediately do computations, and produce dynamic reports, or presentations, or whatever.

It’s a pretty powerful setup. And we’re always keeping up to date on all the different ways to deploy things with Mathematica.

I might say that within Mathematica, I think we’ve really developed some pretty spectacular ways for handling data.

Which we get to use in building our curation, computation and visualization pipelines in Wolfram|Alpha.

But which one can also use quite separately, for example doing analysis of large—or small—sets of data.

And it’s neat to see how our whole web of algorithms can contribute. And how the symbolic character of the language contributes.

For example, in the last few years we’ve made some breakthroughs in ways to do modeling and statistics—that should be rolling out in versions of Mathematica soon.

Well, OK, so in the world of Wolfram|Alpha, there’s another important thing that’s been going on.

Using our data curation—and computation—capabilities, as well as Mathematica, to do with data inside organizations what we’re doing with public data on the Wolfram|Alpha website.

I must say that I thought this kind of custom Wolfram|Alpha business would take longer to take off. But ever since Wolfram|Alpha launched, we’ve been contacted by all sorts of large organizations who want to know if we can get Wolfram|Alpha technology to let them work with their data.

And our consulting organization has been very busy doing just that—and deploying Wolfram|Alpha appliances that sit inside people’s data centers to deliver Wolfram|Alpha capabilities.

You know, it’s pretty interesting: with the linguistic capabilities we have, and the whole data curation pipeline we’ve built, we’re able to do some pretty spectacular things with people’s data.

And there’s a lot more to come along these lines.

Well, OK, what about people—like many of you—who have all sorts of interesting data that you’ve already organized in one way or another.

Well, obviously, we’re already working with many of you to include that data in the standard, public, version of Wolfram|Alpha—and to be able to deploy it through all the channels that Wolfram|Alpha technology reaches, and will reach in the future.

But OK. What about all that wonderful proprietary data that perhaps is the basis for your whole business?

We want to figure out a way to work with that so that everyone—including consumers—comes out ahead.

These are early days. But let me share with you some ideas for how we think this ecosystem could develop.

And I should say that we’re very keen to get input and suggestions about all of this.

Here’s the basic point, though. We’d like to figure out how best to take wonderful raw data, and make it computable, combine it with all sorts of other data, and deploy it in every possible way.

I mentioned our data cloud system. Well, the data cloud is going to have a very general API that lets people upload data to it.

And if people want to, they can just download data through the API too.

But the much more interesting thing is to have that data made computable, and flowed through Wolfram|Alpha.

So that it’s pulled in exactly the way it’s needed in answering people’s particular questions.

And it’s combined with all the other data that’s already in Wolfram|Alpha.

And deployed through every channel where Wolfram|Alpha can be deployed.

So what we’re working toward is having a way that organizations can sign up with us to flow their data into our data cloud.

Then we’ll set things up so that that data is computable, and is connected to everything else.

Then we’ll be able to have the data available—in fully computable form—in lots of venues.

Examples might be widgets or apps. Which make use of the full computational power of Wolfram|Alpha, but can be put on a website, or sold in an app store, by the original data provider, or through whatever channels they want.

And within Wolfram|Alpha itself, we’re planning to have “stubs” that show people when additional data—and computations—are available. And then let people pay to get access to that additional data, sharing the revenue with data providers.

We’re obviously happy to see people starting to be aggregated providers of raw data. That makes the integration—and perhaps the distribution—job a bit easier.

I see the main challenge right now as streamlining our data curation pipeline to make it realistic to take in so much additional data.

But we’re keen to start trying it out with people. And given everything we’ve already done with Wolfram|Alpha it’s absolutely clear that it’s ultimately going to work.

Well, OK. So what happens when we can get all these different forms of data set up to be computable in one place?

There are all sorts of exciting things that progressively become possible.

You know, there have been some traditional areas with huge spans of computable stuff. The most obvious is traditional mathematical science.

But when we have enough domains strung together, there are all sorts of new areas where there are starting to be huge spans of computable stuff.

An example is in biomedicine. Where there’s sort of a span going from medical conditions down to genomes. Different kinds of data at each level. But really stringing it together in a computable way will make some exciting things possible.

I just got my own whole 2 x 3 gigabase genome—and pieces of it will probably be in Wolfram|Alpha before too long. And it’s a fascinating thing seeing how with enough types of data one should be able to start making real conclusions from it.

You know, as we get data in all these different areas, it’s really clear how limited our current modeling methods are.

With NKS, one of the big ideas is not just to use standard mathematical equations to model things—but to create models by setting up arbitrary programs.

Traditional statistics tends to be about getting a formula, then tweaking parameters to make it fit observed data.
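
As a small illustration of that traditional approach, here is a sketch that takes a fixed formula, y = slope * x + intercept, and picks the two parameters that minimize squared error against observed data. The function `fit_line` and the sample points are hypothetical, chosen only to show the idea.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations that lie exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

The form of the model is decided in advance, and only its parameters are adjusted to the data.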

NKS gives a much more general approach—and we’re exploring how much we can automate it to discover models in all sorts of areas, that we can then for example use for validation, or interpolation, or prediction.
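
One way to picture model discovery by searching over programs is the toy sketch below. It is an illustration under stated assumptions, not the method being developed. The "programs" are limited to the 256 elementary cellular automaton rules, the observed data is generated by rule 30 rather than measured, and the names `step`, `evolve` and `matching_rules` are hypothetical.

```python
def step(cells, rule):
    """One update of an elementary cellular automaton with wraparound edges."""
    n = len(cells)
    return [
        # The three-cell neighborhood, read as a number 0..7, selects one bit of the rule.
        (rule >> (4 * cells[(i - 1) % n] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def evolve(initial, rule, steps):
    """Return the list of rows produced by running `rule` for `steps` updates."""
    rows = [list(initial)]
    for _ in range(steps):
        rows.append(step(rows[-1], rule))
    return rows


def matching_rules(observed):
    """Exhaustively search all 256 rules for those that reproduce the observed rows."""
    initial, steps = observed[0], len(observed) - 1
    return [rule for rule in range(256) if evolve(initial, rule, steps) == observed]


# Stand-in "observations": four steps of rule 30 from a single black cell.
initial = [0] * 5 + [1] + [0] * 5
observed = evolve(initial, 30, 4)
candidates = matching_rules(observed)
print(candidates)
```

With only a few observed steps, several rules may fit equally well. More data narrows the candidate list, and any surviving rule can then be run forward as a prediction.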

You know, it’s interesting to imagine where all this will go. Particularly with all the new sources of data that are starting to emerge in the world.

I think the total amount of data that each of us humans produce is going to go up and up. Because I’ve been a data enthusiast for a long time, I’ve been recording a lot of my own data. Like logging every keystroke I type for over 20 years. And more and more things like that.

And I can see that there’s a whole area of personal analytics, combining that kind of data with general data about the world.

And helping individual people to make decisions and so on.

You know, it’s been a trend in the world for a long time to replace rules of thumb for figuring out what to do by actual computations.

And as we get a better and better handle on data, we’re more and more able to do that.

You know, I’m something of a student of history. And I think it’s pretty interesting to see the role that data—and computation from it—has played over the course of time.

I hope you had a chance to see the timeline poster we just assembled about this.

We had a lot of fun making this. But I must say I found it very educational, and actually rather inspirational.

I’d always had the impression that data and the systematization of knowledge were important drivers of history.

But assembling all this together really drove this point home for me.

What we do with data—and the computation it makes possible—is fundamentally important for the progress of our civilization.

And I think that in these years we’re at a critical and exciting point, where—thanks among others to so many of you and your organizations—we have successfully assembled a huge swath of data.

And we’re now at the point where we can use it to take the knowledge of our civilization, and let everyone use it to compute and get answers.

I suspect that we will look back on these years as the ones where systematic data really came of age—and made possible true computational knowledge.

It’s exciting to be part of it. We have both great opportunity and great responsibility.

But this is a fascinating intellectual adventure. And I’m pleased that we could all be here today to meet and share our directions in these exciting times.

Thank you.

Thanks to the whole Wolfram Research team for this magnificent project of pooling data. Let us hope that the reluctance to share will be overcome, and that this vision of the future, which embodies great intellectual generosity, will prevail over compartmentalization and parochialism!

We (teachers and students alike) are all very excited by the astonishing possibilities of Wolfram Alpha and eagerly await the release of CDF.

BabelFish says that first comment @9:59 says:

“Thank you with all the team for Research Wolfram for this imposing project of pooling of data. Let us hope for how the reserves with the division will be surmounted and that this vision with a future which incarnates a great intellectual generosity will take precedence over the bulk-heading and the cliquishness! We all are very excited (Masters and pupils) by the astonishing possibilities of Wolfram Alpha and await the exit of CDF impatiently.”

If Wolfram could combine Alpha & Babel Fish you guys and gals could rule the world, break right through the bulk-heading and the cliquishness!

Cool article you got here. I’d like to read something more concerning that theme. The only thing it would also be great to see here is some pics of any devices.

Katherine Watcerson

Is Stephen’s keynote speech online?

How do you deal with politically-biased errors in data provided by governments and/or public organizations?

Like, for example, crime rate, unemployment rate, etc?