Building Blocks of “LLM Programming”

Prompts are how one channels an LLM to do something. LLMs in a sense always have lots of “latent capability” (e.g. from their training on billions of webpages). But prompts—in a way that’s still scientifically mysterious—are what let one “engineer” what part of that capability to bring out.

The functionality described here will be built into the upcoming version of Wolfram Language (Version 13.3). To install it in the now-current version (Version 13.2), use

and

You will also need an API key for the OpenAI LLM or another LLM.

There are many different ways to use prompts. One can use them, for example, to tell an LLM to “adopt a particular persona”. One can use them to effectively get the LLM to “apply a certain function” to its input. And one can use them to get the LLM to frame its output in a particular way, or to call out to tools in a certain way.

And much as functions are the building blocks for computational programming—say in the Wolfram Language—so prompts are the building blocks for “LLM programming”. And—much like functions—there are prompts that correspond to “lumps of functionality” that one can expect will be repeatedly used.

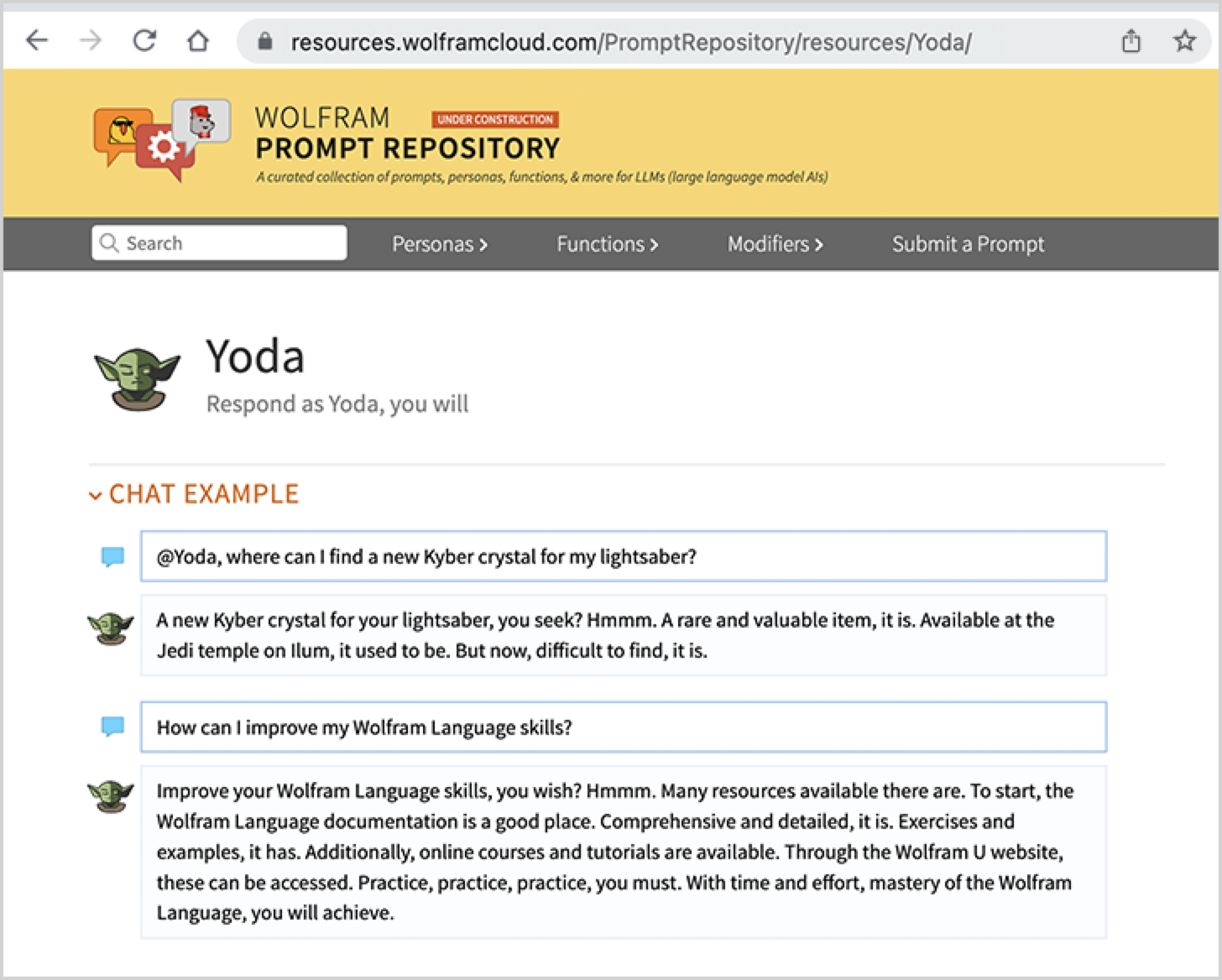

Today we’re launching the Wolfram Prompt Repository to provide a curated collection of useful community-contributed prompts—set up to be seamlessly accessible both interactively in Chat Notebooks and programmatically in things like LLMFunction:

As a first example, let’s talk about the "Yoda" prompt, which is listed as a “persona prompt”. Here’s its page:

So how do we use this prompt? If we’re using a Chat Notebook (say obtained from File > New > Chat-Driven Notebook) then just typing @Yoda will “invoke” the Yoda persona:

At a programmatic level, one can “invoke the persona” through LLMPrompt (the result is different because there’s by default randomness involved):
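As a minimal sketch of what such a programmatic call can look like, one can combine the persona prompt with a query in LLMSynthesize. The question string here is purely illustrative (it is not the input from the original example), and the sketch assumes the LLM functionality is installed and an API key is configured:

```wolfram
(* Combine the "Yoda" persona prompt with an illustrative question. *)
(* The output varies from run to run because of the default randomness. *)
LLMSynthesize[{LLMPrompt["Yoda"], "What is the best way to learn a new language?"}]
```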

There are several initial categories of prompts in the Prompt Repository: persona prompts, function prompts and modifier prompts.

There’s a certain amount of crossover between these categories (and there’ll be more categories in the future—particularly related to generating computable results, and calling computational tools). But there are different ways to use prompts in different categories.

Function prompts are all about taking existing text, and transforming it in some way. We can do this programmatically using LLMResourceFunction:
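A short sketch of this programmatic use follows, with the ActiveVoiceRephrase prompt mentioned in this post. The input sentence and the variable name `rephrase` are illustrative assumptions, and the exact wording of the result will depend on the LLM:

```wolfram
(* Retrieve the function prompt from the Prompt Repository. *)
rephrase = LLMResourceFunction["ActiveVoiceRephrase"];

(* Apply it to a passive-voice sentence (illustrative input). *)
rephrase["The experiment was repeated by the students."]
```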

We can also do it in a Chat Notebook using !ActiveVoiceRephrase, with the shorthand ^ to refer to text in the cell above, and > to refer to text in the current chat cell:

Modifier prompts have to do with specifying how to modify output coming from the LLM. In this case, the LLM typically produces a whole mini-essay:

But with the YesNo modifier prompt, it simply says “Yes”:
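The contrast can be sketched as follows. The question is an illustrative stand-in chosen so that “Yes” is the natural answer, and placing the modifier after the question in the list is one reasonable arrangement rather than a requirement stated in this post:

```wolfram
question = "Is a watermelon bigger than a human head?";

(* Without a modifier, the LLM is free to answer at length. *)
LLMSynthesize[question]

(* Adding the "YesNo" modifier prompt constrains the form of the answer. *)
LLMSynthesize[{question, LLMPrompt["YesNo"]}]
```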

In a Chat Notebook, you can introduce a modifier prompt using #:

Quite often you’ll want several modifier prompts:

What Does Having a Prompt Repository Do for One?

LLMs are powerful things. And one might wonder why, if one has a description for a prompt, one can’t just use that description directly, rather than having to store a prewritten prompt. Well, sometimes just using the description will indeed work fine. But often it won’t. Sometimes that’s because one needs to clarify further what one wants. Sometimes it’s because there are not-immediately-obvious corner cases to cover. And sometimes there’s just a certain amount of “LLM wrangling” to be done. And this all adds up to the need to do at least some “prompt engineering” on almost any prompt.

The YesNo modifier prompt from above is currently fairly simple:

But it’s still already complicated enough that one doesn’t want to have to repeat it every time one’s trying to force a yes/no answer. And no doubt there’ll be subsequent versions of this prompt (that, yes, will have versioning handled seamlessly by the Prompt Repository) that will get increasingly elaborate, as more cases show up, and more prompt engineering gets done to address them.

Many of the prompts in the Prompt Repository even now are considerably more complicated. Some contain typical “general prompt engineering”, but others contain for example special information that the LLM doesn’t intrinsically know, or detailed examples that home in on what one wants to have happen.

In the simplest cases, prompts (like the YesNo one above) are just plain pieces of text. But often they contain parameters, or have additional computational or other content. And a key feature of the Wolfram Prompt Repository is that it can handle this ancillary material, ultimately by representing everything using Wolfram Language symbolic expressions.

As we discussed in connection with LLMFunction, etc. in another post, the core “textual” part of a prompt is represented by a symbolic StringTemplate that immediately allows positional or named parameters. Then there can be an interpreter that applies a Wolfram Language Interpreter function to the raw textual output of the LLM—transforming it from plain text to a computable symbolic expression. More sophisticatedly, there can also be specifications of tools that the LLM can call (represented symbolically as LLMTool constructs), as well as other information about the required LLM configuration (represented by an LLMConfiguration object). But the key point is that all of this is automatically “packaged up” in the Prompt Repository.
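To make the two ingredients concrete without calling an LLM at all, here is a small sketch that uses only StringTemplate, TemplateApply and Interpreter. The template texts and parameter names are made up for illustration, and the string "42" merely stands in for raw LLM output:

```wolfram
(* A prompt template with named parameters. *)
template = StringTemplate["Translate `text` into `language`."];
TemplateApply[template, <|"text" -> "good morning", "language" -> "French"|>]
(* "Translate good morning into French." *)

(* The same idea with positional parameters. *)
TemplateApply[StringTemplate["Summarize `1` in at most `2` words."], {"the text above", 10}]

(* An interpreter turns raw text (here a stand-in for LLM output)
   into a computable expression, in this case a number. *)
Interpreter["Number"]["42"]
```

In an actual prompt, the filled-in template would be sent to the LLM, and the interpreter would be applied to whatever text comes back.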

But what actually is the Wolfram Prompt Repository? Well, ultimately it’s just part of the general Wolfram Resource System—the same one that’s used for the Wolfram Function Repository, Wolfram Data Repository, Wolfram Neural Net Repository, Wolfram Notebook Archive, and many other things.

And so, for example, the "Yoda" prompt is in the end represented by a symbolic ResourceObject that’s part of the Resource System:

Open up the display of this resource object, and we’ll immediately see various pieces of metadata (and a link to documentation), as well as the ultimate canonical UUID of the object:

Everything that needs to use the prompt—Chat Notebooks, LLMPrompt, LLMResourceFunction, etc.—just works by accessing appropriate parts of the ResourceObject, so that for example the “hero image” (used for the persona icon) is retrieved like this:

There’s a lot of important infrastructure that “comes for free” from the general Wolfram Resource System—like efficient caching, automatic updating, documentation access, etc. And things like LLMPrompt follow the exact same approach as things like NetModel in being able to immediately reference entries in a repository.

What’s in the Prompt Repository So Far

We haven’t been working on the Wolfram Prompt Repository for very long, and we’re just opening it up for outside contributions now. But already the Repository contains (as of today) about two hundred prompts. So what are they so far? Well, it’s a range. From “just for fun”, to very practical, useful and sometimes quite technical.

In the “just for fun” category, there are all sorts of personas, including:

There are also slightly more “practical” personas, like SupportiveFriend and SportsCoach, which can be more helpful at some times than others:

Then there are “functional” ones like NutritionistBot, etc.—though most of these are still very much under development, and will advance considerably when they are hooked up to tools, so they’re able to access accurate computable knowledge, external data, etc.

But the largest category of prompts so far in the Prompt Repository is function prompts: prompts that take text you supply and perform operations on it. Some are based on straightforward (at least for an LLM) text transformations:

There are all sorts of text transformations that can be useful:

Some function prompts—like Summarize, TLDR, NarrativeToResume, etc.—can be very useful in making text easier to assimilate. And the same is true of things like LegalDejargonize, MedicalDejargonize, ScientificDejargonize, BizDejargonize—or, depending on your background, the *Jargonize versions of these:

Some text transformation prompts seem to perhaps make use of a little more “cultural awareness” on the part of the LLM:

Some function prompts are for analyzing text (or, for example, for doing educational assessments):

Sometimes prompts are most useful when they’re applied programmatically. Here are two synthesized sentences:

Now we can use the DocumentCompare prompt to compare them (something that might, for example, be useful in regression testing):

There are other kinds of “text analysis” prompts, like GlossaryGenerate, CharacterList (characters mentioned in a piece of fiction) and LOCTopicSuggest (Library of Congress book topics):

There are lots of other function prompts already in the Prompt Repository. Some (like FilenameSuggest and CodeImport) are aimed at doing computational tasks. Others make use of common-sense knowledge. And some are just fun. But, yes, writing good prompts is hard, and what’s in the Prompt Repository will gradually improve. And when there are bugs, they can be pretty weird. For example, PunAbout is supposed to generate a pun about some topic, but here it decides to protest and say it must generate three:

The final category of prompts currently in the Prompt Repository are modifier prompts, intended as a way to modify the output generated by the LLM. Sometimes modifier prompts can be essentially textual:

But often modifier prompts are intended to create output in a particular form, suitable, for example, for interpretation by an interpreter in LLMFunction, etc.:

So far the modifier prompts in the Prompt Repository are fairly simple. But once there are prompts that make use of tools (i.e. call back into Wolfram Language during the generation process) we can expect modifier prompts that are much more sophisticated, useful and robust.

Adding Your Own Prompts

The Wolfram Prompt Repository is set up to be a curated public collection of prompts where it’s easy for anyone to submit a new prompt. But—as we’ll explain—you can also use the framework of the Prompt Repository to store “private” prompts, or share them with specific groups.

So how do you define a new prompt in the Prompt Repository framework? The easiest way is to fill out a Prompt Resource Definition Notebook:

You can get this notebook here, or from the Submit a Prompt button at the top of the Prompt Repository website, or by evaluating CreateNotebook["PromptResource"].

The setup is directly analogous to the ones for the Wolfram Function Repository, Wolfram Data Repository, Wolfram Neural Net Repository, etc. And once you’ve filled out the Definition Notebook, you’ve got various choices:

Submit to Repository sends the prompt to our curation team for our official Wolfram Prompt Repository; Deploy deploys it for your own use, and for people (or AIs) you choose to share it with. If you’re using the prompt “privately”, you can refer to it using its URI or other identifier (if you use ResourceRegister you can also just refer to it by the name you give it).

OK, so what do you need to specify in the Definition Notebook? The most important part is the actual prompt itself. And quite often the prompt may just be a (carefully crafted) piece of plain text. But ultimately—as discussed elsewhere—a prompt is a symbolic template, that can include parameters. And you can insert parameters into a prompt using “template slots”:

(Template Expression lets you insert Wolfram Language code that will be evaluated when the prompt is applied—so you can for example include the current time with Now.)
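The Definition Notebook provides its own interface for inserting these elements. As a rough analogue in ordinary Wolfram Language code, a StringTemplate can combine a positional slot with an embedded expression that is evaluated each time the template is applied. The prompt wording and the sample question below are illustrative assumptions:

```wolfram
(* `1` is a positional template slot; <* ... *> is evaluated when the template is applied. *)
prompt = StringTemplate[
   "The current date and time is <* DateString[Now] *>. Taking that into account, answer the following: `1`"];

(* Fill the slot; the embedded DateString[Now] is evaluated at this moment. *)
TemplateApply[prompt, {"How many days remain in this month?"}]
```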

In simple cases, all you’ll need to specify is the “pure prompt”. But in more sophisticated cases you’ll also want to specify some “outside the prompt” information—and there are some sections for this in the Definition Notebook:

Chat-Related Features is most relevant for personas:

You can give an icon that will appear in Chat Notebooks for that persona. And then you can give Wolfram Language functions which are to be applied to the contents of each chat cell before it is fed to the LLM (“Cell Processing Function”), and to the output generated by the LLM (“Cell Post Evaluation Function”). These functions are useful in transforming material to and from the plain text consumed by the LLM, and supporting richer display and computational structures.

Programmatic Features is particularly relevant for function prompts, and for the way prompts are used in LLMResourceFunction etc.:

There’s “function-oriented documentation” (analogous to what’s used for built-in Wolfram Language functions, or for functions in the Wolfram Function Repository). And then there’s the Output Interpreter: a function to be applied to the textual output of the LLM, to generate the actual expression that will be returned by LLMResourceFunction, or for formatting in a Chat Notebook.

What about the LLM Configuration section?

The first thing it does is to define tools that can be requested by the LLM when this prompt is used. We’ll discuss tools in another post. But as we’ve mentioned several times, they’re a way of having the LLM call Wolfram Language to get particular computational results that are then returned to the LLM. The other part of the LLM Configuration section is a more general LLMConfiguration specification, which can include “temperature” settings, the requirement of using a particular underlying model (e.g. GPT-4), etc.
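As a hedged sketch of the second part, an LLMConfiguration can carry a temperature setting and be passed to an LLM call through the LLMEvaluator option. The temperature value and the query are arbitrary choices for illustration. A particular model can be requested in a similar way, but the form of that specification depends on the LLM service, so it is not shown here:

```wolfram
(* A configuration with a low temperature, for less random output. *)
config = LLMConfiguration[<|"Temperature" -> 0.2|>];

(* Use the configuration for a single LLM call (illustrative query). *)
LLMSynthesize["Suggest a name for a function that reverses a string.",
  LLMEvaluator -> config]
```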

What else is in the Definition Notebook? There are two main documentation sections: one for Chat Examples, and one for Programmatic Examples. Then there are various kinds of metadata.

Of course, at the very top of the Definition Notebook there’s another very important thing: the name you specify for the prompt. And here—with the initial prompts we’ve put into the Prompt Repository—we’ve started to develop some conventions. Following typical Wolfram Language usage we’re “camel-casing” names (so it’s "TitleSuggest" not "title suggest"). Then we try to use different grammatical forms for different kinds of prompts. For personas we try to use noun phrases (like "Cheerleader" or "SommelierBot"). For functions we usually try to use verb phrases (like "Summarize" or "HypeUp"). And for modifiers we try to use past-tense verb forms (like "Translated" or "HaikuStyled").

The overall goal with prompt names—like with ordinary Wolfram Language function names—is to provide a summary of what the prompt does, in a form that’s short enough that it appears a bit like a word in computational language input, chats, etc.

OK, so let’s say you’ve filled out a Definition Notebook, and you Deploy it. You’ll get a webpage that includes the documentation you’ve given—and looks pretty much like any of the pages in the Wolfram Prompt Repository. And now if you want to use the prompt, you can just click the appropriate place on the webpage, and you’ll get a copyable version that you can immediately paste into an input cell, a chat cell, etc. (Within a Chat Notebook there’s an even more direct mechanism: in the chat icon menu, go to Add & Manage Personas, and when you browse the Prompt Repository, there’ll be an Install button that will automatically install a persona.)

A Language of Prompts

LLMs fundamentally deal with natural language of the kind we humans normally use. But when we set up a named prompt we’re in a sense defining a “higher-level word” that can be used to “communicate” with the LLM—at the least with the kind of “harness” that LLMFunction, Chat Notebooks, etc. provide. And we can then imagine in effect “talking in prompts” and for example building up more and more levels of prompts.

Of course, we already have a major example of something that at least in outline is similar: the way in which over the past few decades we’ve been able to progressively construct a whole tower of functionality from the built-in functions in the Wolfram Language. There’s an important difference, however: in defining built-in functions we’re always working on “solid ground”, with precise (carefully designed) computational specifications for what we’re doing. In setting up prompts for an LLM, try as we might to “write the prompts well” we’re in a sense ultimately “at the mercy of the LLM” and how it chooses to handle things.

It feels in some ways like the difference between dealing with engineering systems and with human organizations. In both cases one can set up plans and procedures for what should happen. In the engineering case, however, one can expect that (at least at the level of individual operations) the system will do exactly as one says. In the human case—well, all kinds of things can happen. That is not to say that amazing results can’t be achieved by human organizations; history clearly shows they can.

But—as someone who’s managed (human) organizations now for more than four decades—I think I can say the “rhythm” and practices of dealing with human organizations differ in significant ways from those for technological ones. There’s still a definite pattern of what to do, but it’s different, with a different way of going back and forth to get results, different approaches to “debugging”, etc.

How will it work with prompts? It’s something we still need to get used to. But for me there’s immediately another useful “comparable”. Back in the early 2000s we’d had a decade or two of experience in developing what’s now Wolfram Language, with its precise formal specifications, carefully designed with consistency in mind. But then we started working on Wolfram|Alpha—where now we wanted a system that would just deal with whatever input someone might provide. At first it was jarring. How could we develop any kind of manageable system based on boatloads of potentially incompatible heuristics? It took a little while, but eventually we realized that when everything is a heuristic there’s a certain pattern and structure to that. And over time the development we do has become progressively more systematic.

And so, I expect, it will be with prompts. In the Wolfram Prompt Repository today, we have a collection of prompts that cover a variety of areas, but are almost all “first level”, in the sense that they depend only on the base LLM, and not on other prompts. But over time I expect there’ll be whole hierarchies of prompts that develop (including metaprompts for building prompts, etc.). And indeed I won’t be surprised if in this way all sorts of “repeatable lumps of functionality” are found, that actually can be implemented in a direct computational way, without depending on LLMs. (And, yes, this may well go through the kind of “semantic grammar” structure that I’ve discussed elsewhere.)

But as of now, we’re still just at the point of first launching the Wolfram Prompt Repository, and beginning the process of understanding the range of things—both useful and fun—that can be achieved with prompts. But it’s already clear that there’s going to be a very interesting world of prompts—and a progressive development of “prompt language” that in some ways will probably parallel (though at a considerably faster rate) the historical development of ordinary human languages.

It’s going to be a community effort—just as it is with ordinary human languages—to explore and build out “prompt language”. And now that it’s launched, I’m excited to see how people will use our Prompt Repository, and just what remarkable things end up being possible through it.